In the previous article, we already considered the question “Can RAID be used with SSDs?” using Kingston drives as an example, but only for RAID 0. In this article, we look at how professional and consumer NVMe solutions can be used in the most popular RAID levels and discuss the compatibility of Broadcom controllers with Kingston drives.

A little about RAID 0 from SSDs

When talking about improving the performance of the disk subsystem, we primarily mean RAID 0 arrays of two drives, the simplest and most common configuration. It is precisely such arrays that make the most sense for maximizing performance. Data is split into fixed-size blocks that are written to the drives in turn, so in theory performance multiplies with the number of drives. However, this reduces the reliability of storage, since the failure of even one disk means the loss of all data. The total capacity of a RAID 0 array equals the sum of the capacities of all its drives, and it can be built from two, three or more disks. Thanks to its obvious performance scaling and lack of capacity overhead, RAID 0 remains the most popular type of RAID array.
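Both properties of striping described above (capacities add up, but any single failure takes out the whole set) can be put into numbers. This is a minimal Python sketch that assumes identical drives and independent failures. The 1% annualized failure rate in the demo is a hypothetical input, not a figure for any specific SSD:

```python
def raid0_capacity_gb(drive_gb: float, drives: int) -> float:
    """Usable capacity of a RAID 0 set built from identical drives."""
    # Striping reserves nothing for redundancy: every member adds its full size.
    return drive_gb * drives


def raid0_loss_probability(afr: float, drives: int) -> float:
    """Chance of losing the whole array within a year.

    Assumes every drive fails independently with the same annualized
    failure rate `afr` (a hypothetical input, e.g. 0.01 for 1%).
    """
    # The array survives only if *all* members survive.
    survive_all = (1.0 - afr) ** drives
    return 1.0 - survive_all


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        print(f"{n} x 240 GB: {raid0_capacity_gb(240, n):.0f} GB, "
              f"annual loss risk {raid0_loss_probability(0.01, n):.2%}")
```

Under these assumptions, the risk of losing the array grows almost linearly with the number of members, while capacity grows exactly linearly.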

RAID 0 arrays are supported by most mid-range and high-end motherboards. Keep in mind, however, that the best choice for building RAID 0 from SSDs is a motherboard based on the latest-generation Intel chipsets. The advantage of the Intel H87, Q87 and Z87 is that, on the one hand, they offer more than two SATA 6 Gb/s ports and, on the other, they work with the advanced Intel Rapid Storage Technology (RST) driver. This driver is specifically optimized for RAID 0 arrays of SSDs and currently offers unique features: support for the TRIM command and direct access from drive diagnostic and maintenance utilities to every disk in the array. No other controllers currently offer this functionality. On other platforms, the RAID array is presented to the system as a single virtual disk, with no way to access the individual SSDs in it.

This means that when building a RAID 0 array on the built-in controller of Intel 8-series chipsets, you don't have to worry about SSD performance degrading as the drives move from a fresh to a used state. In addition, you keep the ability to monitor the physical condition of the drives in the array, which is of great practical importance. As already mentioned, one of the most unpleasant properties of a striped array is that it is less reliable than a single SSD: the failure of one drive destroys the entire array. Modern SSDs have extensive self-diagnostic tools, and the set of SMART parameters they report lets you track their wear and health quite reliably. Therefore, Intel RST's ability to read the SMART data of the drives in an array is very useful both for preventing failures and data loss and simply for peace of mind.

RAID 5 based on Kingston SSD and Broadcom controllers

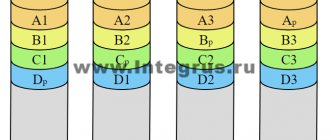

To build a RAID 5 array, we need at least three drives. Data is striped across them (written cyclically to all drives in the array) but not duplicated. This level has a more complex structure, because it introduces the concept of a “checksum” (or “parity”): the result of the logical XOR (exclusive OR) function applied to the data blocks. This is why the array needs at least three drives (and supports up to 32). Parity information is distributed across all drives in the array rather than stored on a dedicated one.
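The parity mechanism described above can be illustrated with a few lines of Python. In this minimal sketch, the XOR of the data blocks in a stripe gives the parity block, and the XOR of all surviving blocks recreates a missing one. The `parity_drive` rotation is an assumed layout chosen for illustration. The text does not specify how Broadcom controllers place parity.

```python
from functools import reduce
from typing import Sequence


def xor_blocks(blocks: Sequence[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks from one stripe."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks in a stripe must have the same length")
    # zip(*blocks) yields the i-th byte of every block; XOR them together.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_drive(stripe: int, drives: int) -> int:
    """Member drive that holds parity for a given stripe.

    Hypothetical rotation (parity moves one drive to the left on each stripe);
    real controllers may use a different layout.
    """
    return (drives - 1 - stripe % drives) % drives


if __name__ == "__main__":
    data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]  # three data blocks of one stripe
    parity = xor_blocks(data)                       # stored on the fourth drive
    # Simulate losing data[1]: XOR of every surviving block restores it.
    rebuilt = xor_blocks([data[0], data[2], parity])
    print(parity.hex(), rebuilt == data[1])
    print([parity_drive(s, 4) for s in range(6)])
```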

For an array of four Kingston DC500R SATA SSDs with a capacity of 3.84 TB each, we get 11.52 TB of usable space, and 3.84 TB goes to checksums. If we combine 16 Kingston DC1000M U.2 NVMe drives of 7.68 TB each into RAID 5, we get 115.2 TB and lose only 7.68 TB. As you can see, the more drives there are, the smaller the share of capacity spent on parity (25% with four drives, 6.25% with sixteen). More drives also raise the overall write performance of RAID 5, and sequential reads approach RAID 0 levels.
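The capacity figures above follow from a one-line rule: with identical drives, RAID 5 gives up one drive's worth of space to parity. Here is a minimal Python check using the two Kingston configurations from the text. The function names are illustrative.

```python
def raid5_usable_tb(drive_tb: float, drives: int) -> float:
    """Usable RAID 5 capacity for identical drives: one drive's worth goes to parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return drive_tb * (drives - 1)


def parity_share(drives: int) -> float:
    """Fraction of raw capacity consumed by parity."""
    return 1 / drives


if __name__ == "__main__":
    for name, size_tb, n in [("DC500R", 3.84, 4), ("DC1000M", 7.68, 16)]:
        print(f"{n} x {name} {size_tb} TB -> {raid5_usable_tb(size_tb, n):.2f} TB usable, "
              f"{parity_share(n):.2%} spent on parity")
```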

A RAID 5 disk group provides high throughput (especially for large files) and redundancy with minimal capacity loss. This type of array is best suited to networks that perform many small input/output (I/O) operations simultaneously. However, it should not be used for workloads with a large number of small-block writes, because each such write forces the controller to update parity as well as data. There is one more nuance. If one drive in the array fails, the RAID controller uses parity information to recreate all the missing data, but the array enters degraded mode, and the failure of another drive at that point can be fatal for all data.
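The small-write penalty comes from the standard read-modify-write parity update. This is a minimal Python sketch. It assumes that the controller updates parity incrementally rather than re-reading the whole stripe. That is the usual approach for small writes, but the text does not specify it for Broadcom controllers.

```python
from typing import Sequence


def xor_all(blocks: Sequence[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (full-stripe parity)."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, value in enumerate(block):
            out[i] ^= value
    return bytes(out)


def small_write_parity(old_data: bytes, new_data: bytes, old_parity: bytes) -> bytes:
    """Parity after overwriting one block: P' = P xor D_old xor D_new."""
    return bytes(p ^ o ^ n for p, o, n in zip(old_parity, old_data, new_data))


# Read-modify-write cost of one small write: read old data + old parity,
# then write new data + new parity.
SMALL_WRITE_IOS = 4

if __name__ == "__main__":
    stripe = [b"\x0a\x0b", b"\x0c\x0d", b"\x0e\x0f"]
    parity = xor_all(stripe)
    new_block = b"\xaa\xbb"
    updated = small_write_parity(stripe[1], new_block, parity)
    stripe[1] = new_block
    # The incremental update must match parity recomputed from scratch.
    print(updated == xor_all(stripe), SMALL_WRITE_IOS)
```

In this model, a single small logical write turns into four physical I/Os. By contrast, a full-stripe write can compute parity from the new data alone.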

Choose SSD for RAID: Kingston HyperX 3K

Given the characteristics of striped arrays, the most logical choice for them is proven, stable SSDs that are unlikely to spring unpleasant surprises. Unfortunately, there are not many such options. Even if the state of the flash memory is continuously monitored via SMART, no one is immune to SSD failures caused by controller or firmware bugs. We therefore recommend using SSD models that have been on the market for a long time in RAID arrays. Over that time, users have been able to verify their reliability in practice, and manufacturers have had the chance to fix all identified problems.

Surprisingly, drives built on SandForce-family controllers can be a good option here. These models have undoubtedly been tested by a large army of owners, and all their teething problems have long since been fixed at both the software and hardware levels. Moreover, SSDs with SF-2281 controllers have two more important advantages. First, their set of SMART parameters is very detailed and far exceeds that of other SSDs, giving a thorough picture of the state of the flash memory. Second, SandForce drives have a powerful set of technologies (in particular, DuraWrite and RAISE) aimed at extending flash memory life. Therefore, in terms of reliability, they can be considered one of the best options among consumer SSDs.

We should not forget about price either. SSDs on SandForce controllers are now cheaper than ever, which greatly increases their appeal. Of course, their performance is far from the top level, but striped RAID arrays do not really need high-speed SSDs. In such configurations, high performance comes primarily from the chipset's SATA controller and from the very principle on which RAID arrays operate.

When choosing among the numerous suppliers of SF-2281-based SSDs, it makes sense to pick the largest and most reputable ones. Some will probably prefer Intel SSDs, but we liked the Kingston HyperX 3K drives, which are often a little cheaper. These are the drives we used in our experiments.

Kingston HyperX 3K series drives are typical solutions based on second-generation SandForce controllers. We first encountered them more than a year ago, but a lot has changed since then. The 25 nm flash memory produced by the IMFT consortium, which was previously installed in them, has become scarce, so Kingston now uses completely different memory: Toshiba's 19 nm MLC NAND with a Toggle Mode interface. It must be said that the flash memory was replaced without any announcement, even though the performance figures in the specifications also changed somewhat.

But Kingston HyperX 3K drives look exactly the same today as they did a year and a half ago:

Drives in this series have kept their attractive appearance and high build quality. Inside, the boards of the updated Kingston HyperX 3K models differ from the earlier ones both in the set of flash memory chips and in color.

Kingston HyperX 3K 240 GB

Kingston HyperX 3K 480 GB

The specifications of the Kingston HyperX 3K models with a capacity of 240 and 480 GB with Toshiba flash memory participating in the tests are shown in the following table:

| Manufacturer | Kingston | Kingston |
|---|---|---|
| Series | HyperX 3K | HyperX 3K |
| Model number | SH103S3/240G | SH103S3/480G |
| Form factor | 2.5 inches | 2.5 inches |
| Interface | SATA 6 Gb/s | SATA 6 Gb/s |
| Capacity | 240 GB | 480 GB |
| **Configuration** | | |
| Memory chips: type, interface, process technology, manufacturer | MLC, Toggle Mode DDR, 19 nm, Toshiba | MLC, Toggle Mode DDR, 19 nm, Toshiba |
| Memory chips: number of chips / NAND devices per chip | 16/2 | 16/4 |
| Controller | SandForce SF-2281 | SandForce SF-2281 |
| Buffer: type, size | None | None |
| **Performance** | | |
| Max. sustained sequential read speed | 555 MB/s | 540 MB/s |
| Max. sustained sequential write speed | 510 MB/s | 450 MB/s |
| Max. random read speed (4 KB blocks) | 86,000 IOPS | 74,000 IOPS |
| Max. random write speed (4 KB blocks) | 73,000 IOPS | 32,000 IOPS |
| **Physical characteristics** | | |
| Power consumption: idle / read-write | 0.455 W / 2.11 W | 0.455 W / 2.11 W |
| Shock resistance | 20 g | 20 g |
| MTBF (mean time between failures) | 1 million hours | 1 million hours |
| TBW (total bytes written) | 192 TB | 384 TB |
| AFR (annualized failure rate) | n/a | n/a |
| Overall dimensions: L x H x D | 100 x 69.85 x 9.5 mm | 100 x 69.85 x 9.5 mm |
| Weight | n/a | n/a |
| Warranty period | 3 years | 3 years |
| Average retail price, rub. | | |

Note that the 480 GB Kingston HyperX 3K is noticeably slower than the 240 GB version. The SF-2281 controller performs best with four-way interleaving of NAND devices in each channel, while the eight-way interleaving needed to reach 480 GB introduces some delays. The use of flash memory with a Toggle Mode interface only made this effect worse. Judging by the specifications, the 240 GB Kingston HyperX 3K became slightly faster than its predecessor with Intel memory, while the 480 GB version lost performance.
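The four-way and eight-way figures follow from the chip counts in the table. Here is a quick check in Python. It assumes the eight-channel layout of the SF-2281 and uses the “chips / NAND devices per chip” values listed above. The function name is illustrative.

```python
SF2281_CHANNELS = 8  # the SF-2281 drives its NAND over eight channels


def devices_per_channel(chips: int, devices_per_chip: int,
                        channels: int = SF2281_CHANNELS) -> int:
    """How many NAND devices (dies) share each controller channel: the interleave depth."""
    total = chips * devices_per_chip
    if total % channels:
        raise ValueError("NAND devices do not divide evenly across channels")
    return total // channels


if __name__ == "__main__":
    print("240 GB:", devices_per_channel(16, 2))
    print("480 GB:", devices_per_channel(16, 4))
```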

RAID from SSD - a godsend or nonsense?

Table of contents

- Introduction

- Test participants

- Summary table of technical characteristics

- Test stand and testing methodology

- Configuring RAID

- Testing in classic benchmarks

- Crystal Disk Mark

- PCMark 7

- Intel NAS Performance Toolkit

- FC-test

- Practical scenarios

- Program installation speed

- Program loading speed

Introduction

Everyone knows SSDs are great.

Many also believe that RAID arrays are the key to high performance. Have you ever wanted to build a RAID from SSDs? Or perhaps you have wondered which is the better deal: buying one large disk or combining several small ones? This article should help you make that choice.

Test participants

There are no new drives this time; all of them have appeared in earlier articles. The only difference is their quantity.

OCZ Vertex 3 Max IOPS, 128 GB


A single Vertex 3 Max IOPS was tested in the Vertex 4 review, where it performed as a mid-ranger in the 128 GB class.

Before testing in arrays, the firmware was updated to version 2.22. Incidentally, CrystalDiskInfo 5.0 can now read the parameters of disks inside a RAID.

Crucial M4, 64 GB

This SSD was featured in an article about Plextor drives and proved very fast for its capacity. The main goal here is to see how an array of small disks compares with one large disk.

The latest available firmware was used, namely 000F.

WD Caviar Blue, 500 GB

This drive is a veteran in the full sense of the word. It was tested in a review of caching SSDs, and we first met the AAKX line back in 2010. Although Western Digital is already producing terabyte platters in full swing, this hard drive has not yet been discontinued. Many people use hard drives up to ten years old and do not replace them until they fail, so a two-year-old model is arguably faster than the average disk in use. If that describes your situation, you can estimate how much faster SSDs will be.

The SMART counters have grown since our last encounter, but the drive is still in good condition.

Summary table of technical characteristics

| Model | OCZ Vertex 3 Max IOPS | Crucial M4 | WD Caviar Blue |
|---|---|---|---|
| Model number | VTX3MI-25SAT3-120G | CT064M4SSD2 | WD5000AAKX-001CA0 |
| Capacity, GB | 120 | 64 | 500 |
| Form factor | 2.5” | 2.5” | 3.5” |
| Interface | SATA-III | SATA-III | SATA-III |
| Firmware version | 2.15 | 0309 | 15.01H15 |
| Internals | SandForce SF-2281 controller + Toshiba 34 nm synchronous Toggle Mode flash | Marvell 88SS9174 controller + Micron 25 nm synchronous ONFI flash | 1 x 500 GB platter, 7200 rpm, 2 heads |
| Cache, MB | None | 128 | 16 |

Test stand and testing methodology

Test stand:

- Motherboard: ASRock Z68 Extreme7 Gen3 (BIOS 1.30);

- Processor: Intel Core i7-2600K, 4.8 GHz (100 x 48);

- Cooling system: GELID Tranquillo Rev.2;

- RAM: G.SKILL Ripjaws Z, F3-17000CL9Q-16GBZH (1866 MHz, 8-10-9-26 1N) 2×4 GB;

- Hard drive: WD Caviar Blue, WD3200AAKX-001CA0, 320 GB;

- Video card: ASUS GTX 580 DirectCu II, 1.5 GB GDDR5;

- Power supply: Hipro HP-D6301AW, 630 W.

The system boot process and in-game videos were recorded via HDMI using the AVerMedia AVerTV CaptureHD TV tuner on another PC.

System software:

- Operating system: Windows 7 x64 SP1 Ultimate RUS;

- Operating system updates: all as of 03/08/2012, including DirectX;

- Driver for video card: NVIDIA GeForce 295.73;

- Driver for SATA controller: Intel RST 11.1, controller operates in RAID mode.

Testing methodology

Global settings:

- There is no antivirus installed in the OS that can affect the measurement results; Windows Defender is disabled.

- For the same reason, the file indexing service, update service, and scheduled defragmentation are disabled.

- Windows UAC is disabled, since it prevented some test programs from working.

- System Restore and hibernation are disabled to save disk space.

- Superfetch disabled.

- Swap file – 1 GB.

- Power profile: High performance; hard disks are never turned off.

- At the time of taking measurements, background monitoring programs such as Crystal Disk info, HWMonitor, perfmon counters and others are not used.

- The disk write cache is enabled unless otherwise specified (in the device manager, in the disk properties, on the “policy” tab, the “allow write caching for this device” checkbox is checked). "Enhanced performance" is not activated. TRIM is enabled (DisableDeleteNotify=0). Usually the disk is configured this way by default, but you still need to make sure.

- All drives were connected to a SATA-III port unless otherwise noted.

The set of test applications is as follows:

- Crystal Disk Mark 3.0 x64.

A popular test that measures disk speed in eight modes: sequential reads and writes, and random reads and writes in large 512 KB blocks, in small 4 KB blocks, and in the same 4 KB requests at a queue depth of 32 (which checks the efficiency of NCQ and load-parallelization mechanisms). The default settings were used: five passes with incompressible data over a 1000 MB test area.

- PCMark 7 x64.

The latest version of the Futuremark test suite.

- Intel NAS Performance Toolkit 1.7.1.

NASPT is a very powerful test, comparable in functionality to IOMeter and designed primarily for testing network drives. It is also quite suitable for testing local disks.

- FC-test 1.0 build 11.

The program worked with two NTFS partitions that split all the formattable space in half. The computer was rebooted before each measurement, and the entire process was fully automated. The templates used as test sets were Install (414 files totaling 575 MB), ISO (3 files totaling 1600 MB) and Programs (8504 files totaling 1380 MB). For each set, we measured the speed of writing the entire set of files to disk (Create test), reading these files from disk (Read), copying files within one logical drive (Copy near) and copying them to a second logical drive (Copy far). Aggressive Windows write caching distorts the Create results, and on an SSD the two copy methods do not differ, so I will publish only the two remaining results for each template.

- WinRAR 4.11 x64.

In this and all subsequent tests, the drives under test served as system drives: a reference Windows image, including all the necessary programs and distributions, was deployed with Acronis True Image 12. The test file was an archive of the Windows 7 folder: 83,000 files with a total size of 15 GB, compressed with standard settings to 5.6 GB. Measurements showed that disk speed has little effect on packing, so to save time only unpacking into an adjacent folder was tested.

- Microsoft Office 2010 Pro Plus.

The installation time was measured from the distribution, an ISO copy of the original DVD mounted in Daemon Tools.

- Photoshop CS5.

Everyone's favorite graphics editor was installed from an ISO image mounted with Daemon Tools. Both versions (x32 and x64) with an English interface were installed, and the installation time was measured. As a benchmark, we used a scheme from this specialized forum, namely this script, which creates an 18661 × 18661 pixel image and performs several actions on it. The total execution time was measured without pauses between operations. Properly speaking, such tasks call for a huge amount of RAM, so testing drives here essentially comes down to checking the speed of working with the scratch file and the Windows swap file. Photoshop was allowed to use 90% of memory; all other settings remained at default.

- Booting Windows 7.

Three intervals were measured: from pressing the power button until the Windows logo appeared, until the Windows desktop appeared, and until applications finished loading. Word 2010, Excel 2010, Acrobat Reader X and Photoshop CS5 were placed in Startup, each opening its corresponding file; in addition, Daemon Tools and Intel RST started in the background. The end of loading was taken to be the moment the photo appeared in Photoshop, since the other applications had launched earlier.

- Launching programs.

In the already booted OS, a batch file was run that simultaneously launched the aforementioned Word, Excel, Acrobat Reader and Photoshop with their documents, as well as WinRAR, which opened a test archive with Windows. The longest operation is reading the files in the archive and counting them.

- Crysis Warhead.

Once a popular shooter, it was used to test installation and loading speed (from leaving the desktop to the start of the 3D scene). It was previously found that this game depends on disk speed more than most, so it makes an excellent drive benchmark. The game was installed from the original DVD, copied onto the system disk as a set of folders. It was launched through the Crysis Benchmark Tool 1.05 utility with the following settings: Quality Settings: Very High; Display resolution: 1280 x 1024; Global settings: 64 bit, DirectX 10; AntiAliasing: no AA; Loops: 1; Map: ambush flythrough; Time of Day: 9.

- World of Tanks.

A famous MMO. Games of this kind depend heavily on network speed, so all measurements were taken on weekday mornings on the WOT RU2 server, when 30-35 thousand players were online. Internet connection: 100 Mbit/s, in-game ping 20-30 ms. The Himmelsdorf map was loaded at position 1, in a training battle of 4-8 players, with the MS-1 tank. Resolution 1280 x 1024, anti-aliasing disabled, graphics quality very high.

- Lineage II.

Another famous and very disk-dependent MMORPG. We used the official Russian version of Goddess of Destruction: Chapter 1 Awakening and two replay files; the method for playing them back was taken from the 4game.ru forum. The installation time of the distribution was measured, and the Fraps frametimes log was analyzed following the same technique. All settings except screen resolution follow the recommendations: Resolution: 1280 x 1024, 32-bit, 60 Hz, full-screen mode; Textures, Detail, Animation, Effects: Low; Terrain, Characters: Very wide; PC/NPC limit: Max; Weather, Antialiasing, Reflections, Graphics, Shadows, Ground Detail, Enhanced Effects: none.

Creating a RAID 0 array based on Intel RST

Intel has done a lot of work to make creating RAID arrays on its platforms a simple, transparent procedure. Today, the Intel RST driver completely spares users from having to deal with the RAID controller's BIOS. The only thing needed to combine SSDs into arrays is to switch the chipset's integrated SATA controller into RAID mode in the motherboard BIOS.

True, the operating system may cause trouble: after the SATA controller mode is changed, it may refuse to boot and crash with a “blue screen”. This happens because, if the RAID controller was not enabled when the operating system was installed, the necessary driver is deactivated in the OS kernel. In Windows 8 and 8.1, however, Microsoft provides a fairly simple fix via “safe mode” that does not require reinstalling the operating system. Before changing the SATA controller mode, open a command prompt with administrator rights and run `bcdedit /set {current} safeboot minimal`. If the system already fails to boot, first return the SATA controller to its original setting in the BIOS. The command tells the OS to start in safe mode on the next boot, so you can then safely change the SATA controller mode in the BIOS. Once the system has booted into safe mode with RAID enabled, return to normal boot by running `bcdedit /deletevalue {current} safeboot` at the command prompt. The blue screen should no longer appear at startup.

Owners of Windows 7 will have to tinker more seriously before changing the controller mode; in this case, they cannot do without editing the registry. Detailed information on solving this problem is available on the Microsoft website.

After enabling RAID mode and installing the necessary drivers, you can proceed directly to creating the array, which is done with the Intel RST software.

When creating an array, you first need to specify its type. In our case it is RAID 0.

Next, select the drives to be included in the array.

If desired, you can also change the stripe size, that is, the size of the blocks into which written data is split for distribution across the SSDs. However, the default 16 KB is well suited to RAID 0 arrays of SSDs with their very low access times, so in general there is no point in changing it.
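The stripe size determines which SSD serves a given request, and this can be modeled as a simple round-robin mapping. This is a hypothetical Python model. It assumes a plain RAID 0 layout that starts at drive 0 with no metadata offset. Intel RST's actual on-disk layout is not described here, and `locate` and `drives_touched` are illustrative names.

```python
from typing import List, Tuple

STRIPE_BYTES = 16 * 1024  # default stripe size in Intel RST, per the text


def locate(offset: int, drives: int, stripe: int = STRIPE_BYTES) -> Tuple[int, int]:
    """Map an array byte offset to (member drive, byte offset on that drive)."""
    chunk = offset // stripe   # which stripe unit the byte falls into
    drive = chunk % drives     # units are dealt round-robin across drives
    row = chunk // drives      # full rows of units that come before this one
    return drive, row * stripe + offset % stripe


def drives_touched(offset: int, length: int, drives: int,
                   stripe: int = STRIPE_BYTES) -> List[int]:
    """Member drives that a single request of `length` bytes has to hit."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    return sorted({chunk % drives for chunk in range(first, last + 1)})


if __name__ == "__main__":
    print(locate(40 * 1024, drives=2))
    print(drives_touched(0, 4 * 1024, drives=2))
    print(drives_touched(0, 64 * 1024, drives=2))
```

In this model, a 4 KB random request with 16 KB stripes lands on one drive, while a 64 KB request is already spread over both. That is where RAID 0 gains its sequential speed.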

And that’s it – the array is ready.

Note that even though the two Kingston HyperX 3K SSDs are combined in RAID 0, their SMART diagnostics remain fully accessible.

HDD RAID versus one SSD

We think the most common reason someone might wonder about RAID and its relationship to SSDs comes from this particular comparison. So first we'll get this out of the way.

Mechanical hard drives are quite slow, so one popular way to improve performance is to combine two identical drives in a RAID 0 configuration. Data is "striped" across both drives, and they act like a single hard drive, but with (theoretically) twice the transfer speed. Because each drive has a unique portion of your data, you can always have both drives involved in any operation.

Unfortunately, when it comes to raw speed, a single SSD will always beat a RAID 0 of hard drives. Even the fastest and most expensive 10,000 RPM SATA III consumer hard drive tops out at about 200 MB/s, so in theory two of them in RAID 0 reach only about 400 MB/s.

Almost any SATA III SSD comes very close to the interface limit of 600 MB/s. NVMe SSDs, which use the PCIe bus, typically exceed 2000 MB/s in reads.
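The comparison comes down to simple arithmetic. This is a minimal Python sketch that uses only the figures quoted above. It assumes ideal RAID 0 scaling, which real arrays rarely reach.

```python
SATA3_LIMIT_MB_S = 600    # SATA 6 Gb/s interface ceiling quoted above
HDD_10K_MB_S = 200        # fastest 10,000 RPM consumer HDD, per the text
NVME_TYPICAL_MB_S = 2000  # typical NVMe read speed, per the text


def raid0_ideal_mb_s(single_mb_s: float, drives: int) -> float:
    """Best-case RAID 0 throughput: perfect scaling with no controller overhead."""
    return single_mb_s * drives


if __name__ == "__main__":
    pair = raid0_ideal_mb_s(HDD_10K_MB_S, 2)
    print(f"2 x HDD in RAID 0: {pair:.0f} MB/s (best case)")
    print(f"vs SATA SSD: {pair / SATA3_LIMIT_MB_S:.0%}, vs NVMe: {pair / NVME_TYPICAL_MB_S:.0%}")
```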

In other words, if you need pure performance, a single SSD will always be better than a pair of mechanical drives. Even if they are the fastest mechanical drives in the world.

The same goes for reliability and data protection. A RAID 10 setup of four hard drives still gives you twice the speed of a single drive, and you can lose a drive without losing any data. Even so, a single SSD remains the more reliable solution. SSDs tolerate only a limited number of writes, after which they can no longer overwrite existing data, but you can still read all the data on the drive.

Spontaneous SSD failure is extremely rare, but you always have the option of running two SSDs in RAID 1. There is no significant speed gain, but one drive can fail completely without any data loss. We do not recommend spending money on a RAID 1 SSD setup solely for data safety. Most desktop systems are not mission-critical, so it is much more cost-effective to simply back up a disk image to an external drive or to the cloud.

Testing methodology

Testing is carried out under Windows 8.1, which correctly recognizes and services modern solid-state drives. This means that during testing, as in normal everyday use, the TRIM command is supported and actively used. Performance is measured with the drives in a “used” state, achieved by pre-filling them with data. Before each test, the drives are cleaned and serviced with the TRIM command. A 15-minute pause between individual tests gives garbage collection time to run properly. All tests use randomized, incompressible data unless otherwise noted.

Applications and tests used:

- Iometer 1.1.0 RC1

  - Measuring the speed of sequential reads and writes in 256 KB blocks (the most typical block size for sequential operations in desktop tasks). Speed is measured for one minute, after which the average is calculated.

  - Measuring the speed of random reads and writes in 4 KB blocks (the block size used in the vast majority of real-world operations). The test is run twice: without a request queue and with a queue depth of 4 commands (typical for desktop applications that actively work with a complex file system). Data blocks are aligned to the flash memory pages of the drives. Speed is measured for three minutes, after which the average is calculated.

  - Establishing how random read and write speeds with 4 KB blocks depend on the request queue depth (from one to 32 commands). Data blocks are aligned to the flash memory pages of the drives. Speed is measured for three minutes, after which the average is calculated.

  - Establishing how random read and write speeds depend on block size. Blocks from 512 bytes to 256 KB are used, with a request queue depth of 4 commands. Data blocks are aligned to the flash memory pages of the drives. Speed is measured for three minutes, after which the average is calculated.

  - Measuring performance under mixed multi-threaded workloads. The drive receives a variety of commands, both reads and writes with different block sizes. The proportions of the different request types are close to a real desktop load: 75% reads and 25% writes; 75% random and 25% sequential requests; 55% 4 KB blocks, 25% 64 KB and 20% 128 KB. Requests are generated by four parallel threads. Data blocks are aligned to the flash memory pages of the drives. Speed is measured for three minutes, after which the average is calculated.

- CrystalDiskMark 3.0.3

A synthetic test that provides typical performance indicators for solid-state drives, measured on a 1-gigabyte disk area “on top” of the file system. Of the entire set of parameters that can be assessed using this utility, we pay attention to the speed of sequential read and write, as well as the performance of random read and write of 4 KB blocks without a request queue and with a queue depth of 32 commands.

- PCMark 8 2.0

A test that emulates the real disk load typical of various popular applications. A single NTFS partition spanning the entire available capacity is created on the drive under test, and the PCMark 8 Secondary Storage test is run. The results take into account both the final score and the execution speed of the individual test traces generated by various applications.
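The mixed-workload proportions used in Iometer above can be expressed as a small request generator. This Python sketch works under stated assumptions. It only reproduces the quoted ratios and does not drive any disk or use Iometer's access-specification format. It also samples read/write, random/sequential and block size independently, which the text does not state. It also computes the average request size implied by the mix.

```python
import random
from dataclasses import dataclass

READ_SHARE = 0.75    # 75% reads, 25% writes
RANDOM_SHARE = 0.75  # 75% random, 25% sequential
BLOCK_MIX_KB = {4: 0.55, 64: 0.25, 128: 0.20}  # block size (KB) -> share


@dataclass
class Request:
    op: str       # "read" or "write"
    pattern: str  # "random" or "sequential"
    size_kb: int


def expected_block_kb() -> float:
    """Average request size implied by the block-size mix."""
    return sum(size * share for size, share in BLOCK_MIX_KB.items())


def next_request(rng: random.Random) -> Request:
    """Draw one request; the three attributes are sampled independently (an assumption)."""
    op = "read" if rng.random() < READ_SHARE else "write"
    pattern = "random" if rng.random() < RANDOM_SHARE else "sequential"
    size = rng.choices(list(BLOCK_MIX_KB), weights=list(BLOCK_MIX_KB.values()))[0]
    return Request(op, pattern, size)


if __name__ == "__main__":
    rng = random.Random(42)
    sample = [next_request(rng) for _ in range(10_000)]
    reads = sum(r.op == "read" for r in sample) / len(sample)
    print(f"expected block size: {expected_block_kb():.1f} KB, sampled read share: {reads:.3f}")
```

The average works out to 4 × 0.55 + 64 × 0.25 + 128 × 0.20 = 43.8 KB. Small blocks dominate by count, but most of the bytes moved come from the 64 KB and 128 KB requests.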

Features of SSD caching in RAIDIX

RAIDIX implements a parallel SSD cache with two unique features: incoming requests are divided into RRC (Random Read Cache) and RWC (Random Write Cache) categories, and log-structured recording is used in its own eviction algorithms.

RRC and RWC categories

The cache space is divided into two functional categories: RRC for random read requests and RWC for random write requests. Each category has its own admission and eviction rules.

A dedicated detector classifies incoming requests and decides which category, if any, they enter.

Figure 4. SSD cache operation diagram in RAIDIX

Admission to RRC

Only random requests accessed more than twice (tracked in a ghost queue) are admitted to the RRC area.

Admission to RWC

The RWC area admits all random write requests whose block size is smaller than a configurable threshold (32 KB by default).
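The two admission rules can be sketched as a small classifier. This is a hypothetical Python model, not RAIDIX code. The class and method names are invented, and the ghost queue is modeled as an unbounded counter. Sequential requests are assumed to bypass the cache, based on the statement that both areas hold random requests.

```python
from collections import Counter
from typing import Optional

RRC_MIN_HITS = 2       # admitted once the access count exceeds 2
RWC_MAX_BLOCK_KB = 32  # default RWC block-size threshold


class Detector:
    """Hypothetical request classifier modeled on the RRC/RWC rules above."""

    def __init__(self) -> None:
        # Ghost queue: access counts for random-read LBAs whose data is NOT cached.
        # Unbounded here for simplicity; a real ghost queue would be size-limited.
        self.ghost: Counter = Counter()

    def classify(self, op: str, is_random: bool, lba: int, size_kb: int) -> Optional[str]:
        if not is_random:
            return None  # in this sketch, sequential I/O bypasses the SSD cache
        if op == "write":
            return "RWC" if size_kb < RWC_MAX_BLOCK_KB else None
        self.ghost[lba] += 1
        if self.ghost[lba] > RRC_MIN_HITS:
            del self.ghost[lba]  # promoted: stop tracking it as a ghost entry
            return "RRC"
        return None


if __name__ == "__main__":
    d = Detector()
    print([d.classify("read", True, 100, 4) for _ in range(3)])
    print(d.classify("write", True, 7, 8), d.classify("write", True, 7, 64))
```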

Features of Log-structured recording

Log-structured recording is a method of sequentially recording blocks of data without taking into account their logical addressing (LBA, Logical Block Addressing).

Figure 5. Visualization of the Log-structured recording principle

In RAIDIX, log-structured recording is used to fill allocated regions (1 GB in size) within RRC and RWC. These regions serve as an additional ranking unit when cache space is overwritten.
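Log-structured filling of the cache regions can be modeled in a few lines. This is a hypothetical Python sketch. The region size is reduced from 1 GB to a handful of blocks so the behavior is visible. The class name and bookkeeping are illustrative assumptions rather than RAIDIX internals.

```python
from typing import Dict, List, Tuple


class LogArea:
    """Hypothetical log-structured cache area split into fixed-size regions.

    Blocks are appended in arrival order regardless of their LBA; a region is
    filled completely before the next one is opened.
    """

    def __init__(self, region_blocks: int) -> None:
        self.region_blocks = region_blocks           # stands in for the 1 GB region size
        self.regions: List[List[int]] = []           # each region: LBAs in write order
        self.where: Dict[int, Tuple[int, int]] = {}  # LBA -> (region, slot) of newest copy

    def append(self, lba: int) -> Tuple[int, int]:
        if not self.regions or len(self.regions[-1]) >= self.region_blocks:
            self.regions.append([])  # open a fresh region
        region = self.regions[-1]
        region.append(lba)
        # Rewriting an LBA just appends a new copy; the old slot becomes stale.
        self.where[lba] = (len(self.regions) - 1, len(region) - 1)
        return self.where[lba]


if __name__ == "__main__":
    area = LogArea(region_blocks=4)
    print([area.append(lba) for lba in (900, 3, 512, 77, 10)])
    print(area.regions)
```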

Eviction from the RRC buffer

The coldest RRC region is selected, and new data from the ghost queue (data requested more than twice) is written into it.

Eviction from the RWC buffer

A region is selected on a FIFO basis, and its data blocks are then evicted sequentially in LBA (Logical Block Address) order.
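Both eviction policies can be sketched in Python. This is a hypothetical model. The text does not describe how RAIDIX measures “coldness”, so a per-region hit counter is assumed, and the function names are illustrative. Flushing the evicted RWC blocks in LBA order lets the backend receive them as a more sequential stream, which is presumably the point of this rule.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple


@dataclass
class Region:
    region_id: int
    lbas: List[int] = field(default_factory=list)  # blocks in log (arrival) order
    hits: int = 0  # hypothetical heat counter used to find the "coldest" region


def evict_rwc(fifo: Deque[Region]) -> Tuple[int, List[int]]:
    """Evict the oldest RWC region; its blocks are flushed in ascending LBA order."""
    region = fifo.popleft()
    return region.region_id, sorted(region.lbas)


def refill_rrc(regions: List[Region], ghost_lbas: List[int]) -> int:
    """Overwrite the coldest RRC region with data promoted from the ghost queue."""
    coldest = min(regions, key=lambda r: r.hits)
    coldest.lbas = list(ghost_lbas)
    coldest.hits = 0
    return coldest.region_id


if __name__ == "__main__":
    rwc = deque([Region(0, [900, 3, 512]), Region(1, [7])])
    print(evict_rwc(rwc))
    rrc = [Region(0, [1], hits=5), Region(1, [2], hits=1)]
    print(refill_rrc(rrc, [42, 43]), rrc[1].lbas)
```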

Test bench

The test platform is a computer with a Gigabyte GA-Z87X-UD3H motherboard, a Core i3-4340 processor and 4 GB of DDR3-1600 RAM. The drives are connected to the SATA 6 Gb/s controller built into the motherboard chipset, operating in AHCI or RAID mode. The driver is Intel Rapid Storage Technology (RST) 12.9.0.1001, and the operating system is Windows 8.1 Enterprise x64.

The volume and data transfer rate in benchmarks are indicated in binary units (1 KB = 1024 bytes).

Chasing speed records

Setting up a RAID array: to be able to boot from a RAID array, you need to configure it at the hardware level in UEFI.

A RAID 0 array of two drives works faster than a single drive because the system reads and writes data to both disks simultaneously. If you configure a hardware RAID array in BIOS/UEFI and then run Windows Setup, two ordinary SATA drives can deliver the same data transfer speeds as an entry-level NVMe drive. We wanted to combine two high-speed NVMe drives in the same way and break speed records.

Creating a RAID array

Windows requires the Intel RAID drivers and Intel's proprietary Rapid Storage Technology software.

The first obstacle on the way to an NVMe RAID array is the hardware. The motherboard must have two NVMe slots and be able to combine them using the RAID function of the Intel chipset, and the system must still boot afterwards. In principle, top-end motherboards with the Intel Z170 chipset and the latest Z270 (for Kaby Lake processors) can handle this task.

We installed two Samsung 960 Pro SSDs on a Gigabyte Z270X Gaming 7 motherboard and then configured hardware RAID in UEFI. With an early version of the motherboard firmware, this took an extra step: we first had to switch the SATA controller to RAID mode, and only then could we combine both NVMe drives into a RAID 0 array in the "Peripheral | EZ Raid" menu. The resulting array had twice the capacity of a single drive.

The RAID array was ready in a few clicks. To install Windows 10, we copied the Intel Rapid Storage Technology driver from the disc supplied with the motherboard to a USB flash drive. When Windows Setup asked for the system drive, we loaded the driver with the corresponding button, after which the array attached to the Intel controller appeared as the installation target.

After installation in UEFI mode, the system boots automatically from the RAID array, which behaves like a regular drive in everyday use. However, because the operating system now communicates only with the Intel RAID controller and not directly with the drives, we could not use Samsung's NVMe driver to unlock the full potential of the 960 Pro, which cost us some speed.

RAID 0: benefits and benchmarks

With the correct UEFI settings, our test system boots in less than ten seconds. A complete installation of LibreOffice, which writes 7000 files, took 21 seconds. The benchmarks (see above) quantify both the performance of the RAID array and its limits: instead of the theoretical 100% speed-up over a single drive, we got only a 20% increase in read speed and 32% in write speed.

We achieved higher speeds with a method that is of little practical use: using an adapter, we connected the second SSD to the graphics card PCIe slot, booted from a third, SATA SSD, and combined both NVMe drives into one software RAID array in Windows, using Samsung's drivers.

M.2/PCIe adapter: if necessary, an M.2 form-factor SSD can be connected to a PCIe x4 slot via an adapter.

Such an array (which, however, cannot be used as a boot disk) outperformed a single drive by 43% in reading and by as much as 82% in writing.
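To compare the two approaches, the measured gains can be expressed as a fraction of ideal scaling, where a two-drive RAID 0 would be exactly twice as fast as one drive. The function name is ours; the percentages are the ones reported above.

```python
def scaling_efficiency(gain_percent, drives=2):
    """Fraction of ideal linear scaling achieved by an N-drive RAID 0.

    gain_percent is the measured speed-up over a single drive; an ideal
    N-drive stripe would be N times as fast as one drive.
    """
    speedup = 1 + gain_percent / 100
    return speedup / drives

measured = {
    "Intel RST RAID 0, read": 20,
    "Intel RST RAID 0, write": 32,
    "Windows software RAID, read": 43,
    "Windows software RAID, write": 82,
}
for name, gain in measured.items():
    print(f"{name}: {scaling_efficiency(gain):.1%} of ideal")
```

By this measure, the Intel RST array reaches 60% to 66% of ideal scaling, while the software array reaches 71.5% in reading and 91% in writing.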

The results of the simple but fairly fast ATTO Disk Benchmark showed that even this combination does not exceed 4 GB/s, the maximum bandwidth of the DMI bus that connects the processor to the chipset. Intel will need to redesign the platform soon if it is to support the enormous transfer rates of NVMe drives.
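The 4 GB/s ceiling can be checked with simple arithmetic. The sketch assumes that DMI 3.0 (used with the Z170 and Z270 chipsets) behaves like a PCIe 3.0 x4 link: four lanes at 8 GT/s with 128b/130b encoding. It computes the raw one-direction bandwidth before protocol overhead, so real transfers stay somewhat below it.

```python
def link_bandwidth_gb_s(lanes, gt_per_s, payload_bits=128, line_bits=130):
    """Raw one-direction bandwidth of a PCIe-style link in GB/s (10**9 bytes/s).

    Each lane carries gt_per_s billion transfers (bits) per second, of which
    payload_bits out of every line_bits are data (128b/130b encoding).
    """
    data_bits_per_s = lanes * gt_per_s * 1e9 * payload_bits / line_bits
    return data_bits_per_s / 8 / 1e9

# Assumption: DMI 3.0 treated as a PCIe 3.0 x4 link.
dmi3 = link_bandwidth_gb_s(lanes=4, gt_per_s=8)
dmi3_gib = dmi3 * 1e9 / 2**30   # binary units, as used in the benchmarks
print(f"DMI 3.0: {dmi3:.2f} GB/s = {dmi3_gib:.2f} GiB/s")
```

This gives about 3.94 GB/s, or about 3.67 GiB/s in the binary units used in our benchmarks.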

⇡#Sequential read and write operations, IOMeter

Sequential operations are where the performance scaling of RAID is most visible. The striped array is significantly faster than a single Kingston HyperX 3K 240 GB or 480 GB in both sequential reads and writes.

RAID 1 of 2 hard drives

RAID 1 is one of the most common and affordable options. It uses two hard drives, which is also the minimum number of HDDs or SSDs it can be built from. RAID 1 is designed to provide maximum protection for user data, because every file is written to both drives at the same time. To create it, take two hard drives of equal size, for example 500 GB each, and configure the array in the BIOS. The system will then see a single 500 GB drive rather than a 1 TB one, even though two physical drives are working (the formula is given below). Since all files are written to both disks simultaneously, the second disk is a full backup copy of the first, so if one disk fails you will not lose a single piece of data.

The operating system will not even notice the failure and will keep working with the remaining disk; only a dedicated program that monitors the array will report the problem. You just need to replace the faulty disk with an identical working one, and all the data from the surviving disk will be copied to it automatically while the system keeps running.

The disk volume that the system will see is calculated here using the formula:

V = 1 x Vmin, where V is the total capacity and Vmin is the storage capacity of the smallest hard drive.
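The formula translates directly into a hypothetical helper; the only subtlety is that with drives of different sizes the smallest one limits the whole array.

```python
def raid1_capacity(drive_sizes_gb):
    """Usable RAID 1 capacity, V = 1 x Vmin: every drive holds a full copy,
    so the smallest drive limits the whole array."""
    if len(drive_sizes_gb) < 2:
        raise ValueError("RAID 1 needs at least two drives")
    return min(drive_sizes_gb)

print(raid1_capacity([500, 500]))   # two 500 GB drives, as in the example: 500
```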

⇡#Random read and write operations, IOMeter

Random reads, however, do not show the same impressive speed-up as sequential operations. The diagrams suggest that a RAID 0 array is effective for random operations only when they form a request queue.

When it comes to random write speed, note first that the 480 GB Kingston HyperX 3K shows extremely low results. This oddity stems from the inability of the old second-generation SandForce controller to handle large-capacity SSDs efficiently. This is why RAID 0 arrays of small SSDs can be significantly faster than single SSDs of the same total capacity. At the same time, an array of two Kingston HyperX 3K 240 GB is by no means faster than a single such drive. This need not be a major concern, however: the situation occurs only with random writes.

Let's now take a look at how RAID 0 performance when working with 4K blocks depends on the depth of the request queue.

The graphs above illustrate the same point. In reads, RAID 0 is faster than the single SSDs, and its advantage grows with queue depth. In writes, however, the RAID 0 of Kingston HyperX 3K 240 GB is ahead only of the Kingston HyperX 3K 480 GB, while a single Kingston HyperX 3K 240 GB outperforms the array.
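Why queue depth matters for striping can be shown with a simplified model. If each outstanding random request lands on a uniformly random member disk, the expected number of disks with work to do is N(1 - (1 - 1/N)^QD). This is an idealized assumption that ignores controller overhead and the write-side effects discussed above; the function name is ours.

```python
def expected_busy_disks(queue_depth, disks=2):
    """Expected number of member disks with at least one outstanding request,
    assuming each queued random request lands on a uniformly random disk."""
    return disks * (1 - (1 - 1 / disks) ** queue_depth)

for qd in (1, 2, 4, 8, 32):
    print(f"QD={qd:>2}: {expected_busy_disks(qd):.2f} of 2 disks busy")
```

At QD=1 only one disk of the pair is busy, so a stripe cannot help; at QD=4 about 1.9 disks are busy on average, which is where RAID 0 starts to pull ahead in random reads.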

The next pair of graphs shows the dependence of the performance of random operations on the size of the data block.

As it turns out, a RAID 0 array writes more slowly than its member drives only when operations use 4 KB blocks. This is not surprising. As the graph shows, the Kingston HyperX 3K 240 GB is optimized for 4 KB requests, but the RAID controller converts them into 16 KB requests in accordance with the stripe size we selected. Unfortunately, switching the array to a 4 KB stripe is far from a winning strategy either: it significantly increases the CPU load created by the RAID controller, and it may bring no real speed gain at all.
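The standard RAID 0 address mapping shows why small random requests gain little from striping. In this sketch (a hypothetical helper, with the 16 KB stripe size taken from the text; it does not model the internals of the Intel RST driver), a logical request is cut at stripe boundaries, and each piece goes to disk `stripe % N` at offset `(stripe // N) * stripe_size`.

```python
def split_request(offset, length, stripe_size, disks):
    """Split a logical RAID 0 request into (disk, disk_offset, length) chunks."""
    chunks = []
    end = offset + length
    while offset < end:
        stripe = offset // stripe_size           # global stripe index
        within = offset % stripe_size            # position inside that stripe
        size = min(stripe_size - within, end - offset)
        disk = stripe % disks                    # stripes rotate across disks
        disk_offset = (stripe // disks) * stripe_size + within
        chunks.append((disk, disk_offset, size))
        offset += size
    return chunks

KB = 1024
# A 4 KB request fits inside one 16 KB stripe, so only one SSD does the work.
print(split_request(20 * KB, 4 * KB, 16 * KB, 2))   # [(1, 4096, 4096)]
# A 64 KB request is spread across both SSDs.
print(split_request(0, 64 * KB, 16 * KB, 2))
# [(0, 0, 16384), (1, 0, 16384), (0, 16384, 16384), (1, 16384, 16384)]
```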

To conclude the review of IOmeter results, we suggest taking a look at the performance of drives in a synthetic simulation of heavy mixed disk activity, in which different types of operations are reproduced simultaneously and in several threads.

A RAID 0 array of two Kingston HyperX 3K 240 GB is slightly faster than a single Kingston HyperX 3K 240 GB drive. The Kingston HyperX 3K 480 GB, however, handles mixed loads even better and posts higher results. Still, the differences between the tested configurations in this benchmark are not fundamental.

RAID 10 based on Kingston SSD and Broadcom controllers

So, RAID 0 gives a (theoretically) twofold increase in throughput, and RAID 1 provides reliability. Ideally, the two would be combined, and this is where RAID 10 (or 1+0) comes to the rescue. A RAID 10 array is built from SATA SSDs or NVMe drives, starting at four drives (up to 32), always in an even number, and is essentially a stripe across mirrors. Data is split into fixed-size blocks and striped across the drives as in RAID 0, with each block stored on both drives of a RAID 1 mirror pair. Because it can access several groups of disks at once, RAID 10 delivers high performance.

Because RAID 10 distributes data across several mirrored pairs, it can survive the failure of one drive in each pair. Data is lost only if both drives of the same mirror pair fail. The result is good fault tolerance and reliability. Keep in mind, however, that like RAID 1, a RAID 10 array provides only half of the total raw capacity, which makes it an expensive solution, and it is also more complex to set up.
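The fault-tolerance rule can be stated precisely in a few lines: the array keeps its data as long as every mirror pair still has at least one working drive. A hypothetical sketch, assuming drives are paired in the order given:

```python
def raid10_layout(drives):
    """Pair drives into RAID 1 mirrors (in the order given); data is then
    striped across the pairs as in RAID 0."""
    if len(drives) < 4 or len(drives) % 2:
        raise ValueError("drives must form at least two mirror pairs")
    return [tuple(drives[i:i + 2]) for i in range(0, len(drives), 2)]

def survives(pairs, failed):
    """Data is intact while every mirror pair keeps at least one working drive."""
    return all(not set(pair) <= failed for pair in pairs)

pairs = raid10_layout(["ssd0", "ssd1", "ssd2", "ssd3"])
print(survives(pairs, {"ssd0", "ssd2"}))   # one drive lost in each pair: True
print(survives(pairs, {"ssd0", "ssd1"}))   # both drives of one pair lost: False
```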

RAID 10 is suitable for use with data stores that require 100 percent redundancy of mirrored disk groups, as well as the improved I/O performance of RAID 0. It is the best solution for mid-sized databases or any environment that requires greater fault tolerance than RAID 5.

⇡#Results in CrystalDiskMark

CrystalDiskMark is a popular, simple benchmark that runs on top of the file system and produces results that ordinary users can easily reproduce. Its numbers differ somewhat from those we obtained with the heavyweight, multifunctional IOMeter package, although qualitatively there are no fundamental differences. Striped RAID performance scales well in sequential operations. RAID 0 of Kingston HyperX 3K 240 GB also performs well in random reads: its advantage over single SSDs depends on queue depth, and at large queue depths RAID 0 delivers significantly higher speeds. Random writes are a somewhat different story. RAID 0 loses to a single Kingston HyperX 3K 240 GB when requests are not queued, but, as expected, increasing the queue depth restores the advantage of the two-disk configuration.

CrystalDiskMark also confirms the poor random-write performance of the high-capacity Kingston HyperX 3K 480 GB, which further highlights the benefits of RAID 0 when a large-capacity disk configuration is needed.

SSD caching capabilities in RAIDIX

The parallel architecture of the SSD cache in RAIDIX allows it to be more than just a buffer for accumulating random requests: it acts as a "smart load distributor" for the disk subsystem. Thanks to request sorting and dedicated eviction algorithms, random load peaks are smoothed out faster and with less impact on overall system performance.

The eviction algorithms use log-structured writing to replace data in the cache more efficiently. As a result, fewer writes reach the flash drives, which significantly reduces their wear.

Reduce SSD wear and tear

Thanks to the load detector and the overwrite algorithms, the total number of write hits on the SSD array in RAIDIX is 1.8. Under similar conditions, a second-level cache using the LRU algorithm gives a figure of 10.8. This means that the approach implemented in RAIDIX requires 6 times fewer rewrites of the flash drives than many traditional storage systems. Accordingly, the SSD cache in RAIDIX uses the endurance of the solid-state drives much more efficiently, extending their lifespan by roughly 6 times.
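The 6-fold figure follows directly from the ratio 10.8 / 1.8, whatever the drive and workload. The sketch below makes that explicit, assuming each quoted figure acts as a multiplier on the data the cache absorbs. The endurance rating and daily write volume are hypothetical values chosen only for illustration, and the function name is ours.

```python
RAIDIX_FACTOR = 1.8   # write hits on the SSD array in RAIDIX (from the text)
LRU_FACTOR = 10.8     # second-level LRU cache under similar conditions (from the text)

def ssd_lifetime_days(endurance_tb, cached_tb_per_day, write_factor):
    """Days until the rated endurance is used up, assuming every TB the cache
    absorbs turns into write_factor TB written to flash."""
    return endurance_tb / (cached_tb_per_day * write_factor)

# Hypothetical drive rating and workload, for illustration only.
endurance_tb, cached_tb_per_day = 3000, 0.5
raidix_days = ssd_lifetime_days(endurance_tb, cached_tb_per_day, RAIDIX_FACTOR)
lru_days = ssd_lifetime_days(endurance_tb, cached_tb_per_day, LRU_FACTOR)
print(f"RAIDIX: {raidix_days:.0f} days, LRU: {lru_days:.0f} days, "
      f"ratio {raidix_days / lru_days:.1f}")
```

Changing the hypothetical endurance or workload changes both lifetimes, but not their ratio.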

Efficiency of SSD caching under various loads

A mixed load can be thought of as a chronological sequence of states, each dominated by either sequential or random requests. The storage system has to cope with each of these states, even those that are not its preferred or most convenient ones.

We tested the SSD cache, emulating various operating situations with different types of loads. By comparing the results obtained with the values of a system without an SSD cache, you can clearly evaluate the performance gain for different types of queries.

System configuration:

- SSD cache: RAID 10, 4 SAS SSDs, 372 GB capacity
- Main storage: RAID 6i, 13 HDDs, 3072 GB capacity

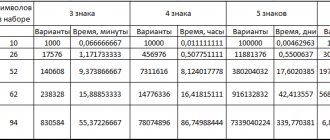

| Load pattern | Request type | With SSD caching | Without SSD caching | Performance gain |
|---|---|---|---|---|
| Random read (100% cache hit) | random read 100% | 85.5K IOps | 2.5K IOps | 34 times |
| Random write (100% cache hit) | random write 100% | 23K IOps | 500 IOps | 46 times |
| Random read (80% cache hit, 20% HDD hit) | random read 100% | 16.5K IOps | 2.5K IOps | 6.5 times |
| Random read from cache, write to HDD | random read 50% | 40K IOps | 180 IOps | 222 times |
| | sequential write 50% | 870 Mbps | 411 Mbps | 2 times |
| Random read and write (100% cache hit) | random read 50% | 30K IOps | 224 IOps | 133 times |
| | random write 50% | 19K IOps | 800 IOps | 23 times |
| Sequential large-block requests, 100% of the random load hits the SSD cache | random read 25% | 2438 IOps | 56 IOps | 43 times |
| | random write 25% | 1918 IOps | 82 IOps | 24 times |
| | sequential read 25% | 668 Mbps | 120 Mbps | 5.5 times |
| | sequential write 25% | 382 Mbps | 76.7 Mbps | 5 times |

Every real-world situation has its own load pattern, so these isolated measurements cannot give a definitive answer about how effective SSD caching will be in practice. They do, however, show where the technology is likely to be most useful.

⇡#PCMark 8 2.0, real use cases

The Futuremark PCMark 8 2.0 test suite is interesting because it is not synthetic; instead, it is based on how real applications work. It replays real traces of disk activity recorded in common desktop tasks and measures how fast they are executed. The current version simulates workloads taken from the games Battlefield 3 and World of Warcraft and from Adobe and Microsoft software: After Effects, Illustrator, InDesign, Photoshop, Excel, PowerPoint and Word. The final result is the average speed the drives achieve across the test traces.

In the PCMark 8 test, which simulates performance in real-world applications, the RAID 0 array shows approximately 20-25 percent higher performance than single flash drives. Apparently, those enthusiasts who are interested in the subject of this study should expect approximately this improvement in speed.

The PCMark 8 overall score should also be complemented by the performance the drives show in the individual test traces, each simulating a different real-life load.

Although in synthetic tests we encountered situations in which the RAID 0 array was slower than its member drives, such situations are unlikely to arise in real life. At the very least, PCMark 8 clearly shows that RAID 0 is faster in every one of the popular applications. The advantage of an array of two Kingston HyperX 3K 240 GB over a single such drive ranges from 3 to 33 percent, and its lead over the higher-capacity Kingston HyperX 3K 480 GB is even larger.

Why is this needed at all?

Well, judge for yourself: processors keep gaining cores, clock speed, cache and architectural improvements; video cards gain pixel pipelines, more and faster memory, shader units, higher GPU clocks and sometimes even additional GPUs; RAM gains higher frequencies and tighter timings.

Hard drives, meanwhile, are only growing in capacity: their spindle speed (apart from rare models such as the WD Raptor) has been stuck at around 7200 rpm for quite some time, the cache is hardly growing either, and the architecture remains almost the same.

In short, disks are stagnating in terms of performance (only the development of SSDs can change this), yet they play a significant role in the operation of the system and, in some cases, of entire applications.

If you build RAID 1 (level one), you lose a little in performance but gain a tangible guarantee for the safety of your data: it is fully duplicated, so even if one disk fails, everything remains intact on the second disk.

In general, I repeat, RAID will be useful to everyone. I would even say it is a must.

⇡#Conclusions

So, testing a RAID 0 array of solid-state drives shows that such a configuration is perfectly viable. Of course, this does not eliminate the traditional drawbacks of disk arrays, but the developers of integrated RAID controllers and drivers have done a lot of work, and many of the problems of such configurations are now a thing of the past. Creating a RAID 0 array is one of the traditional ways to increase disk subsystem performance, and it works quite well for SSDs too: combining two drives into an array really does increase both sequential speeds and the performance of small-block operations at deep request queues. During testing, we obtained truly impressive sequential read and write figures for the array, significantly exceeding the throughput of the SATA 6 Gb/s interface. At the same time, as the tests showed, the highest-capacity SSDs do not always offer the best performance. Therefore, RAID 0 configurations can also be useful when the goal is to build a large-capacity disk subsystem.

I must say that we used to be somewhat wary of SSD RAID arrays, since RAID controllers blocked the TRIM command and did not let us monitor the status of the member drives. Today, however, all this is a thing of the past, at least for the controllers built into Intel chipsets: RAID 0 now supports TRIM, and the driver gives full access to the SMART parameters of the SSDs in the array.

As for the Kingston HyperX 3K drives that participated in our testing, their 240 GB modifications proved to be quite a worthy choice for creating RAID arrays. Kingston switched them to Toshiba's newer 19nm memory, and the new hardware design allowed for slightly improved performance without causing any unpleasant effects.

Even though drives based on SandForce controllers may not seem cutting-edge, they are very well suited to RAID arrays. On the one hand, these SSDs have been thoroughly tested and are very reliable; on the other, they are attractively priced. As for performance, a RAID 0 array of two SandForce drives will undoubtedly outperform any single-drive configuration, if only because its sequential speed is not limited by the bandwidth of a single SATA 6 Gb/s interface.

When is SSD cache useful?

SSD cache is suitable for situations where the storage system receives not only a sequential load, but also a certain percentage of random requests. At the same time, the efficiency of SSD caching will be significantly higher in situations where random requests are characterized by spatial locality, that is, an area of “hot” data is formed in a certain address space.

SSD caching technology will be especially useful, for example, when working with streams in video surveillance. This pattern is dominated by sequential workloads, but random read and write requests may also occur. If you do not handle such peaks using SSD caching, the system will try to cope with them using the HDD array, therefore, performance will decrease significantly.

Figure 1. Uneven time interval with unpredictable hit rate

In practice, random requests appearing within a uniform sequential load are not at all uncommon. This can happen when several different applications run on the server at the same time: for example, one has a set priority and issues sequential requests, while others occasionally access data (sometimes repeatedly) in random order. Another source of random requests is the so-called I/O Blender Effect, in which sequential streams from many sources (for example, virtual machines) are interleaved and reach the storage as a random pattern.

If the storage system receives a high rate of random requests that rarely repeat, the efficiency of the SSD cache decreases.

Figure 2. Uniform time interval with predictable hit rate

With a large number of such requests, the space of SSD drives will quickly fill up, and system performance will tend to the speed of operation on HDD drives.

Keep in mind that the SSD cache is a rather situational tool and will not pay off in every case. In general terms, it is useful when the load has the following characteristics:

- random read or write requests have low intensity and uneven time interval;

- the number of I/O operations for reading is significantly greater than for writing;

- the amount of “hot” data will presumably be less than the size of the SSD workspace.
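A rough way to check the last two criteria against a recorded I/O trace is sketched below. It is hypothetical throughout: the trace format `(op, block)`, the definition of "hot" data as the most frequently accessed blocks that together cover 80% of accesses, and the function name are assumptions, and it does not assess the first criterion (request intensity and timing).

```python
from collections import Counter

def cache_suitability(trace, cache_blocks, hot_share=0.8):
    """Rough check of the read/write balance and hot-set size on a trace
    of (op, block) pairs.

    "Hot" data is taken to be the most frequently accessed blocks that
    together account for hot_share of all accesses (an assumption).
    """
    if not trace:
        raise ValueError("empty trace")
    reads = sum(1 for op, _ in trace if op == "read")
    counts = Counter(block for _, block in trace)
    covered = hot_blocks = 0
    for _, n in counts.most_common():      # most frequently accessed first
        if covered >= hot_share * len(trace):
            break
        covered += n
        hot_blocks += 1
    return {
        "read_fraction": reads / len(trace),
        "hot_blocks": hot_blocks,
        "hot_set_fits": hot_blocks <= cache_blocks,
    }
```

A high read fraction together with a hot set that fits into the cache suggests the load matches the profile described above; a low read fraction or an oversized hot set suggests the SSD cache will quickly fill up.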