Hello, %username%!

Hardly anyone will be surprised that games today are produced on an assembly line, and that a gaming PC is associated with a heap of expensive hardware. Nobody wants to throw money away during a crisis, but you still want to play! Today we will find out which games depend more on a powerful processor and which, on the contrary, lean on the video card. Along the way, we’ll sketch a portrait of the optimal gaming PC at the turn of 2016-2017.

We live in amazing times, comrades! On the one hand, computers have not developed at an explosive pace for a long time: processor evolution in recent years has been an outright embarrassment, and many video cards simply get new nameplates year after year until a new manufacturing process arrives. On the other hand, as soon as you start talking about optimizing software or games and get into specifics, people immediately say “you, my friend, stop nitpicking and finally buy yourself some normal hardware.” And if you don’t have money for normal hardware, buy a console, where you don’t have to choose components.

And they are right - there is no need to pick components for a console. But depending on the chosen system, the selection of games (exclusives, as they are called now) will differ. So what if you approach a PC configuration the same way and choose hardware based on the processor or graphics requirements of a particular game? Will games on the same engine run equally well on a fixed configuration? Today we will try to answer these questions.

“It’s too late to drink Borjomi” - which computers no longer count as gaming machines

We'll have to indulge in some “how time flies” again, so let's agree on a few conventions. For some, a PC capable of running Flash games is also a kind of gaming PC, but by a gaming computer we mean a machine with:

- a Full HD monitor

- enough performance to deliver over 30 fps in single player and over 50 fps in multiplayer

- enough power for high or maximum detail settings in modern games

By modern games we mean titles released in 2013 or later. The feeling that 2007-2010 was only recently is purely our illusion. It’s scary to think that children born in 2010 have already grown up and become a new caste of gamers. So it is better to leave nostalgia and “games were better back then” excuses out of discussions about modern gaming machines.
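The working definition above boils down to a few numeric thresholds, which can be written down as a simple check. This is a minimal sketch: the function and parameter names are hypothetical, only the resolution and frame-rate thresholds come from the list, and “high or maximum detail” is assumed to be the setting the benchmark was run at rather than something the code verifies.

```python
# Hypothetical helper: checks one benchmark result against the article's
# working definition of a gaming PC. Resolution and fps thresholds come from
# the text; "high or maximum detail" is assumed, not checked.

FULL_HD = (1920, 1080)
MIN_FPS = {"single": 30, "multi": 50}  # "over 30" / "over 50" fps


def meets_gaming_bar(resolution: tuple[int, int], mode: str, avg_fps: float) -> bool:
    """Return True if the result clears the article's bar for its game mode."""
    if mode not in MIN_FPS:
        raise ValueError(f"mode must be one of {sorted(MIN_FPS)}")
    width, height = resolution
    if width < FULL_HD[0] or height < FULL_HD[1]:
        return False  # below Full HD does not qualify
    return avg_fps > MIN_FPS[mode]  # strictly "over" the threshold


print(meets_gaming_bar((1920, 1080), "single", 42))
print(meets_gaming_bar((1920, 1080), "multi", 45))
```

The same 45 fps that is comfortable in a single-player campaign falls short of the bar in multiplayer, which is why the two modes get separate thresholds.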

Forever young-oh-oh, forever drunk!

These premises define a threshold of minimally acceptable gaming hardware. For processors, that means a dual-core chip with Hyper-Threading (no more than 4 years old) or an entry-level quad-core; for graphics, a three- to four-year-old video card from above the mid-range. Less powerful hardware has moved out of the gaming category into “it’ll do for me, I’m in it for the gameplay, not the graphics!” territory, or into free-to-play games where an appealing look matters more than visual technology.

Console emulators - need more CPU

To get good performance in console emulators on a PC, you need a fast CPU, because the latest consoles are most often emulated in a slow interpretation mode. This is how, for example, the only viable Sony PlayStation 3 emulator works.

PlayStation 3 emulator (RPCS3) on Intel Core i7-4790K, NVIDIA GeForce GTX 970, 16 GB RAM Kingston DDR3

In emulators of somewhat older consoles, Direct3D plug-ins make it possible to render the graphics on the video card, and the GPU requirements remain laughably low by modern standards: to process images from early-2000s consoles (and even improve them with anti-aliasing), an old mid-range video card such as the AMD Radeon HD 7850 is enough.

Shooters

We consider games released in 2013 and later because, from the vantage point of December 2016, this period looks like the golden mean for a novice gamer: the games run well on modern hardware but do not yet look like artifacts of the distant past. Even in those blissful pre-crisis times, though, there were titles that could squeeze every last drop out of computer hardware.

Metro: Last Light

The younger sibling of Metro 2033, made by former developers of the STALKER games, a post-apocalypse simulator set in our latitudes, and simply a very resource-hungry game. Its proprietary 4A engine grew out of a completely redesigned X-Ray engine from “Stalker”. Tessellation, lots of destructible objects and high detail made the game truly demanding on the computers of 2013. It is also one of the most merciless games toward the CPU: Metro will “eat” as many cores and gigahertz as you give it.

Metro: Last Light (2013)

Publisher Deep Silver plans to release the next game in the series “after 2020,” but we can already assume that the developers’ family values will be preserved and Metro will remain an extremely tough discipline for processors. By today’s standards, comfortable play at maximum detail requires at least a high-clocked Intel Core i5 and a GeForce GTX 680/770 or Radeon R9 280X/380/380X video card. Manageable, but hardly a compliment.

General principle:

Both the processor and the video card are equally important.

Battlefield 4/Battlefield 1

The early Battlefield games did not use the Frostbite Engine, yet today this engine, which powers nearly all of Electronic Arts’ games, is associated above all with DICE’s shooter.

When the sensational Battlefield 3 came out in 2011, a playable benchmark with good gameplay, the idea took firm root among players that “Battlefield is a power-hungry game for top-end computers.” But since then Frostbite has moved from version 2 to version 3, and hardware has taken a big step forward. So the new Battlefield about the First World War pleased everyone: at ultra settings the game delivers over 30 fps even with GeForce GTX 950/Radeon RX 460 video cards, which fall short in most modern games. Battlefield 4 is just as forgiving of the video card, and on top of that it is also “playable” on dual-core processors. With Battlefield 1, that trick works less well.

Battlefield 1 (2016)

The game does, however, consume tons of RAM: at maximum detail it needs 8 GB all by itself. So it makes sense to equip your gaming computer with HyperX DDR3/DDR4 modules, so that you never hit the limit beyond which the game starts to stutter.

General principle:

The processor is more important than the video card. In DirectX 12, even very old video accelerators provide an acceptable fps level.

What changed?

The new part of Battlefield loads the processor more than its predecessor, consumes more video memory and RAM, but is still slightly better optimized for weak GPUs.

Processor performance. Overclocking the processor, upgrading the system by replacing the processor.

Max Ursul (DigiMakc), 11.05.2016

Table of contents

- Introduction

- Theoretical part: Basic parameters of the heart of the computer - the processor; Processor performance; How to increase system performance?

- Practical part: Test bench configuration; About the processor. LGA771-775 mod; Test applications and testing methodology; Test results; Summary of results. Conclusions

Introduction

- What makes up system performance and what should you pay attention to?

- What is overclocking and what does it give you? What are its benefits and what are its risks?

- Upgrade. A simple way to increase performance.

We will consider these and many other questions in this article.

Theoretical part

Let's take as an example a system that is about 8 years old: perhaps the most popular and long-lived platform, based on the Core 2 architecture and the LGA775 socket.

Basic parameters of the heart of the computer - the processor

You can often come across a system description such as Intel C2D E5300 S775 2×2.6 GHz / FSB 800 MHz / 2 MB L2.

The main points are surely clear, but in case anyone has forgotten, here is a reminder:

- Intel Core 2 - the architecture (not to be confused with Core i, which is a different architecture);

- D - Duo, i.e. 2 cores;

- E5300 - the model;

- S775 (LGA775) - the socket in which the processor is installed (it will not fit into a different socket);

- 2×2.6 GHz - two cores at 2.6 GHz each;

- FSB 800 MHz - the processor system bus (Front Side Bus) and its frequency; over this bus the processor exchanges data with the chipset (north bridge), which in turn connects it to the RAM, the video card and other devices;

- 2 MB L2 - the second-level cache located in the processor: the fastest memory in the computer after the L1 cache, designed to store frequently used data.

It is important to note that in processors based on the architectures that followed Core 2, the memory controller and the PCI-E bus controller were moved directly into the processor.

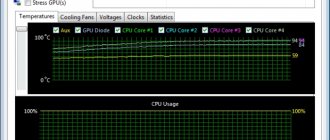

CPU performance

What makes up the performance of a particular processor? First of all, of course, its architecture: how many instructions it executes per clock cycle, which instructions it supports, the bandwidth and size of its caches, and so on. But we can’t do anything about that - the processor is what it is. We either use it, if it meets our requirements, or we don’t.

Processor frequency (MHz) is one of the main parameters on which processor performance largely depends.

Roughly speaking, it is the number of clock cycles per second; in each cycle (or over several cycles) the processor performs certain computational operations. At frequencies measured in gigahertz, that means billions of cycles per second. The frequency is set by the manufacturer at the factory and depends on two characteristics: the FSB (bus frequency) and a multiplier. For example, the processor mentioned above has an 800 MHz bus and a multiplier of 13. Someone will immediately object: “Well, 800 x 13 = 10400 MHz, not 2600!” A fair observation, but here, too, things are not as simple as they seem... or rather, they are simple! The actual processor bus frequency is not 800 MHz, but 200 MHz!

200 x 13 = 2600 MHz, it adds up! “Why is that?” you ask. The bus transfers not one but four pieces of data per clock cycle. So a 200 MHz bus carries 4 times as much data, and the manufacturer conventionally calls it 800 MHz. I agree that this is misleading, but such is the nature of marketing.

But processor frequency is not the most important thing. For example, with a 333 MHz bus (1333 effective, 333 x 4) and a multiplier of 8, the processor runs at 2.66 GHz, which is practically the same as 2.6 GHz (200 x 13), but the speed of data exchange with other devices increases by about 66%. Quite decent, right? This is especially important for exchanging data with RAM (more on this below).
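The clock arithmetic from the last three paragraphs can be restated in a few lines. This is a sketch that only encodes the relationships described in the text (core clock = real bus clock × multiplier, advertised FSB = real bus clock × 4); the function names are illustrative.

```python
# Core 2-era clock arithmetic as described above:
#   core clock     = real bus clock x multiplier
#   advertised FSB = real bus clock x 4 (four transfers per bus clock)

TRANSFERS_PER_CLOCK = 4


def core_clock_mhz(bus_mhz: float, multiplier: float) -> float:
    return bus_mhz * multiplier


def advertised_fsb(bus_mhz: float) -> float:
    return bus_mhz * TRANSFERS_PER_CLOCK


# E5300 from the text: 200 MHz bus, multiplier 13
print(core_clock_mhz(200, 13), advertised_fsb(200))   # 2600 800

# The 1333-bus example from the text: 333 MHz bus, multiplier 8
print(core_clock_mhz(333, 8))                          # 2664, i.e. ~2.66 GHz

# Bus speed-up of the 333 MHz bus over the 200 MHz one
print(f"+{333 / 200 - 1:.1%}")                          # +66.5%
```

The two processors end up within about 2% of each other in core clock, while the faster bus moves roughly two thirds more data per second.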

But that's not all. There is also the second-level (L2) cache. “Why the second, and what about the first?” you ask. The first-level cache matters too, but it is small, so it is usually left out of comparisons. The L2 cache, on the other hand, varies a lot: among 2-core Core 2 processors it differs by a factor of 6, from 1 MB in junior models to 6 MB in senior ones. “But 6 MB is ridiculously small!” you say. And you'll be right. But, as usual, there are pitfalls. Cache memory sits on the processor die and takes up a significant share of its area, which makes the chip expensive to manufacture and noticeably increases its power consumption. At the same time, the effect of the cache on performance is very uneven: in some tasks it is significant (for example, +30%), in others it has almost no effect at all. On average it is 5-10%.

Another of the most important parameters is the number of cores. Performance depends heavily on it. What exactly are cores, and why do you need more than one?

Roughly speaking, a dual-core chip is two single-core processors combined into one. The cores share the L2 cache, the multiplier and the bus. It is important to remember that 2 cores at 2.6 GHz do not add up to a 5.2 GHz processor. When hardware vendors or sellers write “processor 2x2.6 GHz = 5.2 GHz” or something similar, this is, to put it mildly, very wrong (a marketing trick). Why? Because even today, in the “era of multi-core processors,” many programs use multiple cores ineffectively: the program cannot load all the processor cores, so a multi-core processor brings little benefit. A striking example is the hugely popular World of Tanks, which has won millions of fans. Its developers made a profit of 6.1 million euros in 2012, yet from 2010 until recently they could not, or for some reason did not want to, build a proper game engine that would make effective use of multi-core processors. As a result, the game ran about the same on 2-core systems as on systems with 3-6 or more cores. And this is far from the only example. That is why (leaving architectural differences aside) the top “8-core” AMD FX-8350, for example, performs in many tasks like a junior 4-core Intel Core i5 or even a “budget” i3. The “modular” architecture of the FX-8350 is rather dubious, but it does have “8 cores,” and if they could be used effectively, this processor would be a serious competitor to 4-core Intel Core i5/i7 processors.
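Why “2×2.6 GHz” is not 5.2 GHz can be made quantitative with Amdahl's law, a standard model that the article itself does not name: if only a fraction p of the work can run in parallel, n cores speed it up by 1 / ((1 - p) + p / n). The sketch below is idealised and assumes no contention for the shared cache and bus, which, as discussed below, is itself a bottleneck on Core 2.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speed-up over one core when only parallel_fraction of the work can be split."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def single_core_equivalent_ghz(clock_ghz: float, parallel_fraction: float, cores: int) -> float:
    """Clock a single core would need to match n cores (idealised: no bus or cache contention)."""
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


for p in (0.0, 0.5, 0.9, 1.0):
    ghz = single_core_equivalent_ghz(2.6, p, cores=2)
    print(f"parallel share {p:.0%}: 2x2.6 GHz ~ {ghz:.2f} GHz single core")
```

Only a perfectly parallel workload (p = 1.0) reaches the advertised 5.2 GHz; a program like the World of Tanks of that era, which is close to p = 0, gets no benefit from the second core at all.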

A little about AMD FX and “modules.” A module in AMD processors based on the Bulldozer architecture (and its successors) is a kind of hybrid between a 1-core and a 2-core design, but it is marketed as “2-core.” Besides the L2 cache shared by both cores, a module has several more auxiliary and computational units shared by the two cores, whereas before Bulldozer each core had its own. This was done to increase the number of cores while saving transistor budget and minimizing power consumption. So the AMD FX-8150 has “8 cores”: 4 modules with 2 cores each, plus an 8 MB L3 (third-level) cache shared by all 4 modules. However, sharing module resources reduced per-core performance, which AMD tried to compensate for with higher clock speeds. In real applications this turned out not to be enough, so in lightly threaded applications AMD FX processors are significantly inferior to Intel processors of the same year with a similar frequency.

Why have many cores, then? Multi-core processors shine in two cases: in applications optimized for multiple cores, which they speed up, and in multitasking. Multi-threaded applications include demanding modern games, audio and video processing/conversion software, 3D modeling and rendering (turning a model into a photorealistic image), modern web browsers (which open each tab in a separate process), and others. Multitasking means several programs running at the same time; they may or may not be optimized for multithreading. Either way, working on a computer with a multi-core processor is more comfortable, since it responds faster and slows down less when several programs are running, provided the clock speed is roughly the same.

The weak spot of almost any computer is the speed of working with memory. For processors of the Core 2 architecture (2005-2009), besides the fact that the architecture itself is outdated, this is a very pressing problem and essentially the bottleneck of the system.

What exactly is this problem, and where does it come from? When the processor does some work, it fetches data from memory. The first place it looks is the cache: it is fast and everything is fine there, but it is small, so the processor then goes to RAM over the bus. And that is where the trouble starts. Or rather, there are several problems:

- The processor bus speed is insufficient.

So, for example, with an 800 MHz bus we get: 800 MHz x 64 bits (bus width) / 8 = 6.4 GB/sec. With a 333 (1333) bus the speed will be 10.6 GB/sec.

- Memory speed.

A computer often uses 2 memory channels, which is why it is better to install 2 or 4 RAM sticks. Two memory channels with DDR2 800 MHz sticks give a theoretical speed of 12.8 GB/sec. But, firstly, dual-channel mode is not used very effectively, and secondly, the memory itself is not very efficient. The efficiency coefficient is on average around 0.7 (depending on memory timings and chipset settings), which in practice gives about 8-9 GB/sec. For processors with an 800 MHz bus there is no problem here; the memory even exceeds what the bus can carry. But the 800 MHz bus is a problem in itself, because it is slow.

- In addition to memory, the processor also needs to “talk” to the video card, and the video card needs to “talk” to the memory, which again clogs the processor and memory bus.

Plus, the chipset, which links everything together, may be configured suboptimally and reduce memory speed. LGA775 boards with DDR3 memory offer significantly higher bandwidth and capacity, but the narrow processor bus and the quirks of the chipset do not go away. DDR4 memory has recently appeared, with even higher speed (and capacity), but motherboards with DDR4 support for LGA775 processors have never been produced.
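The bandwidth figures in the list above follow from one formula: transfers per second × bus width in bytes × number of channels. The sketch below assumes a 64-bit width for both the FSB and each DDR2 channel (as the text does) and applies the ~0.7 efficiency factor quoted for real memory; treating the slower of the two links as the ceiling is a deliberate simplification that ignores chipset effects and video card traffic.

```python
BUS_WIDTH_BITS = 64  # FSB and each DDR2 channel are 64 bits wide


def peak_gb_s(effective_mts: float, channels: int = 1) -> float:
    """Theoretical peak in decimal GB/s: transfers/s x 8 bytes x channels."""
    return effective_mts * BUS_WIDTH_BITS / 8 * channels / 1000


def practical_gb_s(peak: float, efficiency: float = 0.7) -> float:
    """Scale a peak figure by the ~0.7 efficiency quoted in the text."""
    return peak * efficiency


fsb_800 = peak_gb_s(800)                      # 6.4
fsb_1333 = peak_gb_s(1333)                    # ~10.66
ddr2_800_dual = peak_gb_s(800, channels=2)    # 12.8
ddr2_real = practical_gb_s(ddr2_800_dual)     # ~8.96

# Simplification: the CPU cannot fetch from RAM faster than the slower link allows.
for name, fsb in (("FSB 800", fsb_800), ("FSB 1333", fsb_1333)):
    ceiling = min(fsb, ddr2_real)
    print(f"{name}: bus {fsb:.1f} GB/s, dual DDR2-800 ~{ddr2_real:.1f} GB/s, "
          f"ceiling {ceiling:.1f} GB/s")
```

With an 800 MHz bus the bus itself is the limit, as the text says; with a 1333 MHz bus the ceiling moves to the memory, which is why faster buses go hand in hand with faster RAM.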

Given all this information, you can think about how to increase system performance. There are several options here.

How to increase system performance?

Overclocking is raising system performance above nominal by various methods other than replacing components.

Upgrade is raising system performance by replacing components with more efficient ones: modernization, renewal.

That is, if you have good components, you can change some settings so that, for example, the processor bus runs at 1200 MHz instead of 800 MHz, and the processor frequency becomes 3.9 GHz instead of 2.6 GHz, i.e. +50% performance. That is overclocking. Not bad, right? And it is entirely realistic. But every overclock is individual: a lot depends on the capabilities and parameters of the components. Stable operation often requires raising the supply voltage of the processor, the chipset and sometimes the memory, depending on how far the system is overclocked. With significant overclocking, you have to improve cooling, which means resorting to some kind of upgrade.
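The 2.6 → 3.9 GHz example is the same bus × multiplier arithmetic with only the bus clock raised (200 → 300 MHz real, i.e. 800 → 1200 effective). This small sketch computes the clock gain; equating it with a +50% performance gain assumes performance scales linearly with clock, which the test results later in the article roughly confirm for CPU-bound tasks.

```python
def overclock(bus_mhz: float, multiplier: float, new_bus_mhz: float) -> tuple[float, float, float]:
    """Return (stock core MHz, overclocked core MHz, clock gain as a fraction).

    The multiplier stays fixed; only the real bus clock is raised.
    """
    stock = bus_mhz * multiplier
    overclocked = new_bus_mhz * multiplier
    return stock, overclocked, overclocked / stock - 1


# Example from the text: FSB 800 -> 1200 (real bus 200 -> 300 MHz), multiplier 13.
stock, oc, gain = overclock(200, 13, 300)
print(f"{stock:.0f} MHz -> {oc:.0f} MHz (+{gain:.0%})")
```

Because the bus itself also speeds up by 50%, memory and chipset traffic gets faster too, which is part of why bus overclocking pays off on this platform.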

What are the problems in this case?

- The need for good components, for example, a functional motherboard with high-quality elements, in the BIOS of which you can change a variety of parameters for fine-tuning.

- The need for high-quality RAM, capable of operating beyond its nominal value.

- Due to the increase in supply voltage, a more efficient cooling system is required.

- It is necessary to check the stability of the system during and after overclocking.

- Overclocking disproportionately increases the heat output of the component it is applied to. Due to heating, the service life of the part is reduced and the risk of premature failure increases. However, as practice shows, with “careful” overclocking, the risk of device failure is extremely low.

As for the upgrade. There are several nuances that need to be taken into account:

- You need to find out whether the motherboard supports the processor you plan to install. For a specific motherboard model, this information can be found on the manufacturer's website. The corresponding section is usually called “CPU Support”; it lists the supported processors, their TDP and revisions, and the BIOS versions that add support for a particular processor.

- You need to decide on the processor cooling system. So, for example, if you have a Core 2 Duo processor with a TDP of 65 W with a cooler designed to remove heat from this processor, then when installing a Core 2 Quad Q9550 processor with a TDP of 95 W, you may need to replace the cooler with a more efficient one so that the processor does not overheat.

- You need to check RAM compatibility. For example, a processor with a 1333 MHz bus requires DDR2 memory rated at least 667 MHz. There is no such problem with DDR3 memory.
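The three checks above can be sketched as a small pre-upgrade checklist. Everything here is illustrative: the `Cpu` type, the function names and the support list are hypothetical, only DDR2 is modelled (the text says DDR3 has no such issue), and the memory rule generalises the article's single example (FSB 1333 → at least DDR2-667) as “DDR2 rated at no less than half the advertised FSB figure,” which is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Cpu:
    model: str
    tdp_w: int
    fsb_mts: int  # advertised FSB, e.g. 800 or 1333


def min_ddr2_rating(fsb_mts: int) -> int:
    # Assumption: generalises the text's single example (FSB 1333 -> DDR2-667)
    # as DDR2 rated at no less than half the advertised FSB figure.
    return math.ceil(fsb_mts / 2)


def upgrade_problems(cpu: Cpu, supported_models: set[str],
                     cooler_rating_w: int, ddr2_rating: int) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    problems = []
    if cpu.model not in supported_models:
        problems.append("not on the board's CPU Support list (check the BIOS version)")
    if cpu.tdp_w > cooler_rating_w:
        problems.append(f"cooler rated {cooler_rating_w} W is below the {cpu.tdp_w} W TDP")
    needed = min_ddr2_rating(cpu.fsb_mts)
    if ddr2_rating < needed:
        problems.append(f"DDR2-{ddr2_rating} is below the DDR2-{needed} minimum")
    return problems


# Scenario from the text: a 65 W cooler, moving to a 95 W Core 2 Quad Q9550.
board_support = {"E5300", "Q9550"}  # hypothetical CPU Support list
q9550 = Cpu("Q9550", tdp_w=95, fsb_mts=1333)
print(upgrade_problems(q9550, board_support, cooler_rating_w=65, ddr2_rating=800))
```

In this scenario only the cooler is flagged, matching the Q9550 example above; with DDR2-533 installed, the memory check would fail as well.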

You can discuss which processor to choose and how to overclock it in the conference.

Let's look at what overclocking a dual-core processor can give. To do this, we compare the Core 2 Duo E4300 1.8 GHz with the Core 2 Duo E6850 3 GHz. If you raise the E4300's bus clock to 333 MHz (1333 effective), it becomes roughly equal to a stock E6850.
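Why the overclocked E4300 lands on the E6850's clock can be checked with the same arithmetic: derive the E4300's multiplier from its stock figures (1.8 GHz on an 800, i.e. 200 × 4, bus) and apply it to the 1333 bus. The sketch assumes the usual fact that “FSB 1333” is really 1333.3 MT/s, i.e. a 333.3 MHz real bus clock.

```python
# Derive the E4300's multiplier from its stock figures, then raise the bus.
E4300_MHZ, E4300_BUS = 1800, 200     # 1.8 GHz on an 800 (200 x 4) FSB
E6850_MHZ = 3000                     # 3 GHz stock
BUS_1333 = 1000 / 3                  # "1333" is really 1333.3 MT/s, i.e. a ~333.3 MHz bus

multiplier = E4300_MHZ / E4300_BUS   # 9.0
overclocked = BUS_1333 * multiplier

print(f"multiplier {multiplier:.0f}: E4300 at FSB 1333 = {overclocked:.0f} MHz, "
      f"E6850 stock = {E6850_MHZ} MHz")
```

With the same clock and the same bus, the remaining difference between the two chips is mainly the L2 cache size, which the text estimates at 5-10% on average.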


As you can see, performance has increased significantly, in both single-threaded and multi-threaded applications.

What happens if you replace the processor with a 4-core one running at 3 GHz, that is, do a small upgrade without overclocking?


As you can see, in single-threaded applications performance changed only slightly, but in multi-threaded applications it almost doubled. So overclocking a processor increases its performance almost linearly, while replacing it with a 4-core processor of the same frequency doubles performance in well-parallelized tasks. But you can also overclock a 4-core processor! Here, however, the demands on component quality rise significantly. The 4-core Core 2 Quad Yorkfield generation (second generation, 45 nm) overclocks to 3.8-4 GHz on average, which adds about 30% performance. The Core 2 Quad Kentsfield (first generation, 65 nm) overclocks to about 3.4 GHz, but some early-revision chips did not get past 2.8 GHz.

Here, for example, is an upgrade combined with overclocking: a 2-core E2160 1.8 GHz overclocked to 3.4 GHz versus a 4-core Q9550 (Xeon X3363) 2.83 GHz overclocked to 3.82 GHz.


Result from changing the CPU and overclocking E2160 3.4 GHz => X3363 3.82 GHz:

- ICE STORM: 52219 => 125504 (+140.3%)

- CLOUD GATE: 7246 => 13735 (+89.5%)

- FIRE STRIKE: 3834 => 4344 (+13.3%)

* The Fire Strike test is very demanding on the video card because of its very high graphics quality, so the performance gain is small: the video card became the weak link in this test.
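The percentage gains in the results above are simply (after / before - 1) × 100. This short sketch recomputes them from the quoted 3DMark scores; only the scores come from the text.

```python
# Recompute the percentage changes from the 3DMark scores quoted above.
scores = {
    "Ice Storm": (52219, 125504),
    "Cloud Gate": (7246, 13735),
    "Fire Strike": (3834, 4344),
}


def pct_change(before: float, after: float) -> float:
    """Relative change in percent."""
    return (after / before - 1) * 100


for name, (before, after) in scores.items():
    print(f"{name}: {before} -> {after} ({pct_change(before, after):+.1f}%)")
```

Rounded to one decimal, Cloud Gate comes out at +89.6% rather than the +89.5% listed, presumably because the figure was truncated; the other two match. The spread itself tells the story: the CPU-bound Ice Storm gains the most, and the GPU-bound Fire Strike the least.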


Layout: Dmitry Lyukshin (Tester)

Third person action adventure

Batman: Arkham Origins/Arkham Knight

The noir action game with the “dark knight” pleased gamers with its plot, gameplay, and graphics.

In Arkham Origins, the optimization turned out acceptable: even on budget Intel Pentium processors, the frame rate stayed good enough for the single-player campaign. True, the game was only “playable” on the then-current Radeon HD 7770/GeForce GTX 650 and higher; former AMD flagships of the HD 6000 series, for example, disgraced themselves with too low fps at Full HD. But the next game, Batman: Arkham Knight, will go down in history as an example of a poor console-to-PC port. So poor that the game even had to be pulled from sale to fix critical flaws. Until then, the idea that the middle-aged Unreal Engine 3.5 could bring powerful computers to their knees seemed like a joke.

Batman: Arkham Knight (2015)

As a result, the fixed version of the game was released on PC months after its debut on consoles. It became more stable but stayed just as resource-hungry: with high graphics detail in Full HD, the game consumed over 3 GB of video memory and required a Radeon HD 7970 or GeForce GTX 780 class video card. The processor requirements, meanwhile, remained moderate: even Intel dual-cores were enough to keep the frame rate from dropping below 40 fps.

General principle:

The video card is more important than the processor. High detail, even in Full HD, is only achieved by truly powerful video accelerators.

What changed?

In the new game, at release, it was no longer new budget video cards but slightly outdated flagships that delivered the minimum acceptable fps.

Tomb Raider/Rise of the Tomb Raider

This reboot of the Lara Croft series looks unambitious from a technological point of view today: at maximum detail, Tomb Raider 2013 runs even on the outdated and inexpensive GeForce GTX 950M, a laptop GPU. But three years ago, in 2013, Crystal Engine at Full HD was an impossible task for all budget-class GPUs. And with AMD TressFX enabled, the technology that makes the heroine’s hair soft and silky, almost all video cards except the flagships were “blown away.” Video memory consumption at 1080p topped out at 2 GB, an impressive figure for the time. The game didn’t spare processors either: in its first versions, a comfortable frame rate was only possible on quad-core processors. Unheard-of impudence for 2013! Later patches made the game less demanding on the CPU, and exploring tombs on a Core i3 or a higher-end Pentium became feasible.

Tomb Raider (2013)

The Foundation Engine in Rise of the Tomb Raider tormented the video cards of 2016 in much the same way. Video memory consumption in DirectX 11 jumped past 3 GB, and only the newest video cards from slightly above the mid-range delivered frame rates suitable for Full HD gaming. The DirectX 12 mode, in turn, “delighted” gamers with memory leaks, which made the game consume all 6 GB of VRAM on the flagship video cards of 2016!

Moreover, DX12 brought no relief to processors either: in DirectX 11, budget AMD quad-cores and dual-core Core i3 chips felt comfortable, but with the new API enabled, the inexpensive models dropped out of the race. Only Core i3 chips, rescued by Hyper-Threading (“Hyper-Threading is our everything”), and much more expensive processors from the “blue” and “red” camps delivered playable fps.

General principle:

The video card is more important than the processor.

There is no such thing as too much video memory.

What changed?

Instead of “any four cores,” the game began to require high-performance CPUs, or at least a high-clocked Core i3. DirectX 12 slightly improved picture quality and sharply worsened the performance of both processors and video cards in RoTR.

Auto racing

Need for Speed: Rivals / Need for Speed (2015)

Trite?

And how! Still, the NFS assembly line is a convenient way to track trends in racing games. NFS Rivals became the first game in the series on Frostbite 3, the “Battlefield” engine, though, to put it mildly, in its own interpretation. The developers capped the frame rate at 30 fps, either to make the picture more “cinematic” or to wean PC players from fussing over graphics settings. No anti-aliasing, no smooth image, no SLI support: the Ghost Games studio was clearly uncomfortable working with the new engine.

As a result, the graphically “ultra-modern” Need for Speed ran comfortably on mid-range video cards and... that’s it, you hit the 30 fps cap. But enthusiasts found a way around the limit, and with it removed, sub-flagship video cards were the ones that reached the 60 fps ceiling. Quite nice, especially since the game’s video memory consumption stays close to the usual 1 GB.

Need for Speed (2015)

The processor requirements were a different story (one might wonder why a racing game needs a powerful CPU at all): with the frame rate unlocked to 60 fps, dual-core chips could no longer handle the load, and comfortable play required at least a high-clocked AMD FX-6100 or Intel Core i3. The situation was roughly the same in Battlefield 4, released on the same engine. The difference is that for a fast-paced shooter, a borderline 30 fps is too low.
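The jump in CPU requirements after unlocking the frame rate follows from the frame-time budget: at 60 fps the processor has half as long to prepare each frame as at 30 fps. The sketch below assumes, as a simplification, that the CPU-side work per frame stays constant; the 25 ms per-frame cost in the example is a hypothetical figure chosen for illustration, not a measurement from the game.

```python
from typing import Optional


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at a given frame rate."""
    return 1000.0 / fps


def cpu_limited_fps(cpu_ms_per_frame: float, cap_fps: Optional[float] = None) -> float:
    """Frame rate if the CPU is the only limit, optionally clipped by a frame cap."""
    fps = 1000.0 / cpu_ms_per_frame
    return fps if cap_fps is None else min(fps, cap_fps)


for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame")

# Hypothetical CPU needing 25 ms per frame: fine under the 30 fps cap,
# but well short of 60 fps once the cap is removed.
print(cpu_limited_fps(25, cap_fps=30), cpu_limited_fps(25))
```

Such a processor looks perfectly adequate while the 30 fps cap is in place and only reveals its limit (40 fps here) once the cap is lifted, which matches what happened to dual-core chips in Rivals.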

The belated PC port of Need for Speed (2015) finally settled the question “why use Frostbite in a racing game?” Because it’s beautiful, very beautiful! With the engine thoroughly reworked, the game began to eat as much as 3 GB of video memory, but its essence didn’t change: comfortable play needs only a mid-range video card (GeForce GTX 960/Radeon R9 280X) plus either a Core i3 or a high-clocked AMD quad-core. These processor requirements, by the way, made the new NFS “unplayable” on a huge number of laptops. But nothing can be done: Frostbite is Frostbite, even outside Battlefield.

General principle:

The processor is more important than the video card. Differences between graphics detail levels are barely visible to the eye.

What changed?

Memory consumption has increased, but the status quo (“I use a little less memory than mainstream video cards have”) has not changed. The processor requirements have risen slightly with the engine improvements and the unlocked frame rate.

Project CARS

Of course, it would be interesting to compare the games of Codemasters, a studio famous for its optimization (GRID 2/DiRT Rally), but the differences between them come down to nuances: the same engine, with slightly more forgiving system requirements for the 2013 game. Then again, it depends on how you look at it: in 2013, playing without frame rate drops required a video card at the Radeon HD 7850 level, which was mid-range at the time. Among processors, the game clearly favored quad-cores, though it kept acceptable fps on dual-core CPUs. In 2016, similar system requirements mean that DiRT flies even on budget gaming computers.

Project CARS (2015)

With Project CARS the situation is different: the game, for whose development “the whole world” raised funds, has become one of the most beautiful and demanding racing simulators of our time. Yet its engine grew out of older Need for Speed games, such as Shift Unleashed from 2011!

The graphics settings are overwhelming, and there is no way to simply pick a “high” or “maximum” preset. With a packed field of rivals, bad weather on the track and extremely high-detail graphics, Project CARS looks like a documentary about motor racing, and such beauty requires sacrifices. Lots of expensive GPU sacrifices: something like a GeForce GTX 770 or a Radeon R9 280X. In other words, Project CARS requires graphics cards slightly above average at the time of the game’s release. It doesn’t stand on ceremony with processors either: a Core i3 is the minimum “entrance ticket,” and high-clocked quad-cores are preferred.

General principle:

The video card is more important than the processor for high performance.

Open world sandbox games

A grandiose label, but you know which games we mean: the ones whose developers boast about simulating the lives of random passers-by, where a seamless world is dotted with side quests alongside a developed storyline. Gigantic scenery and, let’s say, large-scale drama.

Assassin's Creed IV/Assassin's Creed Syndicate

When Far Cry 3 was already obsolete and Watch Dogs had yet to arrive, Assassin's Creed was one of Ubisoft's premier open-world games.

By 2013, the protagonists had become rather strange (Native Americans and pirates as assassins, although they had nothing to do with the Ismailis), but that’s normal: the heroes of the “Fast and Furious” movies also moved from street racing to “effective entrepreneurship.” Even back then, the Anvil engine resembled the Call of Duty skeleton, constantly overgrown with additions, but that did not stop the game from being one of the most hardware-hungry titles of 2013. A Radeon HD 7970 or GeForce GTX 770 as the entry ticket for playing in Full HD at high quality is so-so optimization, I must say. Among processors, the game preferred high-clocked quad-cores. At the same time, more than four threads somehow dragged the CPU results down, so the fastest chips in Assassin's Creed IV turned out to be Intel Core i5s. Everything in the computer except the amount of video memory had to be “expensive and rich” for the game to run properly.

Assassin's Creed Syndicate (2015)

However, such fun did not last long - in Assassin's Creed Syndicate, the developers had to seriously engage in optimization, because the previous AC: Unity had just become a meme with prohibitive system requirements and a large number of bugs.

As a result, the game already consumed 3 GB of video memory and required, embarrassing as it is to say, a GeForce GTX 960 as the minimum acceptable option for “very high” settings at 1080p. But it became much more forgiving of processors: even cheap Intel Pentiums coped with the load perfectly.

General principle:

The video card is more important than the processor. If you want to play well, buy above-average video cards.

What changed?

The developers have optimized the game for more efficient use of the GPU and thus relieved the processor.

Grand Theft Auto V

To understand why GTA 5, despite all its pomp, deserves credit for its optimization, just recall the previous port from old consoles: the notorious PC debut of the fourth game in the series in 2008. It was hard to find a game with more unreasonable processor requirements: you needed an Intel Core 2 Quad (as expensive back then as a Core i7 is today) just for the mediocre console port to run at a more or less acceptable fps. Millions of gamers around the world cursed Rockstar for such optimization.

Grand Theft Auto 5 (2015)

GTA 5 came out on PC almost two years after its debut on old-generation consoles, which means the developers had plenty of time for a quality port. By then, the Rockstar Advanced Game Engine was “polished” for current hardware, so the game, although it consumed an indecent amount of video memory (over 2 GB in Full HD), ran without problems even on budget video cards such as the GeForce GTX 750. GTA 5 also had no performance problems on dual-core processors. An outrageously easy life by PC industry standards, isn't it?

General principle:

Low requirements for components, high requirements for video memory. At the same time, the video card is more important than the processor: the lesson of GTA IV's CPU gluttony was not lost on the developers.

Graphics card and professional applications

In office editors and accounting programs, you can get by with the graphics built into the processor. Graphics tools (both 2D and 3D) may support GPGPU acceleration (general-purpose computing on the graphics processor). For example, this feature is available in Adobe Photoshop, Media Encoder and Premiere, AutoCAD, Sony Vegas and other professional applications.

Difference in encoding speed with and without GPU

Check your tool developer's website to see whether it uses CUDA, OpenCL or other GPU-based hardware acceleration (GPGPU). If it does, the video card is as important as the processor, because it speeds up the software. This is especially noticeable in heavy projects that even a powerful Core i7 or Ryzen 7 struggles with.

If your work tools cannot use graphics for calculations, then a powerful processor is much more important. A conventional Core i7, with a built-in or the cheapest graphics card, is preferable to a combination of an i5 and a flagship gaming GPU.

Strategy games

The vast majority of strategy games either torture the processor while making no special demands on the video card, or are so badly optimized that even top-end hardware cannot save the situation. Examples of the latter include the Total War series (Rome II, for instance, hammered the hardware without any particular graphical justification) and the recent XCOM 2. Give them powerful quad-core processors and mid-range video cards (at least a GTX 960) for comfortable Full HD gaming. The developers assure players that this is “a feature, not a bug”, and the public is indignant. Such optimization is more the exception than the rule, though, and we will stick to the rule with other popular titles.

Civilization: Beyond Earth / Civilization VI

The fifth installment of the turn-based “rewrite history your own way” simulator was released back in 2010 and at the time was unusually demanding by strategy-game standards: it happily consumed over 512 MB of video memory and, at Full HD, wanted either a new mid-range card (GeForce GTS 450) or an old flagship (GeForce GTX 285). Processor performance was a separate pain for fans of the series: without a quad-core CPU (or a good dual-core with four threads), Civilization lagged heavily between turns in the later stages of the game. Today you might shrug at this, but in 2010 even the popular high-frequency Core 2 Duo and AMD Phenom II X2 struggled with the game.

Civilization VI (2016)

But Beyond Earth, released in 2014 and essentially the fifth Civilization in a new setting, turned out to be surprisingly light on modern hardware. Even a cheap Radeon HD 7770 easily stayed above 30 fps, and a turn-based game needs no more. Budget dual-core Intel Pentiums based on the Haswell architecture easily handled a game whose predecessor had once been so power-hungry on desktops.

In the case of Civilization VI, the generational change looks strange: the graphics have clearly not improved, yet the system requirements have grown with the times. No one minds needing a “mid-range” GeForce GTX 950 for Full HD, but why the processor load has doubled since Civilization V is a mystery. In any case, you can no longer play comfortably on dual-core Intel Pentiums; you need at least a Core i3-class processor. The new game's appetite for video memory is also steep: up to 4 GB in Full HD, and that is with a cartoon art style!

The evolution of graphics in Civilization V and VI

Support for DirectX 12 in Civilization VI, however, was not a mockery of the hardware, as in Rise of the Tomb Raider, but a genuinely useful way to reduce the load on the processor: up to a 10-15% fps increase on DirectX 12-compatible configurations.

General principle:

The processor is more important than the video card, although the GPU requires a lot of video memory.

What changed?

With each new installment of Civilization, the load on the CPU grows faster than the load on the graphics card, but DirectX 12 support can significantly “free up” the processor.

StarCraft II: Legacy of the Void

Truly popular real-time strategy games stay fresh through DLC and remasters regardless of when the original came out. That is how “Cossacks 3” came about, and StarCraft II, which dates back (scary to say) to 2010, has followed a path similar to Civilization: from a demanding new release to an undemanding game that runs in Full HD even on integrated graphics. So the pleasure of playing one of the best RTS games is inexpensive: an already middle-aged GeForce GTS 450 or Radeon HD 7750 is enough to run it without compromises at 1080p.

StarCraft II: Legacy of the Void (2015)

As for processors, the situation is amusing by today's standards: the number of cores matters less than the performance and frequency of each one. Overall, the Core i3 pulls ahead of eight-core AMD chips and nearly matches Intel's higher-end chips in frame rate.

General principle:

The processor is more important than the video card, the load on the GPU is very low.

What changed?

Nothing! The game still lives in the past and loves fast dual-core processors, without properly loading modern hardware.

What is a processor?

The central processing unit (CPU), also called a "processor", executes and controls the instructions of a computer program, performing input/output (I/O) operations, basic arithmetic and logic. An integral part of any computer, the processor receives, routes and processes computer data. Because it is usually the most important component, it is often referred to as the "brains" or "heart" of a desktop or laptop computer, depending on which part of the body you consider most important. And when it comes to gaming, it's a pretty important component of a gaming system.

Historically, processors had only one core, which worked on one task at a time. Modern processors have from 2 to 28 cores, each of which can work on its own task. A multi-core processor is thus a single chip that contains two or more processor cores.

Processors with more cores can do more work in parallel than those with fewer, provided the software can spread its work across them. Dual-core (or 2-core) processors are common, but processors with 4 cores, also called quad-core processors (such as 8th generation Intel® Core™ processors), are becoming increasingly popular.
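Why extra cores do not automatically mean extra frames can be illustrated with Amdahl's law, a standard model this article does not itself cite: if only a fraction p of the work can run in parallel, n cores speed it up by at most 1 / ((1 - p) + p / n). The minimal sketch below assumes a hypothetical game whose per-frame work is 30% parallel and compares a hypothetical fast dual-core chip (relative per-core speed 1.4) with a slower eight-core chip (per-core speed 1.0). The numbers are illustrative assumptions, not measurements, but they mirror the StarCraft II case described earlier, where a Core i3 pulls ahead of eight-core AMD chips.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over a single core by Amdahl's law: 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


def relative_throughput(per_core_speed: float, parallel_fraction: float, cores: int) -> float:
    """Per-core speed multiplied by how much the workload benefits from extra cores."""
    return per_core_speed * amdahl_speedup(parallel_fraction, cores)


# Hypothetical workload: only 30% of the per-frame work runs in parallel.
P = 0.3

# Hypothetical chips: a fast dual-core versus a slower eight-core.
fast_dual_core = relative_throughput(per_core_speed=1.4, parallel_fraction=P, cores=2)
slow_eight_core = relative_throughput(per_core_speed=1.0, parallel_fraction=P, cores=8)

print(f"fast 2-core: {fast_dual_core:.2f}, slow 8-core: {slow_eight_core:.2f}")
```

With a mostly serial workload, per-core speed dominates; raise P toward 1.0 and the eight-core chip takes the lead.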

MMORPG, MOBA and free-to-play games

Games aimed at mass appeal remain the most hardware-friendly even in 2020. Dota 2 runs without problems on the cheapest video card of recent years (Radeon HD 7750) and practically any dual-core processor; World of Tanks is content with below-average video cards (GeForce GTX 750 Ti) and processors slightly better than a budget Intel Pentium. The online shooter Overwatch behaves similarly, so even the most budget configuration is enough for massive online and free-to-play games today.

In mass online games, system requirements fade into the background: the game must launch and run for as wide a paying audience as possible.

What about the rest of the components?

The processor and video card are not the only components in a computer, but they are the “foundation” of a gaming PC.

When choosing a power supply, look at its wattage (there are countless calculators for this), efficiency and the current on individual rails. In general, that is a separate conversation with its own nuances. For games to “just work normally”, budget Kingston ValueRAM memory is enough; high-frequency kits squeeze out a few extra fps, and overclocking memory withstands high loads and will therefore please those who look at frame rate not from the standpoint of “it'll do for me anyway” but with the aim of “it can go even faster.”
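A minimal sketch of what such power supply calculators do, under stated assumptions: the per-component peak wattages below are hypothetical placeholders, and the 30% headroom factor is an assumed margin, not a standard. It only estimates total wattage; efficiency and per-rail current still have to be checked on the unit itself.

```python
import math

# Hypothetical peak power draw per component, in watts.
# Replace with figures from your own components' spec sheets.
COMPONENTS_W = {
    "cpu": 95,
    "gpu": 150,
    "motherboard_and_ram": 50,
    "drives_and_fans": 25,
}

HEADROOM = 1.3  # assumed 30% margin for load spikes and component ageing


def recommended_psu_watts(components: dict[str, int], headroom: float = HEADROOM, step: int = 50) -> int:
    """Sum peak draw, apply headroom, and round up to the next PSU size step."""
    total = sum(components.values())
    return math.ceil(total * headroom / step) * step


print(recommended_psu_watts(COMPONENTS_W))
```

Here 320 W of estimated draw plus headroom rounds up to a 450 W unit; rounding to common size steps reflects how power supplies are typically sold.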

For budget-conscious gamers, the inexpensive Kingston UV400 is suitable as a system drive. To speed up game loading, it is advisable to get a HyperX Savage.

The SSD does not directly affect the number of frames per second - it affects the speed at which levels load. The larger the game world, the more noticeable the difference. Therefore, even an inexpensive HyperX Fury will help you arrive on the battlefield faster in online games or spend less time looking at slideshows accompanied by music while the computer brings the game into combat mode.

The faster the drive, the more noticeable the difference, even if a few seconds seem trivial “on paper”.

What is the "main" difference between CPU and GPU

While the CPU uses a relatively small number of cores optimized for sequential processing, the GPU is designed for massively parallel work: it has hundreds to thousands of smaller cores that process thousands of threads simultaneously. Some processors use multithreading technology (specifically Intel Hyper-Threading), which lets one physical core operate as two separate virtual (or “logical”) cores, or threads. The idea is that the two threads share the core's execution resources, keeping it busy when one of them would otherwise stall, which improves performance.

We learned a lot today

You see a new game from Electronic Arts - expect a Frostbite engine inside and high processor requirements with a modest appetite for a video card.

If you see a game about “stalkers”, prepare a flagship graphics card and CPU, or put up with reduced graphics detail. If you want to be Batman, prepare a powerful video card; the adventures of the beautiful Lara Croft also come with a voracious appetite for video memory. If you love Battlefield, you will love Need for Speed too (both run on Frostbite, so their hardware appetites are similar), but be prepared for the fact that truly cool graphics in racing games require a video card no less cool than in shooters.

Older Ubisoft sandboxes are voracious; in the newer ones, you can already save on the processor.

GTA has long ceased to be a “crooked port from consoles” - an average computer with a graphics accelerator with three or four gigabytes of video memory is enough for it. Strategies on a PC are an unpredictable thing: some of them are designed by incompetent studios, so the games “slow down” on any components, some are remakes of old games that do not require powerful hardware.

And only massive online games (especially pay-to-win ones) will welcome PC players with open arms on hardware of almost any level. But none of these conclusions answers the main question: what matters more, the processor or the video card?

Conclusion

For simple tasks, a powerful graphics card is unnecessary. Professional software, however, requires you to dig into the specifics and determine how much a particular tool relies on GPGPU functions and graphics power. Therefore, there is no single answer to which is more important, the processor or the video card.

For a purely gaming machine, FPS depends much more on graphics power than on the number of CPU cores. So if you have to economize on something, it is better to buy a more affordable processor: that is the more rational use of the budget. But don't go to extremes: although the video card matters more, you won't get far with a cheap dual-core CPU either.
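This trade-off can be sketched with a simplified bottleneck model, which is an assumption for illustration rather than how any particular game behaves: if the CPU prepares the next frame while the GPU renders the current one, the slower of the two sets the pace, roughly fps = 1000 / max(cpu_ms, gpu_ms). All per-frame costs below are hypothetical.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when CPU and GPU work as a pipeline: the slower stage sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds: (CPU time, GPU time).
scenarios = {
    "baseline (GPU-bound)": (10.0, 25.0),
    "faster CPU": (7.0, 25.0),
    "faster GPU": (10.0, 15.0),
    "faster GPU, weak CPU": (30.0, 15.0),
}

for name, (cpu_ms, gpu_ms) in scenarios.items():
    print(f"{name}: {fps(cpu_ms, gpu_ms):.1f} fps")
```

In this toy model, a faster CPU changes nothing in a GPU-bound game (40 fps either way), a faster GPU lifts the frame rate to about 66.7 fps, and a weak CPU drags it back down to about 33.3 fps, which is why the video card deserves the larger share of the budget without the processor being starved.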