Sound is a physical phenomenon that travels through space in the form of waves and is perceived by the senses. It can be in the form of speech, song, music. The quality of the reproduced sound determines its effect on perception. It can be both positive and negative.

It is quite unpleasant to listen to a piece of music with extraneous noise, or to an online webinar whose presenter sounds as if speaking from inside a barrel. Yet for one reason or another, such things happen when editing a media file, recording a track, a master class or vocals, or creating a musical composition. Therefore, the editors of the “I Found It” website have prepared a review of the best programs for working with sound for 2020.

Why do you need audio software?

First of all, such programs are convenient for everyone involved in the music and film industry. They make it possible to process sound without creating a recording studio.

They are used to create audio content: music, film scoring, live sound, tracks and high-quality sound effects. Samples are sound fragments from which programs can build musical compositions, individual melodies and instrumental parts (sample-based music). The programs also make it possible to use virtual musical instruments, record audio files to hard drives, convert files to other formats, compress or stretch fragments, create scripts (audio files in the form of text), etc.

To translate dictated oral text into written text.

A wide selection of applications and programs is very convenient for beginners and amateurs: for practicing music and developing personal talent in the musical field, designing greeting videos, creating compositions for performances, recording vocals, trying out DJing, etc.

Such programs provide the opportunity to study music without attending clubs, courses, or master classes.

Sound processing programs

Let's look at several free programs that allow you to process sound; you can get them from the Internet and they are easy to use.

FreeAudioDub

This program can be found on the official website by entering the name FreeAudioDub in any search engine.

The program is designed for cutting audio files; it is convenient and produces high-quality results.

Using FreeAudioDub, you can cut an audio recording without re-encoding it.

FreeAudioDub is simple enough even for beginners.

Mp3DirectCut

This utility for editing music files is very easy to use, which suits users who only need to, for example, trim a song for their mobile phone.

Despite the program's small size (approximately 200 KB), it offers about 20 interface languages, which can be selected during installation.

After cutting, just save the resulting file in MP3 format.

The settings allow you to set the following parameters: audio track bitrate, mono/stereo channels.

The disadvantages include working with files only in the MP3 format.

You can also get the program on the official website by entering Mp3DirectCut in the search bar.

Movavi Video Editor

The most convenient video editor for adding voiceovers and audio recordings to a finished video.

Movavi’s fairly simple interface and settings make this editor popular; even a beginner can handle them. Moreover, this editor has all the necessary functions for processing video and audio files.

First, go to the official Movavi website, then download and install the editor on your computer.

Then, in the editor, in the “Import” tab, add (by dragging and dropping) your video.

It’s better to add voiceover using a headset, so the sound will be clearer, but if you don’t have one, you can also add voiceover using your computer speakers.

So, in the Device item, select the sound source: Speakers or Microphone.

Then, using the red marker on the timeline, choose the point in the video where dubbing should start, click the Sound Recording icon and start recording.

It is also important to adjust the sound volume. If the original video contains noise that you do not need, you can mute it by clicking the speaker icon in the Tools tab.

After you have recorded and configured the sound, save the result as a video file.

To summarize, it should be noted that a huge number of programs for working with sound allow you to process sound depending on your goal.

If we talk about professional processing, this includes noise reduction, applying sound filters and reverberation.

For the average user, you can use free utilities in which you can simply add, trim, copy and convert audio files.

How to choose

To choose a suitable sound processing program, you first need to know what kinds of programs exist and what functions, characteristics and capabilities they have.

General review

Based on consumer needs, all programs can be divided into two large types:

- Professional programs

Designed for DJs, composers, arrangers, with extended functionality. As a rule, they have paid features that require purchasing a license.

They are distinguished by their power and satisfy all the needs of a professional consumer in creating and adjusting music, high-quality processing, creating a rhythmic basis, background, and sound special effects.

Designed for installation on a computer.

- Amateur programs

Convenient for beginners who want to test their abilities and gain experience, and for home creative projects. They require no investment and offer a fairly wide range of functions. Suitable for laptops, phones and smartphones.

Functionality

The functionality of the programs includes:

- music recording;

- change in sound timbre;

- pitch;

- melody tempo;

- adding effects;

- mastering - improving the sound or adjusting to a certain standard;

- compression is a component of mastering, speaker editing, volume leveling with

- equalization - also included in mastering, changing the amplitude and frequency using an equalizer;

- mixing - combining individual tracks into a common composition, overlaying speech or other sounds on the main background;

- creating backing tracks;

- removing a voice from a finished backing track;

- superimposing your own or another voice onto a backing track;

- sound processing for webinars, Skype;

- change in real time;

- compression and stretching of the melody while maintaining the tempo;

- trimming, cutting, gluing, rearranging fragments;

- increasing the volume, enhancing the bass, adding high frequencies when the sound is dull;

- conversion to another format;

- removing noise and unnecessary pauses;

- restoring sound from cassettes, CDs and vinyl records;

- adding effects (voice changing, echo, distortion, converting from mono to stereo, etc.);

- creating a copy of a music project;

- creation of scripts (for example, musical accompaniment when opening a site);

- adding and managing plugins;

- processing in real time - for webinars, communication on Skype, adjusting settings during recording.

Types of programs

According to the processing method, programs can be divided into:

- sound editors

They make it possible to record, digitize (convert to digital form for transfer to an electronic medium), correct rough audio material, mix, apply various effects to the main background, mastering, save in the proposed formats, and transfer to different media.

It is also possible to record sound in real time on a PC hard drive and change it with the possibility of digital processing.

- Audio restorers

A type of program used to restore old or damaged audio recordings from records, cassettes and disks to working condition by removing rustles, clicks, noise and crackling.

They clean and restore audio recordings from acoustic, vinyl and other noise, bringing the sound to its original state.

- Programs - audio analyzers

They help analyze and tidy up the recorded sound, increasing its quality.

With such an assistant, you can analyze the recording and identify low-quality sounds.

They are able to measure frequency and transient characteristics and distortion. Fast conversion is possible in real time.

- Sequencers

Designed for creating music, arranging it, changing the timbre of instruments, volume, time shifts, settings.

They consist of 2 parts:

- the sequencer section - displays which instrument plays at a given moment;

- the mixer section - shows the level of each instrument and lets you adjust it and apply effects.

They are more difficult to adapt and require study of the user manual.

- Sound cards

Built-in or separate devices for computers, laptops. These are not programs, but they can be used to customize and improve the sound. Audio cards are necessary for listening to music, watching movies, communicating using a PC or laptop, playing games (3-dimensional sound).

The card is necessary for professional audio processing and playback, audio amplification. It has several connectors for connecting a microphone, headphones, speakers, synthesizers, and a musical keyboard. It is equipped with sensors, indicators, and regulators.

To expand the dynamic range, the bit depth of the card's DAC (Digital-to-Analog Converter) is important. The higher the bit depth, the less sound distortion. Cards come in 16, 24, 32 and 64 bits.
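As a rough illustration of why bit depth matters, the theoretical dynamic range of an ideal linear converter grows by about 6 dB per bit. The sketch below uses the common textbook approximation of 6.02 dB per bit plus 1.76 dB for a full-scale sine wave. The function name is made up for this example, and real sound cards fall short of these figures because of analog noise.

```python
import math

def ideal_dynamic_range_db(bits: int) -> float:
    """Theoretical signal-to-noise ratio of an ideal N-bit quantizer (full-scale sine)."""
    # 20*log10(2**bits) is about 6.02 dB per bit; 10*log10(1.5) is about 1.76 dB
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

for bits in (16, 24, 32):
    print(f"{bits}-bit: about {ideal_dynamic_range_db(bits):.0f} dB")
```

The figures are upper bounds for an ideal converter, so they are best read as a way to compare bit depths rather than as a specification of any particular card.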

Designed for laptops and personal computers.

Selection criteria

Important parameters when reproducing sound include its purity and volume.

Such programs are created for audio processing on a personal computer or laptop. They also exist as mobile versions and as applications.

Before determining the criteria for choosing a program for working with sound, you need to decide on the priority goals of what you will be working with: recording vocals, creating music, translating voices into a text file, restoring audio recordings, etc.

Sound programs and applications exist on different operating platforms.

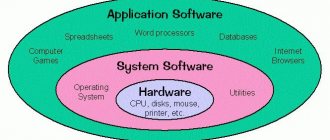

Platform selection

An operating system is a system of programs for user interaction with a device, which has a graphical interface.

The ability to install a particular program, application, etc. depends on it.

OS types:

- Windows

A common platform with a large number of programs. It has a familiar, intuitive interface that is practical and easy to learn. Sometimes it can be unstable because of viruses and software bugs.

- macOS from Apple

Installed only on Apple devices and aimed at professionals and work use. Its advantages include simple operation, a low risk of viruses and user convenience, although it takes some time to get used to the interface.

- iOS

A widely known closed operating system for mobile devices from the Apple brand. It is installed on phones and tablets from this manufacturer.

The OS is distinguished by data confidentiality, a high level of security against malware, and broad functional capabilities. Capable of supporting a huge number of programs, high-speed, intuitive for the user, with a convenient file system.

The OS is regularly updated and runs smoothly, with a long battery life. Has a large number of applications.

- Android

A popular open OS from Google with the ability to customize and download free applications. It features a variety of paid and free applications in the Google Play store, simultaneous operation of several applications, constant updates, installation of programs without an Internet connection and a multi-user mode.

Volume

Each program requires a certain amount of space, which is measured in MB: 15, 45, 185, 238, etc. It all depends on its capabilities, interface (design), newness of the version, and manufacturer.

If your device does not have enough space to install, you will be prompted to clear it of other applications. It is worth evaluating and choosing priority ones for use.

Effects

In programs for working with sound, it is possible to apply various effects.

They can be standard or extended, enhancing the effect:

- overlaying sounds in the form of birdsong, the sound of water, the sound of rain or thunderstorms;

- creating music mixes with song overlay;

- enhancing the sound of the selected instrument;

- echo effect to create an atmosphere in the forest, mountains, cathedral, to emphasize the realism of the recording;

- the use of reverberation gives volume to the sound in the form of a recording in an empty or filled room, in a gorge, in the form of a mantra, robot, etc.;

- rotation of channels for listening with headphones;

- changing the tone to a higher or lower pitch.

Each effect can be adjusted using settings to the desired indicators. Listen, achieve what you want and only then save.

The variety of effects and their number depends on the type of application selected.

Advice:

For initial acquaintance with programs, you should not choose ones with a large number of functions, so that the time for adaptation does not drag on.

After acquiring initial skills, you can install more complex software.

Filters

This option is needed to boost the desired audio frequencies and attenuate the unwanted ones as the signal passes from input to output.

There may be several of them:

- removing frequencies that are higher than a given one (low-pass);

- reducing frequencies that are below a given one (high-pass);

- decreasing or increasing frequencies from a given parameter and below;

- decreasing or increasing from a given parameter and above.

A basic frequency filter is defined by:

- the filter type (one of the above);

- resonance (cut width);

- the cutoff frequency.

Different programs may include different filters for working with sound.

Try them out to understand what is needed in a particular case.
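To make the idea of a cutoff frequency more concrete, here is a minimal sketch of the simplest possible low-pass filter (a one-pole, RC-style smoother) in plain Python. It is an illustration only, not the filter used by any particular program; the function names and the test tones of 100 Hz and 10 kHz are assumptions chosen for the example.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Attenuate content above cutoff_hz with a simple one-pole (RC-style) filter."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)   # time constant for the chosen cutoff
    alpha = dt / (rc + dt)                 # smoothing coefficient between 0 and 1
    out, prev = [], 0.0
    for x in samples:
        prev = prev + alpha * (x - prev)   # move part of the way toward the input
        out.append(prev)
    return out

def peak(samples):
    return max(abs(s) for s in samples)

rate = 48000
n = 4800  # 0.1 s of audio
low_tone = [math.sin(2 * math.pi * 100 * i / rate) for i in range(n)]
high_tone = [math.sin(2 * math.pi * 10000 * i / rate) for i in range(n)]

# Compare levels after the filter has settled (second half of each signal)
low_out = one_pole_lowpass(low_tone, 1000, rate)[n // 2:]
high_out = one_pole_lowpass(high_tone, 1000, rate)[n // 2:]
print(f"100 Hz peak: {peak(low_out):.2f}, 10 kHz peak: {peak(high_out):.2f}")
```

With a 1 kHz cutoff the 100 Hz tone passes almost unchanged while the 10 kHz tone is strongly reduced. A filter this simple has no resonance control, which is one reason real editors offer more elaborate designs.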

Interface

The user interface is the design of the program.

The clearer it is, the shorter the adaptation period. It includes:

- window design;

- language - may be English only, or may also include Russian and several other languages. It is better to choose a program available in your native language, such as Russian, since that is clearer and more convenient;

- function buttons.

The more intuitive the interface, the fewer errors there are in the process.

Plugins

Audio plugins are used to expand the capabilities of the program.

For example, the save formats on offer may not include the one you need. A plugin can solve this.

You can also use them to:

- add unusual sounds or voice effects;

- record a piece of music from a radio station on the Internet;

- create radio broadcasts on the Internet;

- play sound from any application, etc.

Plugins can be free or paid. They can also be downloaded from the Internet.

Saving format

After you finish working with the sound, it must be saved in the desired format.

Formats differ in how they store the data:

- no audio file compression;

- with compression but loss of quality;

- compression and lossless storage.

There are quite a few of them, here are some:

- MP3 is the most common, present in any players and devices, maintaining good quality and not taking up much space. Able to reduce the size of an audio file, which is important at low Internet speeds.

- FLAC - takes up a lot of space, but preserves the original high quality of the work. The codec compresses audio data losslessly, so the original data can be fully restored.

- WAV – saves a file without compressing it. Suitable for professional use, because the saved files take up a lot of space, which is unjustified for the average user. The quality is maintained. This format is played by all media players, including those on Windows.

- AIFF – from Apple, suitable for saving audio without compression on Mac OS.

There are several dozen audio formats in total. An audio program may support only a few of them. The more formats it offers, the more convenient it is for the user.

The choice of the required format will depend on the type of device for further playback of the processed sound.
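The difference in file size between uncompressed and lossy formats is easy to estimate by hand. The sketch below assumes a three-minute stereo track at 44.1 kHz / 16 bits and a constant 128 kbit/s bitrate for the compressed version; these numbers and the function names are illustrative assumptions, and real files add headers and metadata on top.

```python
def pcm_size_bytes(seconds, sample_rate=44100, channels=2, bits=16):
    """Size of raw, uncompressed PCM audio data (headers not included)."""
    return seconds * sample_rate * channels * (bits // 8)

def constant_bitrate_size_bytes(seconds, kbit_per_s=128):
    """Approximate size of a constant-bitrate compressed stream."""
    return seconds * kbit_per_s * 1000 // 8

track_seconds = 180
wav_bytes = pcm_size_bytes(track_seconds)
mp3_bytes = constant_bitrate_size_bytes(track_seconds)
print(f"uncompressed: {wav_bytes / 1e6:.1f} MB, 128 kbit/s: {mp3_bytes / 1e6:.1f} MB")
```

Under these assumptions the uncompressed track is roughly eleven times larger, which is why uncompressed formats are usually reserved for editing and archiving.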

Conversion

Conversion is changing audio from one format to another, for example, MP3 to MP4, AVI, MKV, MOV, WAV, AAC, WMA, OGG, M4A, etc.

Conversion is necessary if audio that plays on one device does not play on another, or when a list of audio works in different formats has to be brought into a single format for listening on one device.

If there is no need to process the sound, you can use an online converter to simply convert it into the required format.

Alternatively, use a downloaded converter that works without the Internet.

What is the price

Purchasing a private or collective license for sound processing and creating melodies will cost the following amount:

- from 5000 rub. for Avid Pace iLok 2 USB, a key for storing and authorizing software licenses for working with sound (Avid, Waves, Neyrinck, etc.);

- up to 26,490 rub. for Steinberg Nuendo Live, a professional audio software program.

Sound in video

Part 2. Elementary processing of the audio track

This article is partly a continuation of the previously published material “On-camera microphone, design.” In its conclusion, as you may recall, I promised to describe a microphone testing technique that can be used to evaluate homemade products and to choose a microphone from several models. Microphone testing relies on digital audio processing tools, so processing has to come first. Testing will probably be the subject of the next article.

Before moving on to the topic of the article, I will express my own opinion about the role of sound and of microphone quality in video shooting.

When using cameras to record video, you can get by with the built-in microphone. It provides the minimum acceptable quality, sufficient for everyday shooting. The main disadvantages of the built-in microphone are poor sound insulation from the body (which leads to the recording of mechanical noise from the camera mechanics and the operator’s hands) and the lack of a purpose-designed acoustic input channel (which leads to an unpredictable dependence of sensitivity on direction). The advantages are reliability (it cannot be forgotten and is difficult to break, and there is no need to worry about connectors and power supplies) and a control interface built into the camera (which saves time and effort when preparing for shooting).

The next level of quality is inexpensive plug-in passive microphones without directivity and gain control (they do not have to be mounted on the camera, these can also be lavalier versions). Their main advantage over built-in ones is acoustic isolation from the camera body. If performed well, such a microphone will not record stray sounds from camera mechanics and the work of the operator’s hands, and a properly designed acoustic input will provide natural sound without highlighting frequencies and directions. Disadvantages, like other connectable ones: possibility of damage to electrical connectors and attachment to the camera; the possibility of interference due to poor or incorrect shielding of the cable and connectors.

Finally, the most you can expect from plug-in microphones is an active device, with its own power supply, amplifier, channel mixer, “calculated” acoustic resonator and/or multi-capsule system to provide the desired directionality. The advantages are clear from the description - the recording parameters can be adjusted very precisely. Disadvantages: high price and excessive complexity, which distracts the operator from managing the shooting. However, the latter is rather a matter of training and a common complaint about complex customizable devices.

And an even higher level of quality than on-camera microphones will be provided by a separate recorder for synchronous sound recording and an operator for it.

It seems to me that for a person who is not involved in professional video shooting, the second option and the most basic devices from the third category (simple passive ones or those with a basic built-in microphone amplifier) are quite sufficient. Secondary qualities, and the sound in a video is still secondary, should not demand a lot of attention and effort to the detriment of the primary ones. It is extremely difficult to monitor both the picture and the sound while shooting, whether by visual level meters or headphone monitoring, and it can be argued that in most cases a single operator handling both video and sound will not be able to fully use the functionality of complex, expensive microphones. Therefore the result, the film's soundtrack, will not be fundamentally better than with a simple microphone that is well isolated acoustically from the camera and does not greatly distort the sound. From my own (non-professional) experience, I have repeatedly been convinced that the quality of a recording made by different devices depends more on the correct setting of the volume level and on subsequent processing than on the sophistication of the microphone. An analogy can be drawn with amateur photography: a photo taken with all parameters on auto almost always wins in overall impression over a correctly processed RAW. The nuances are lost, but the first impression of the picture lets us say that the “automatic” one is better. Nuances of sound, moreover, are harder to distinguish than nuances of an image. For most listeners, the absence of interference and noise, the correct volume level and a minimally balanced frequency response mean “professional quality”. Almost all of these qualities can be achieved or improved during post-production of a film's soundtrack.

To process the audio track of video files, I use Audacity and Avidemux programs - free, distributed under the GPL license and running on Windows, Mac and Linux platforms. These programs are quite simple, but their functionality is quite sufficient for basic (and not only) video and sound processing. It’s easy to master them both by trial and by using existing manuals.

The Avidemux program is needed to extract the audio track from a video file and save it separately for processing, and then put it back in place. Audacity is used to analyze and process an audio file.

Preliminary remarks

When processing the audio of a video file separately, you should use an intermediate audio file of no lower “quality” than the original audio track. My camera (Canon EOS 600D) writes video to a MOV container with audio stored as PCM in the configuration “2 channels / 48 kHz / 16 bits”. As an intermediate file I use WAV (for Windows) with the same configuration (2 channels / 48 kHz / 16 bits). It would be possible to use a “higher quality” intermediate file, but I think that for the existing camera and microphones 48 kHz / 16 bits is more than enough. However, if the computer is powerful and there is a lot of free disk space, nothing prevents you from raising both parameters: the sampling frequency and the bit depth.
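One way to confirm that an intermediate file really has the expected configuration is to read its header. The sketch below uses Python's standard `wave` module; to stay self-contained it first writes one second of silence into an in-memory buffer instead of opening a real file, and the helper name `describe_wav` is an assumption of this example.

```python
import io
import wave

def describe_wav(file_obj):
    """Return the basic PCM parameters stored in a WAV header."""
    with wave.open(file_obj, "rb") as w:
        return {
            "channels": w.getnchannels(),
            "rate_hz": w.getframerate(),
            "bits": w.getsampwidth() * 8,
            "seconds": w.getnframes() / w.getframerate(),
        }

# Build a stand-in for the intermediate file: 2 channels / 48 kHz / 16 bits
buf = io.BytesIO()
with wave.open(buf, "wb") as w:
    w.setnchannels(2)
    w.setsampwidth(2)          # 2 bytes per sample = 16 bits
    w.setframerate(48000)
    w.writeframes(b"\x00" * (48000 * 2 * 2))  # one second of silence

buf.seek(0)
info = describe_wav(buf)
print(info)
```

For a file on disk, passing its path to `describe_wav` instead of the buffer should work the same way, since `wave.open` accepts either.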

I did not encounter any issues with timing misalignment when processing the audio and video streams in the final video file, even after processing separately. But if this happens (which is quite possible), I believe that the situation can be corrected using the Audacity speed changing tools (if the reason is precisely the speed mismatch) or using the video editor tools if stream synchronization fails (using the simplest techniques such as “clapperboard” - “Episode one, take two – Clap!”). Since I have no experience in this, I can only mention that such troubles do happen and can be dealt with.

Extract and embed audio track from video file

I will illustrate the sequence of extracting and embedding an audio track using Avidemux with screenshots for this process:

To extract the audio track into a file with suitable parameters (in my case, the PCM option), select the PCM format in the Audio window (1), then in the Audio submenu, Save (2) and then the location and name of the intermediate audio file in the Save dialog submenu (3)

To embed a processed audio file as a video track, you need to: open the video file, select the Audio submenu, main track - Main Track (1), in the next submenu that opens, select an external file - External file (2). Save the video file with the new audio track through the File menu (3)

Processing an audio track in Audacity

In order not to degrade the quality of the audio track of a video file, you need to follow simple rules when shooting a film. When manually controlling the signal level, you should set a value that will be the maximum possible (so as not to apply subsequent software gain) and at the same time the peak values of the signal amplitude will not reach the maximum level possible for the recording device (0 dB). Of course, it’s not easy to predict how the events in the film will develop, and therefore the recording level is chosen so that the peaks do not go beyond a certain non-maximum value, for my camera this is the −12 dB mark (see the article “Canon EOS 600D: shooting video” ). With a high probability, for standard scenes, with this setting, the audio track will turn out to be loud enough and without overload, leading to unpleasant distortion. Another rule is to structure the film scene so that the sources of stray noise are away from the main sound source. The microphone, built into the camera or mounted on it, has some directional sensitivity in both signal level and frequencies. As a rule, a mono microphone (for example, built into a camera) has the least distortion and the best sensitivity in its front direction. For a stereo microphone, the front direction is also the main one, although the relative position of its two (or more) capsules can modulate the sensitivity diagram. The third rule is to “exclude” all possible extraneous sounds when shooting: the operation of camera mechanisms or a stabilizer/tripod, the sounds of the operator’s hands and clothes touching the camera, etc.
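The relation between sample values and the dB marks on a level meter can be checked with a few lines of code. This sketch assumes signed 16-bit samples, where full scale (0 dB) corresponds to an amplitude of 32768; the function name and the sample values are made up for illustration.

```python
import math

def peak_dbfs(samples, full_scale=32768):
    """Peak level of integer PCM samples in dB relative to full scale."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")   # digital silence has no finite level
    return 20 * math.log10(peak / full_scale)

# A peak of about 8231 on a 16-bit scale sits at roughly -12 dB
print(f"{peak_dbfs([0, 8231, -4000]):.1f} dB")
```

A peak at the −12 dB mark therefore uses only about a quarter of the available amplitude range, which is the headroom that protects against unexpected loud moments.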

Since it is not always possible to strictly follow the listed rules, you have to edit the sound in the editor program, and it is clear in advance what will have to be edited: signal level and distortion from signal overload, random noise (clicks, crackles) and systematic (hum of interference, electronic noise, background natural noise, etc.), spectral distortion. All this can be done with varying degrees of efficiency in the Audacity program, which the program manual will help you master.

Most general sound correction operations can be performed using the Effects menu commands. The most commonly used commands are: sample noise removal (1), signal normalization (2), signal amplification and equalizer (3). The program carries out elementary analysis of the recording without calling special commands: if “Show overload” is enabled in the “View” menu, fragments with overload distortions will be marked in red (8)

The “Remove Noise” module (4) (as a rule, used first in the processing sequence) works according to a much more complex algorithm than simply cutting off the most noisy frequencies (which is also implemented in the program). But to use it, there must be a small piece of pure noise in the audio recording (this needs to be recorded in advance during the filming, which is not that difficult). To intelligently remove noise, you need to select a fragment of only the noise (you need to understand that this is both electronic noise and background noise - “streets”), call the “Remove Noise” module, click “Create a noise model” (5). Exit the audio track selection mode for general editing or select only that fragment of it that requires noise cleaning, call the “Remove Noise” module again and click “OK”. Like most Audacity effects, this one has controls for the degree of impact and a preview function. The effect of noise elimination is “noticeable” not only audibly, but also visually: (6) - a fragment of the selection of “pure noise” in the original signal, (7) - a fragment after noise removal. “Noise removal” can also be used if there is no “silence” recorded during shooting. The microphone’s own noise (but without the “street” noise) is not difficult to record separately and incorporate into the edited recording. In this case, when recording “noise,” you need to try to repeat the settings (recording level, other microphone settings, if any) of the sound when recording the film.

Adjusting the sound using the Equalizer. The correction can be represented visually as a curve (1) or with the usual sliders (Graphic EQ mode). Equalizer settings are made through the menu (2), which offers standard presets for common situations (radio, telephone line, etc.); as with the noise removal effect, a preview is available

“Overload” (strong, that is, clearly audible) is one of the most unpleasant defects. As with overexposure in photos or videos, it can only be combated by replacing (painting over) a fragment of the overexposure. An overload fragment is replaced by an adjacent one or silence, mixing tracks is possible. However, it is better to avoid “overload” by following the recommendations of the creators of cameras or recorders (for example, the already mentioned “−12 dB rule”).

“Signal normalization” and “amplification” are simple, similar functions that are not difficult to understand. Both effects let you bring the whole track to the maximum desired signal level (for the full range this is 0 dB, or 1 in Audacity level units), but, as I understand it, they use different algorithms: “amplification” calculates and modifies the signal envelope, while “normalization” works on individual oscillations. Using “Signal Normalization”, you can remove a constant non-zero component of the signal (for cases where the recording path has no DC filter at the input) and amplify the left and right channels of a stereo recording separately (which can ruin a recording with a pronounced stereo effect by leveling it out). I note that when experimenting with different microphones and my photo/video camera, applying “normalization” (after noise reduction) to recordings that sound audibly different makes them practically indistinguishable and sounding “no worse” than before. So you shouldn’t neglect this tool in favor of the “softer” gain. You just need to configure it correctly so as not to lose the stereo effect. However, if there is no pronounced stereo effect, “Signal Normalization” perfectly compensates for microphone asymmetry (different sensitivity of the left and right channels).
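The two things normalization does here, removing a constant offset and scaling to a target peak, can be sketched in a few lines. This is a simplified illustration on floating-point samples in the range −1 to 1, not Audacity's actual implementation; the function name and sample values are assumptions.

```python
def normalize(samples, target_peak=1.0, remove_dc=True):
    """Remove the constant (DC) component, then scale so the peak equals target_peak."""
    if not samples:
        return []
    if remove_dc:
        mean = sum(samples) / len(samples)
        samples = [s - mean for s in samples]
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)   # silence stays silence
    gain = target_peak / peak
    return [s * gain for s in samples]

# Channels with different sensitivity; the left one also carries a DC offset of 0.1
left = [0.1, 0.3, 0.1, -0.1]
right = [0.05, -0.05, 0.1, -0.1]

# Normalizing each channel independently evens out the level difference
norm_left = normalize(left)
norm_right = normalize(right)
print(max(abs(s) for s in norm_left), max(abs(s) for s in norm_right))
```

Because each channel gets its own gain, both end up at the same peak level. That is what compensates for microphone asymmetry, and also what flattens a deliberate stereo effect.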

Typically, a recorder or camera can operate in manual or automatic recording level control mode. “Professionals” naturally recommend manual. Their argument is this: in automatic mode, the silence of pauses can increase to audible noise, etc. But the manual mode is not all good: firstly, there is a risk of overload; secondly, when the sound source moves away from the camera, the sound level may become insufficient for subsequent viewing/listening. The first defect is not easy to deal with, but the second one can be easily corrected using the “Envelope Drawing” function.

Sound correction using “envelope drawing”. Select the “envelope” tool (1) and strengthen or weaken the signal where necessary, simply moving the envelope markers (2), created by clicking the mouse in the desired location. By default, both tracks of the stereo signal are corrected. To get an artificially enhanced stereo effect (so that the sound “walks” from one ear to the other, however, Audacity has a ready-made WahWah filter for this), stereo tracks can be divided, adjusted separately by level, and then mixed back into stereo
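Conceptually, an envelope is a set of control points (time, gain) with the gain interpolated between them. The sketch below assumes straight-line interpolation between points with strictly increasing times; Audacity may interpolate differently, and the function names are hypothetical.

```python
def envelope_gain(t, points):
    """Gain at time t for control points [(time_s, gain), ...] sorted by time."""
    if t <= points[0][0]:
        return points[0][1]            # before the first point: hold its gain
    for (t0, g0), (t1, g1) in zip(points, points[1:]):
        if t <= t1:
            # Straight line between the two neighbouring control points
            return g0 + (g1 - g0) * (t - t0) / (t1 - t0)
    return points[-1][1]               # after the last point: hold its gain

def apply_envelope(samples, sample_rate, points):
    """Multiply every sample by the envelope gain at its position in time."""
    return [s * envelope_gain(i / sample_rate, points) for i, s in enumerate(samples)]

# Raise the level gradually from 1x to 2x over the first second
points = [(0.0, 1.0), (1.0, 2.0)]
print(apply_envelope([1.0, 1.0, 1.0], 2, points))
```

Each marker added with the mouse corresponds to one control point, so a source that walks away from the camera can be compensated with a slowly rising gain.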

If the volume level changes relatively quickly, so that it becomes difficult to manually edit the envelope, you can try using the “equalizer” (with a simple interface) and “compressor” (with the ability to fine-tune the process of dynamic amplification/attenuation of the signal) to correct it.

Compressor effect. In the filter settings window you can configure the signal transfer curve (input on the horizontal axis, output on the vertical; controls: “threshold” and “ratio”), how quickly the filter reacts to a change in signal level (“attack time” and “decay time”), and the signal level taken as the “noise” threshold (a signal below this threshold will not be amplified).
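The transfer curve can be written out as a small function. This is a sketch of a generic static compressor curve with assumed default values, not Audacity's implementation; attack and decay times are left out, and the make-up gain parameter is an addition made here to show the role of the noise threshold.

```python
def compressor_gain_db(level_db, threshold_db=-20.0, ratio=4.0,
                       noise_floor_db=-50.0, makeup_db=0.0):
    """Gain change in dB that the compressor applies at a given input level."""
    if level_db > threshold_db:
        # Above the threshold the output rises `ratio` times more slowly.
        out_db = threshold_db + (level_db - threshold_db) / ratio
    else:
        out_db = level_db
    gain_db = out_db - level_db
    if level_db >= noise_floor_db:
        # Make-up gain is withheld below the noise threshold,
        # so background hiss is not lifted together with the signal.
        gain_db += makeup_db
    return gain_db


loud = compressor_gain_db(-8.0)                  # 12 dB over the threshold
speech = compressor_gain_db(-30.0, makeup_db=6.0)
hiss = compressor_gain_db(-60.0, makeup_db=6.0)
```

Here a signal 12 dB above the threshold is pulled down by 9 dB, quiet speech is lifted by the make-up gain, and material below the noise threshold is left alone.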

There are different approaches to the order in which an audio track is processed. I usually eliminate noise first, then correct the overall signal level (with the signal normalization or amplification command), then make local corrections with the “Envelope Change” tool, and then adjust the sound with the equalizer in “negative” mode (the desired frequencies are left untouched, while the “unnecessary” ones are attenuated). This order avoids returning to the same operation several times. A sequence that does reuse the same function might be: noise reduction; slight overall and local amplification, or normalization, to a level comfortable for listening and analysis by ear; equalizer in “positive” mode (boosting the desired frequencies); amplification to the “1.0” level (in Audacity scale units). Gain has to be applied twice here because the equalizer in “positive” mode itself adds gain: if you apply a “positive” equalizer after boosting to the “1.0” level, you risk overload.

An example of using Audacity and Avidemux to eliminate noise (noise filter), boost speech frequencies and attenuate echo at low frequencies (equalizer), and compress the signal (compressor).

Fragment without sound correction

Fragment after sound correction

Read about testing microphones and using Audacity for these purposes in the next part.

Installation

How to install on PC

All programs are divided into:

- Paid

This is the higher-quality software. It usually comes with a free trial period, after which it stops working unless you pay. After payment, the full set of functions becomes available without interruptions.

You should be careful not to end up with a free “pirated” version, which may contain malware or pop-up ads.

- Partially paid

The basic set of functions of such programs is free. But you will have to pay for additional options.

- Free

The most popular, because they can be used without straining the budget.

To install the required program, enter its name in the Yandex or Google search engine and, from the list of results, choose the program's official website. This is the fastest and safest installation method and will not harm the normal operation of the device.

After launching the software, you must agree to accept the license agreement.

The installer will ask for permission to make changes to the hard drive (HDD). Click “Allow”.

Next, select the folder where the program will be installed, or accept the default location and make a note of it.

You can change the name to something more convenient for yourself, to make it easier to find later.

To avoid installing unnecessary programs, services, and advertising, you should uncheck all unnecessary boxes before installation.

Sound card (for Windows, for Mac)

The built-in sound card in a PC or laptop can be replaced with a more professional one. After removing the blanking plate from the rear panel of the system unit, install the card into the PCI slot and secure it with the mounting screw.

Or use a USB card.

When you purchase a new audio card, it comes with drivers for its installation and management. If they are not available, you can download them for free from the Internet.

How to download to smartphone

To install the application on your mobile device, go to Google Play or the App Store. Tap “Search” and type the name, or choose from the categories offered.

Next, tap the icon to open all the information about the application: ratings, reviews, size, interface, Russian localization, licensing, device compatibility, and much more.

Tap “Download”, then “Open”. The application's icon will appear on the smartphone's home screen, from where it can be opened and used.

The following is an overview of the Top 10 applications for working with sound, with a description of their characteristics.

Real-time audio processing

Sound in Sonar can be processed in two ways: online and offline.

Online processing, that is, real-time processing, does not change the shape of the original signal: you tell the program that when it plays a given track it should send to the output not the original signal but one processed by an effect, or even by several. The effects are calculated on the fly, right during playback, and their parameters are also adjusted on the fly. Everything is great, unless, of course, you take into account the heavy processor load, which is inevitable. And sometimes it’s so difficult not to take it into account!

By offline processing we mean roughly what we talked about in the section on Sound Forge: we process an audio file with some compressor or reverb, the original signal is rebuilt, and the output is a wave of a completely different shape with different sound characteristics, a different look, and often a different size. The original file is lost, unless, of course, we bothered to save a copy of it.

Sonar comes with quite a lot of effects. Some can be used both in real time and offline, others only offline. For now we will talk only about the online ones and leave the offline ones for later.

Let’s return to the Sonar mixer, which still has some controls worth looking at. I mean primarily the black rectangles at the top of the mixer, just below the track names (see Figure 4.73). On some tracks the rectangles are empty; on others you see labels and bright squares. What is this? It is the effects area, the place where real-time processing goes. To add an effect to a track, right-click its black rectangle and choose something from the Audio Effects or MIDI Effects submenu. The effect’s dialog box appears immediately (each effect has its own); we configure everything and then simply close the window.

A line for the reverb, equalizer, delay or compressor now appears in the rectangle. Double-clicking the line brings up the effect dialog box again, so you can change the parameters. The square in the line lets us temporarily disable and re-enable the effect: when the square is green, the effect is on; click it and it turns yellow, and the effect is off. True, effects on MIDI tracks have no squares (for example, the echo on the Xylophone track in Fig. 4.73). To disable such an effect you simply have to delete it: right-click and choose Delete, or press the Del key.
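The distinction between online and offline processing can be illustrated with a toy model. It is a conceptual sketch only: the `Track` and `Effect` classes are hypothetical and have nothing to do with Sonar's internals, and the "effect" is a plain gain.

```python
class Effect:
    """A named processing step with an on/off switch (the green/yellow square)."""

    def __init__(self, name, func):
        self.name = name
        self.func = func
        self.enabled = True


class Track:
    def __init__(self, samples):
        self.samples = list(samples)   # the recorded audio
        self.fx = []                   # effects area of the track

    def play(self):
        """Online processing: compute the effects on the fly, keep the original."""
        out = list(self.samples)
        for effect in self.fx:
            if effect.enabled:
                out = effect.func(out)
        return out

    def apply_offline(self):
        """Offline processing: overwrite the audio with the processed result."""
        self.samples = self.play()
        self.fx.clear()


track = Track([0.1, -0.2, 0.4])
gain = Effect("gain x2", lambda xs: [2 * x for x in xs])
track.fx.append(gain)

heard = track.play()        # processed sound, original untouched
gain.enabled = False
bypassed = track.play()     # effect switched off: original sound again
gain.enabled = True
track.apply_offline()       # now the original samples are gone
```

The online path recomputes the effect on every playback, which is where the processor load comes from; the offline path pays that cost once and loses the original unless a copy was saved.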

Fig. 4.73. Real-time processing in the mixing console

Effects can be dragged with the mouse from one line to another, and also copied (by dragging with Ctrl held down). The mixer has no monopoly on adding effects: they can just as well be added directly in the project window, through the context menu of the FX cell (on the FX or All page). True, there is much less room there for the label and the on/off square, and two effects may not fit completely. If you look closely at the FX line in Figures 4.16 and 4.17, you will see arrows on the right and left with which you can scroll through the list of effects. You can also add effects to the output, the master section; they then affect the entire output signal, that is, they become common to all tracks. Note that on the right, under the letter A, there is also an effects area where you can put, for example, a compressor, a reverb or something else. The project window also has a line with the letter A, which is used in the same way.

Now let’s look at the wide column to the left of the master, which contains a pair of sections with round knobs; these sections are called Aux 1 and Aux 2. Effects placed here also act on several tracks at once, but not on all of them and not at one hundred percent. How so? What is this, and what is it for?

During playback the sequencer takes the sounds of the individual tracks, mixes them and sends them to the output of the sound card. Aux 1 and Aux 2 are alternative, parallel audio paths on which group effects can be placed. Any track can be sent along this roundabout path, processed there, and the result mixed into the output signal. Remember how in some Sound Forge effects we mixed the dry signal with the processed one? With the Aux buses everything is exactly the same.

In the audio track sections, immediately below the effects area, you can see a pair of horizontal sliders numbered 1 and 2 (in Figure 4.72 the cursor points to one of them). The sliders determine whether the signal from a given track goes through Aux 1 and Aux 2 and, if so, with what gain or attenuation. The tooltip reports the state of the slider with something like: Track 3 Aux 2 Send Level = -4.2 dB. In other words, the signal from track 3 is sent to Aux 2, attenuated by 4.2 decibels. When the slider is in the leftmost position, the send from that track to that Aux is switched off.

Each Aux section also has a pair of controls, round knobs that can be turned with the mouse. The first knob sets the gain or attenuation of the signal collected from the tracks and sent to the Aux. The second sets the gain or attenuation of the processed signal that is mixed into the main sound channel.
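The send and return arithmetic can be sketched as follows. This is a generic model of an aux bus, not Sonar's code: the function names are invented, the effect is a placeholder that passes the signal through unchanged, and a send level of `None` stands for a slider in the leftmost (off) position.

```python
def db_to_gain(db):
    """Convert a level in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def mix_with_aux(tracks, send_db, effect, aux_send_db=0.0, aux_return_db=0.0):
    """Mix the tracks directly and add a processed copy routed through one aux bus."""
    n = len(tracks[0])
    dry = [sum(track[i] for track in tracks) for i in range(n)]

    # Collect the per-track sends on the bus.
    bus = [0.0] * n
    for track, level in zip(tracks, send_db):
        if level is None:          # send switched off for this track
            continue
        gain = db_to_gain(level)
        for i in range(n):
            bus[i] += gain * track[i]

    # First knob: level going into the effect. Second knob: level coming back.
    wet = effect([db_to_gain(aux_send_db) * s for s in bus])
    back = db_to_gain(aux_return_db)
    return [d + back * w for d, w in zip(dry, wet)]


tracks = [[1.0, 0.0], [0.0, 1.0]]
sends = [None, -4.2]               # only the second track feeds the aux
out = mix_with_aux(tracks, sends, effect=lambda xs: xs)
```

A send level of -4.2 dB corresponds to a linear factor of about 0.62, so the second track reaches the bus at roughly 62 percent of its amplitude while the first track bypasses the bus entirely.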

The context menu of all these controls contains the Arm for Automation command, familiar from the previous chapter. This means that any manipulation of them can be recorded and then reproduced automatically, so you can, among other things, strengthen or weaken an effect as the composition progresses, for example, add a long echo with multiple repeats at the ends of phrases. Naturally, envelopes are drawn for this too. They are called Aux Bus 1 Send Pan (panorama on Aux 1), Aux Bus 1 Send Level (volume on Aux 1), Aux Bus 2 Send Pan and Aux Bus 2 Send Level. In the Envelopes > Track submenu of the context menu you will find the same entries, so automation can also be created with the mouse, by moving nodes along a curve.

You will find this mechanism, with parallel signal paths and the ability to mix the processed signal with the dry one, in any multi-track audio system, software or hardware. As a result, each track of our project can have its own effects (for example, an equalizer to correct the frequency response, a reverb to place it in space, some chorus or flanger). Tracks that need the same effect can additionally be processed on an Aux, and, very importantly, one more strongly and another more weakly. Finally, the composition as a whole can, for example, be compressed, limited in level, and so on. There are many options and only one selection criterion: your taste. And, of course, experience.

Online processing from the Sonar kit

As you begin to explore the effects supplied with Cakewalk Sonar XL version 2, you will quickly discover quite a few effects of the same type in different variations and from different manufacturers. Right-clicking the FX line brings up the Audio Effects menu, which contains two submenus with real-time effects, Cakewalk and Timeworks. Choruses, reverbs, equalizers, compressors: two of each. And if you also look at the Sonic Foundry submenu (which appears automatically as soon as you install Sound Forge)!.. And add the offline effects, of which there are also two, four, six, eight!..

The point is that the effects differ not so much in appearance and in the set of adjustable parameters as in their mathematical algorithms and, ultimately, in the character of the sound we get at the output. That’s why we are given different plugins to choose from, so that we can pick what suits us best. These subtle things are judged mainly by ear, so the assessments here are subjective, like judging in figure skating. In any case, I won’t push my opinions about the quality of the effects in this book; you’ll hear everything for yourself. Over time some of you will probably want to install effects from other companies whose sound seems even better to you. Fortunately, the choice is quite wide: on program discs and on the Internet you can find plenty of DirectX plugins that Sonar can work with. In this book, however, we will limit ourselves to Sonar’s own effects, and even then not all of them and only briefly; otherwise the rest of the book would be nothing but plugins, plugins, plugins...

Online equalizers

Let’s start with equalizers, with which work on sound processing usually begins. (We discussed what they are and what they are used for in the Sound Forge section, chapter “Equalizers”.) We have two such devices at our disposal:

- Sonar’s own equalizer, called from the Cakewalk submenu with the FxEq command,

- and a “foreign” one (from Timeworks), called from the Timeworks submenu with the Equalizer (Sonar XL) command. As the name suggests, this effect comes only with the extended Sonar XL edition.

Figure 4.74 shows the Cakewalk FxEq equalizer.

Recalling the similar Sound Forge tool, one could call it not parametric, as the developers do, but paragraphic, because here the frequency response is shown in graphical form.

Fig. 4.74. Online equalizer Cakewalk FxEq

We have ten bands to adjust separately: eight ordinary band filters for the middle of the range (1-8) and two edge bands (the low-frequency lo and the high-frequency hi). By clicking a fader or a button in the set line, we choose which band to adjust now; the click appears to light a lamp on the numbered button. Now we can change the parameters of this band on the left side of the window, and the program will know exactly what we are adjusting. The buttons in the on line temporarily exclude bands from processing.

Let’s take a closer look at the left side of the window. The top line on the left shows the number of the band we are about to adjust and the gain set for it. Nothing can be typed in here by hand; use the faders. In the second line, Center frequency, we set the center frequency of the band (in hertz): roughly in the coarse box and precisely in the fine box. You can type the frequency in as a number or use the small sliders under the input boxes. The Bandwidth line controls the width of the band (the quality factor): an approximate value in the coarse box and an exact one in the fine box. The unlabeled box in the fourth line sets the gain scale for the fader. In our picture it is set to 15 dB, which means the fader can change the signal by ±15 dB. If the Bypass button is pressed, the equalizer is switched off, so you can compare how it was with how it is now. The L button applies processing to the left channel only, R to the right only, and L+R to both. To the right of all the faders is the overall gain control, here called Trim. As soon as you move this slider, the entire curve on the diagram moves down or up.
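How center frequency, bandwidth (quality factor) and gain shape a single band can be seen in a short sketch. It uses the widely published peaking-filter formulas from the Audio EQ Cookbook as a stand-in; the algorithm inside FxEq is not documented here and may differ, and the 44100 Hz sample rate and the example values are assumptions.

```python
import cmath
import math


def peaking_coeffs(center_hz, gain_db, q, sample_rate=44100):
    """Biquad coefficients (b, a) for one peaking band of an equalizer."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    return b, a


def response_db(b, a, freq_hz, sample_rate=44100):
    """Gain of the filter, in dB, at a single frequency."""
    z = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate)
    h = (b[0] + b[1] * z + b[2] * z * z) / (a[0] + a[1] * z + a[2] * z * z)
    return 20 * math.log10(abs(h))


# One band: +6 dB at 1 kHz with a moderate quality factor.
b, a = peaking_coeffs(center_hz=1000, gain_db=6.0, q=1.4)
at_center = response_db(b, a, 1000)   # the full boost is reached here
far_below = response_db(b, a, 20)     # almost no change far from the band
```

Raising the quality factor narrows the bump around the center frequency; lowering it spreads the same boost over a wider range.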

Let me remind you that a signal already normalized to 0 dB cannot be amplified further without distortion. Make sure that the peaks of the curve do not reach the top.
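The reason is simple arithmetic, sketched below under the assumption that samples are floats with full scale at ±1.0 and that anything beyond full scale is cut off. The function names are invented for the example.

```python
import math


def peak_db(samples):
    """Peak level relative to full scale, in dB (0 dB = full scale)."""
    return 20 * math.log10(max(abs(s) for s in samples))


def headroom_db(samples):
    """How much gain can be added before the peak reaches full scale."""
    return -peak_db(samples)


def apply_gain_db(samples, gain_db):
    """Amplify; values that would exceed full scale are clipped to it."""
    gain = 10 ** (gain_db / 20)
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


normalized = [0.0, 0.5, 1.0, -1.0]          # peaks already at 0 dB
boosted = apply_gain_db(normalized, 6.0)    # the peaks cannot grow: they flatten
```

The quieter sample is amplified as requested, but the peaks stay pinned at full scale, and that flattening of the waveform is what is heard as distortion.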

The floppy disk button, as you have probably guessed, saves the effect parameters as a preset. The preset appears in the drop-down list at the top of the window. The button with a cross deletes presets you no longer need. Real-time effects can be adjusted without closing the dialog box: just play the file, turn the knobs and listen to what happens. Every beginner is at first confused by the absence of an OK button in the dialog boxes of online effects, and of a Cancel button too. But this does not mean that the effect window has to stay open all the time. You can close it at any moment by clicking the cross in the upper right corner; the window closes, but the line in the list of assigned effects remains. To delete an effect, right-click its line and choose the magic word Delete from the menu.

The high-quality mastering parametric equalizer from Timeworks is shown in Figure 4.75. It has six ordinary band filters and two edge bands. For each band you set the center frequency (Freq slider), the quality factor (Q slider) and the level (Gain). In addition, each band has a Reset button, which sets the gain to zero, and a Bypass button, which temporarily disables the band.

Fig. 4.75. Timeworks Equalizer in normal mode

The overall output level is set by the horizontal Outgain slider. Clicking the Graphic Mode label in the upper right corner switches the equalizer to graphic mode (Fig. 4.76), in which the sliders are replaced by balls on the curve; you can drag these balls left and right to change the frequency, and up and down to change the gain.

Fig. 4.76. Timeworks Equalizer in graphical mode

The panels to the right and left of the graph contain balls of the same colors as on the graph. Clicking such a ball switches the corresponding band on or off. The quality factor and all the other parameters of a band can be entered in the boxes next to its ball. In short, as you can see, plugin interfaces can be built on very different principles, while the effects they perform remain the same. Well, to be fair, the sound at the output still differs. The Timeworks plugin performs 64-bit digital processing, which produces far less of the distortion specific to digital audio. True, the amount of computation grows significantly. That is why such high-quality processing is usually used at the final stage of work on a project, during mastering.

Online reverbs

There are also two online reverbs in the Sonar kit, but both are Cakewalk’s own. They are called from the Cakewalk submenu with the Cakewalk Reverb and Cakewalk FxReverb commands. The window of the first is shown in Figure 4.77.

Fig. 4.77. Cakewalk Reverb

The Dry Mix and Wet Mix knobs control the levels of the dry and processed signals. If you wish, you can type the level into the box under the knob. The Link button ties the dry and wet knobs together so that they turn synchronously but in opposite directions: as the dry signal is strengthened, the processed signal is weakened. The Decay(s) knob controls the length of the reverb tail and the amount of reflected sound. The Bypass button switches the reverb off. The character of the reverberation is also affected by the echo type switch: Sparse Echo gives fairly sparse reflections, closest to an echo effect; Dense Echo gives a thicker sound; No Echo disables repeated reflections. Finally, at the bottom there are two knobs that control the low-pass filter (LP Filter) and the high-pass filter (HP Filter). The first sets the frequency above which, and the second the frequency below which, no reverberation is applied, so that it neither hums nor whistles. The Active buttons switch these filters on and off.

Fig. 4.78. Reverb FxReverb

The second reverb is called Cakewalk FxReverb (see Figure 4.78). Even from its design you can tell that it is newer, and it offers more ways to shape the sound to your liking. The Room Size fader sets the size of the room in which we place the instrument or vocalist: the higher the slider, the larger the room. (If you move this fader during playback, be prepared for the sound to squeak.) In general, plugin developers quite often offer us a kind of room modeling: besides the usual parameters, they let you enter the dimensions of the hall, the distance of the sound source from the walls and from the listener, and other purely geometric parameters. The High/Rolloff slider is a filter for the high frequencies. The High/Decay slider, new to us, sets the decay time for high frequencies. The Density slider affects the number of reflections and how they blend with one another: the higher the Density, the more reflections. The Motion Rate and Motion Depth sliders are supposed to create the impression that the sound source is moving. Somehow I didn’t manage it very well; what came out was more like some kind of phaser. The help says that Motion Rate can be used very aggressively on percussion tracks. Try it, maybe you’ll succeed. Just be sure to be aggressive.

Flanger, chorus, real-time delay

A couple of plugins each are also provided for some other effects: chorus, flanger and delay. The first generation of Sonar plugins (Chorus, Flanger, Delay, and also Reverb) was developed quite a long time ago and was notable not only for its high sound quality but also for its lightness, efficiency and low consumption of computer resources. You can add quite a lot of such effects without the risk of causing a dropout. The second generation (Fx Chorus, Fx Flange, Fx Delay, Fx Reverb) is more modern, more flexible and richer in features. These plugins combine economy with higher-quality processing.

Fig. 4.79. Cakewalk FxFlange two-channel flanger

For example, the flanger (see Fig. 4.79) consists of two flangers: select Voice 1 or Voice 2 and set each voice’s own parameters: delay (Delay), amount of feedback (Feedback), modulation frequency (Mod. Freq.) and panorama (Pan). Everything is mixed at the output. It probably makes most sense to use the Pan slider to send the results of the two voices to different channels of the stereo pair, or at least to different points of the stereo panorama. When Pan is zero, the processed sound is placed exactly in the center of the panorama; at -1 it goes to the left channel, and at +1 to the right. The G (Global) control moves the gain faders of both voices together, changing the overall output level of the effect.

The chorus has as many as four independent voices (see Fig. 4.80). Each has its own delay (Delay), modulation depth and frequency (Mod Depth and Mod Freq.), panorama (Pan) and, of course, gain (Gain).

The delay also has four voices (see Fig. 4.81). Here, besides panning and gain, each voice has the delay time itself (set roughly with coarse and precisely with fine) and feedback, one of the most significant parameters, which determines what part of the delayed signal is fed back into the delay (at 0 the echo is not repeated).
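The roles of the delay, feedback and pan parameters can be shown with a minimal single-voice sketch. It is a textbook feedback delay with a linear pan law chosen for simplicity, not the algorithm of the Cakewalk plugins; the function names and the delay length in samples are assumptions made for the example.

```python
def feedback_delay(samples, delay_samples, feedback, wet=1.0):
    """Add echoes: each repeat is the previous one scaled by `feedback`."""
    delayed = [0.0] * len(samples)
    out = []
    for n, x in enumerate(samples):
        if n >= delay_samples:
            # Delayed input plus a share of the earlier delayed signal.
            delayed[n] = (samples[n - delay_samples]
                          + feedback * delayed[n - delay_samples])
        out.append(x + wet * delayed[n])
    return out


def pan_gains(pan):
    """Linear pan: -1 is left only, 0 is the center, +1 is right only."""
    return (1 - pan) / 2, (1 + pan) / 2


impulse = [1.0] + [0.0] * 6
echoes = feedback_delay(impulse, delay_samples=2, feedback=0.5)
single = feedback_delay(impulse, delay_samples=2, feedback=0.0)

# Place one voice fully in the left channel.
left_gain, right_gain = pan_gains(-1)
left = [left_gain * s for s in echoes]
right = [right_gain * s for s in echoes]
```

With feedback at 0.5 every repeat is half as loud as the one before; with feedback at 0 there is exactly one echo. A flanger or chorus voice works on the same delay line, but with a very short delay time that is modulated continuously.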

Fig. 4.80. Cakewalk Fx Chorus

In the Cakewalk submenu you will also find the Tape Sim line, which simulates the sound of tape, including the inescapable tape hiss (Hiss) that once took so much effort to combat...

Fig. 4.81. Cakewalk FxDelay

Guitar amplifiers

As all guitarists know, an electric guitar is best recorded not from a cable coming directly from the pickup or preamp, but in a roundabout way: the guitar is connected to a combo amp (a tube or transistor amplifier with speakers), and a microphone placed near the amp records the sound. This makes the electric guitar sound much better. Sonar includes two effects that simulate the sound of such an amp: Sonar’s own Amp Sim plugin and the ReValver plugin from Alien Connections.

Fig. 4.82. Guitar amplifier Amp Sim

Figure 4.82 shows the Amp Sim dialog box. The preset list contains several interesting entries that will explain and demonstrate a lot; I will briefly describe what the sliders control and what the drop-down lists offer.

The Drive slider sets the gain of the signal fed to the distortion stage and thus the amount of distortion: the lower the slider, the less distortion. The EQ section controls the tone of the processed signal: Bass controls the low band around 60 hertz, Mid the middle frequencies around 600 hertz, and Treb the high frequencies (6 kHz). The Presence slider controls the “presence effect”. In fact, this is a filter that cuts frequencies below 750 hertz; it sits after the distortion block and processes the final signal.

In the Amp Model list you select the amplification mode, with or without overdrive and, if with, of what type. See for yourself which types are available. In addition, the Bright option brightens the sound by raising frequencies above 500 hertz, roughly as the similar buttons on real guitar amps do.

In the Cabinet Enclosure list you select the size and number of the combo’s speakers. A check mark in the Open Back line simulates a cabinet with an open back: part of the bass seems to escape backward, is not reflected from the wall and does not reach the microphone. The Off-Axis line imitates placing the microphone off the speaker’s axis, which colors the sound; the setting works differently with different amp and cabinet models and has a particularly strong effect on frequencies around 1 kHz.

The Tremolo section simulates, naturally, a tremolo. The Rate slider sets the tremolo speed, Depth sets the modulation depth, and Bias controls how the tremolo is applied: the sound is either attenuated and returned to its original level (far left), or amplified and returned (far right), or both (all other positions).

The Sonar kit also includes the ReValver guitar amplifier, though with the unfortunate letters SE (something like short edition, a stripped-down version), which here mean that some important effects are missing. The full version is offered to us for $100.
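The Rate and Depth parameters of a tremolo can be illustrated with a short sketch. It is a generic amplitude modulation with a sine-wave oscillator, not Amp Sim's code; it models only the “attenuate and return” behavior (the far-left Bias position as described above), and the function name and sample values are made up for the example.

```python
import math


def tremolo(samples, sample_rate, rate_hz, depth):
    """Periodically lower the volume: the gain swings between 1 - depth and 1."""
    out = []
    for n, s in enumerate(samples):
        # Low-frequency oscillator scaled to the range 0..1.
        lfo = 0.5 * (1 + math.sin(2 * math.pi * rate_hz * n / sample_rate))
        gain = 1 - depth * (1 - lfo)
        out.append(s * gain)
    return out


steady = [1.0] * 8                       # constant level, 8 samples per second
wobble = tremolo(steady, sample_rate=8, rate_hz=1.0, depth=0.5)
```

Rate sets how many volume swings happen per second, and Depth sets how far the volume drops at the bottom of each swing; at a depth of 0 the signal passes unchanged.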

Fig. 4.83. Guitar amplifier ReValver

ReValver consists of several sound-processing modules that work in sequence, from top to bottom (see Fig. 4.83): the module placed at the top of the device processes the sound first, then the second, and so on. The Load button loads one of the standard module setups from the groups Blues, Metal, Pop, Rock and Special. In effect these are presets, although they are not called that. To add a module to the end of the chain, click the free space at the bottom, and a single menu item pops up: Insert Module Here. To place a module between two that are already inserted, click the line between them. A module selection window appears (see Fig. 4.84). The modules are divided into four groups: preamps (Preamps), power amplifiers (Poweramps), studio effects (Effects/misc) and speakers (Speakers). When you select a module in the list, its description appears on the right.

Fig. 4.84. Selecting modules

The rules for connecting modules are as follows:

- the speaker should always be placed after the power amplifier;

- the power amplifier should follow studio effects or a preamp;

- studio effects should be placed after the reverberation (here called Room, the imitation of a room when working with a speaker), after speakers and preamps or, conversely, at the very beginning of the chain;

- the room imitation should be placed after the speaker;

- the preamp should follow studio effects or be placed at the beginning of the chain.

There are a couple of exceptions to these rules: the Trim pot and Parametric filters modules (the latter is absent from the cut-down version) can be placed anywhere. Room characteristics are loaded with the Load button on the speaker modules. They are stored on disk in files with the four-letter extension room. I won’t go into the settings of particular modules; you can look at them yourself. In general, everything there is more or less familiar. And the power amplifiers and some of the speakers cannot be adjusted at all. They simply exist, as a fact of life.

Online compressors

Sonar has plenty of devices for dynamic sound processing. The Timeworks menu includes the mastering plugin CompressorX (Sonar XL), and the Cakewalk menu has four more commands, all of which perform dynamic processing: Fx Compressor/Gate (compressor and gate), Fx Expander/Gate (expander and gate), Fx Dynamics Processor (compressor and any other dynamic processing) and Fx Limiter, which is, of course, a limiter. In the Sound Forge section (chapter “Dynamic level processing”) we already worked out what all this is and how it is configured.

Figure 4.85 shows the dialog box of the most versatile of Sonar’s dynamics tools, the dynamics processor. The other effects look almost the same but are more specialized. Accordingly, they have fewer knobs of all kinds and more modest capabilities, but nothing superfluous. If you start reshaping the input-output graph with the mouse, you will find that the Expander/Gate plugin lets you move only two nodes of the curve (the lower one, which crosses the horizontal axis, and the break point), Compressor/Gate lets you move four (top, bottom and two in the middle), and Dynamics Processor lets you not only drag all the nodes but also create new ones, that is, build absolutely any dependence of the output level on the input level.

Fig. 4.85. Cakewalk FX Dynamics Processor

If the Soft knee button is not pressed, then the graph consists of straight line segments, but if you press the button, the program will round the corners. Accordingly, the processing will be softer. Dynamics Processor uses three operating algorithms, which are selected in the Detection Algorithm section:

- Peak (processing by peak levels) is used mainly in limiters - so that not a single peak, even the shortest one, causes overloads;

- Average is most often used to process instrumental solos;

- RMS (Root Mean Square) is suitable for processing speech and vocals.
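The three ways of measuring level give noticeably different readings on the same material, which a few lines of Python make visible. These are the standard definitions of peak, average and RMS level; how Dynamics Processor windows and smooths the signal internally is not specified here, so the single fixed window below is an assumption.

```python
import math


def peak_level(window):
    """Largest absolute value: reacts to even the shortest spike."""
    return max(abs(s) for s in window)


def average_level(window):
    """Mean of the absolute values."""
    return sum(abs(s) for s in window) / len(window)


def rms_level(window):
    """Root mean square: closer to perceived loudness than the peak."""
    return math.sqrt(sum(s * s for s in window) / len(window))


# One short spike in otherwise silent material.
window = [0.0, 0.0, 0.0, 1.0]
readings = (peak_level(window), average_level(window), rms_level(window))
```

On this window the peak detector reports full scale, while the average and RMS detectors report a quarter and a half of it, which is why a limiter relies on peaks and speech processing on RMS.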

In addition, in the Stereo Interaction section you can select one of two stereo sound processing algorithms:

- if the Maximum button is pressed, then processing proceeds in the usual way: the sound in the channels is amplified or attenuated to the same extent in accordance with the signal levels in them;

- if the Side Chain button is pressed, the program looks at the levels only in the left channel, and applies processing only to the right. The authors of the program offer in such a strange way to control the accompaniment levels depending on the vocal levels.

Fx Limiter is the simplest of the dynamics commands. It doesn’t even have a graph in its window, and there are only two knobs: the overall gain (Output Gain) and the maximum level above which nothing may get louder (Limiter Thr). This level can be set between -40 and -0.1 dB.

Fig. 4.86. Timeworks CompressorX

Figure 4.86 shows another example of the programmer’s art, a 64-bit compressor from Timeworks. There are no graphs here, just sliders, buttons and a level indicator. If you more or less understand compressor parameters, you will work out how to use this device simply by reading the names of the sliders. And, of course, look at the parameter values offered by the various presets.

Online processing of MIDI tracks

There is no such abundance of effects for MIDI tracks as there is for audio, and there probably never will be. The MIDI Effects list includes the already mentioned Arpeggiator (see the chapter “Guitar Neck”), a drum machine (see the chapter of the same name), as well as quantize, transpose, volume, delay and other effects that are rarely used as online processing. But the main sound effects (reverb, compressor, equalizer) are completely absent from the MIDI Effects list. Why? The fact is that such transformations of MIDI sound as reverb, compression and equalization are usually performed by the effects processor of the sound card. If your card has no such processor, there are no such effects; if it has one, it does the job itself, without the sequencer’s help. Well, so be it! At the end of the work you will need to convert the MIDI music into audio anyway (unless, of course, the goal of your work is a MIDI file without live sound rather than a music CD or an MP3 file to post on the Internet). Convert it and then process it.

If you have used one of the online MIDI effects, you can apply it to a track permanently by selecting the track and running the Apply MIDI Effects command from the Process menu. For instance, an online drum machine draws no notes on the track, which may remain completely empty. After you apply the effect, the notes appear on it.
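The difference between an online MIDI effect and an applied one can be modeled in a few lines. Everything here is hypothetical and unrelated to Sonar's file format: notes are (start tick, pitch) pairs, the resolution of 480 ticks per beat is an assumption, and pitch 36 is the General MIDI bass drum.

```python
TICKS_PER_BEAT = 480   # assumed resolution
KICK = 36              # General MIDI Bass Drum 1


def kick_pattern(notes, beats=4):
    """Online 'drum machine': adds a kick on every beat to whatever is on the track."""
    return notes + [(beat * TICKS_PER_BEAT, KICK) for beat in range(beats)]


def quantize(notes, grid):
    """Snap every note start to the nearest grid line."""
    return [(round(start / grid) * grid, pitch) for start, pitch in notes]


class MidiTrack:
    def __init__(self, notes):
        self.notes = list(notes)
        self.effects = []

    def play(self):
        """Effects are computed during playback; the stored notes do not change."""
        notes = list(self.notes)
        for effect in self.effects:
            notes = effect(notes)
        return notes

    def apply_effects(self):
        """Write the result into the track, as an 'apply' command would."""
        self.notes = self.play()
        self.effects = []


drums = MidiTrack([])                 # an empty track
drums.effects.append(kick_pattern)
heard = drums.play()                  # four kicks are heard
stored_before = list(drums.notes)     # but the track itself is still empty
drums.apply_effects()                 # now the notes are drawn on the track

tidy = quantize([(5, 60), (118, 62), (250, 64)], grid=120)
```

Before the effect is applied, the kicks exist only during playback; afterwards they are ordinary notes that can be edited like any others.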