Articles • May 1, 2020 • Sannio

The central processing unit is often called the “brain” of a computer, because, like the human brain, it consists of several parts assembled together to work on information. Among them are those responsible for receiving information, storing, processing and outputting it. In this article, TechSpot breaks down all the key elements of the processor that make your computers work.

This text is part of a series of articles that thoroughly examine the operation of key computer components. In addition, if you are interested in the topic, we recommend that you read the translations of the articles in the series “How are processors developed and created?”

This article covers both the basics of processors and more advanced concepts. Some abstraction is unavoidable, and for good reason. If you look at a power supply, you can easily see all its parts, from capacitors to transistors. Processors are not so simple: we physically cannot see everything inside the chip, and Intel and AMD are in no hurry to share the details of how their products work with the general public. However, the information presented in this article applies to the vast majority of modern processors.

So let's get started. Any computing device needs something like a central processing unit. Essentially, the programmer writes code to accomplish his own goals, and then the processor executes it to produce the desired result. The processor is also connected to other parts of the system, such as memory and input/output devices, to ensure that the necessary data is loaded, but we will not focus on them in this article.

The foundation of any processor: instruction set architecture

The first thing you come across when examining any processor is the instruction set architecture (ISA). The architecture is something like the foundation of the processor: it determines how the processor works and how all the internal systems interact with each other. There are a huge number of architectures, but the most common are x86 (mainly in desktop computers and laptops) and ARM (in mobile devices and embedded systems).

Slightly less common and more niche are MIPS, RISC-V and PowerPC. The instruction set architecture is responsible for a number of basic things: what instructions the processor can execute, how it interacts with memory and cache, how work is distributed across several processing stages, and so on.

To better understand the structure of the processor, let's analyze its elements in the order in which commands are executed. Different instruction types can follow different paths and use different CPU components, so they will be summarized here to cover as much as possible. Let's start with the basic design of single-core processors and gradually move on to more advanced and complex examples.

Energy consumption and heat dissipation

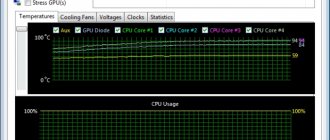

Power consumption depends directly on the manufacturing technology and the operating frequency. A finer process lets each transistor switch with less energy, while higher clock frequencies and voltages increase power consumption and heat dissipation.

To reduce power consumption and heat output, processors automatically lower their frequency and voltage when full performance is not needed. High-performance computers still need a good processor cooling system.

To summarize, here is the short answer to the question of what a processor is today:

Today's processors can work with RAM over multiple channels, and new instructions keep extending their capabilities. Graphics processing built into the processor reduces the cost of both the processors themselves and the office and home computers built around them. Virtual cores allow performance to be distributed more practically. As these technologies develop, so do the computer and its central component, the processor.


Control unit and datapath

The elements of a processor can be divided into two main parts: a control unit and a datapath (also called the execution path). In simple terms, a processor is like a train in which the driver (the control unit) controls the various elements of the engine (the datapath).

The execution path is like a motor and, as the name suggests, it is the path through which data is transferred while it is being processed. It receives input data, processes it and sends it to the desired location after the operation is completed. The control unit, in turn, directs this data flow. Depending on the instruction, the execution path will route signals to various processor components, turn different parts of the path on and off, and monitor the state of the entire processor.

Block diagram of the core processor. Black lines show the data flow, and red lines show the command flow.

Instruction cycle - Fetch

The first thing the processor must do is determine which instruction needs to be executed next, and then move it from memory to the control unit. Instructions are generated by the compiler and are specific to the instruction set architecture (ISA). The most basic instruction types (such as load, store, add, subtract, etc.) are common to all ISAs, but there are many additional, special instruction types unique to a particular architecture. The control unit knows which signals need to be sent where to execute a given type of instruction.

For example, when you run an .exe file in Windows, the code of the program is loaded into memory and the processor receives the address at which the first instruction begins. The processor always maintains an internal register that holds the memory address of the next instruction to be executed. This register is called the program counter.

Once the processor knows where to start, the instruction is moved from memory into the processor's instruction register - this process is called instruction fetching. Realistically, the instruction is most likely already in the processor cache, but this will be discussed a little later.
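The fetch step can be sketched in a few lines of Python. This is only a toy model: the memory contents, the addresses and the fixed 4-byte instruction size are assumptions made for illustration, and real processors fetch binary words rather than text.

```python
# Toy model of the fetch stage. The addresses and instruction strings
# are invented for illustration.
memory = {
    0x100: "LOAD r1, [0x200]",
    0x104: "ADD r1, r1, 1",
    0x108: "STORE r1, [0x200]",
}

INSTRUCTION_SIZE = 4  # assumption: fixed-width, 4-byte instructions


def fetch(program_counter):
    """Copy one instruction from memory and advance the program counter."""
    instruction_register = memory[program_counter]
    return instruction_register, program_counter + INSTRUCTION_SIZE


pc = 0x100  # address of the first instruction, supplied when the program starts
fetched = []
while pc in memory:
    instruction, pc = fetch(pc)
    fetched.append(instruction)

for instruction in fetched:
    print(instruction)
```

The program counter always points at the next instruction, which is why a branch can redirect execution simply by writing a new value into it.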

Software problems

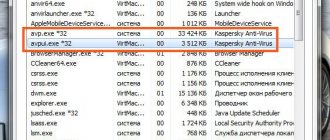

The most likely reason the CPU is running at 100% is that programs are running in the background. Quite often, a resource-intensive application that the user was working with is not completely unloaded from memory. To some extent, this can be considered a type of “freezing.” The difference is that a frozen program does not allow its window to be closed and does not respond to commands. In this case the window closes, but the process remains in RAM. The computer considers such a program to be running and continues to allocate computing resources to it. As a result, the processor is loaded at 100 percent even though no running tasks are visible.

Windows 7 Task Manager

So, let's see what to do in Windows 7 when faced with such a problem. Microsoft provides a full-fledged process management tool, Task Manager. To open it, right-click the taskbar to bring up the context menu.

Select the item marked in the screenshot to launch Task Manager.

Open the “View” item in the top menu and go to the marked position.

To quickly identify the “culprit”, check the indicated items. Task Manager is now ready for use. The window settings are remembered, so no additional setup will be needed next time.

Sort by the “CPU” column, which displays the load percentage. The “culprit” is immediately visible. In this case, it is an archiving program that consumes half of the system resources. Select the process and click the button circled in the screenshot to forcefully terminate it.

Instruction cycle - Decoding

When the processor receives an instruction, it needs to determine exactly what type of instruction it is. This process is called decoding. Each instruction has a special set of bits, the opcode, which allows the processor to recognize its type. The way a computer recognizes file extensions works on roughly the same principle. For example, .jpg and .png are both image formats, but they store data differently, so the computer needs to recognize the type accurately.

The complexity of decoding depends on how complex the processor's instruction set architecture is. The RISC-V architecture, for example, has several dozen instructions, while x86 has several thousand. For a typical Intel x86 processor, the decoder is one of the most complex parts of the design and takes up a large amount of chip area. The most common instructions processors decode are memory, arithmetic, and branch instructions.
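As a rough illustration of decoding, the sketch below splits a 32-bit RISC-V instruction word into its fields using shifts and masks. The field positions follow the RISC-V R-type format; the function name `decode_r_type` is made up for this example, and a real decoder is a hardware circuit that handles many more formats.

```python
def decode_r_type(word):
    """Split a 32-bit RISC-V R-type instruction word into its bit fields."""
    return {
        "opcode": word & 0x7F,          # bits 6..0: instruction class
        "rd": (word >> 7) & 0x1F,       # bits 11..7: destination register
        "funct3": (word >> 12) & 0x7,   # bits 14..12: operation selector
        "rs1": (word >> 15) & 0x1F,     # bits 19..15: first source register
        "rs2": (word >> 20) & 0x1F,     # bits 24..20: second source register
        "funct7": (word >> 25) & 0x7F,  # bits 31..25: operation selector
    }


# 0x002081B3 is the encoding of "add x3, x1, x2".
fields = decode_r_type(0x002081B3)
print(fields)
```

Here the opcode value 0x33 identifies a register-to-register arithmetic instruction, and the remaining fields tell the control unit which registers to read and write.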

Arithmetic logic unit

Let's return to the command execution stage. Let's immediately note that it is different for all three of the above types of commands, so let's look at each of them.

The easiest to understand are arithmetic instructions. These are sent to the Arithmetic Logic Unit (ALU) for processing. The ALU is a circuit that typically takes two input values plus a control signal and produces a result.

Imagine a regular calculator. For any calculation, you enter the values, select the required arithmetic operation, and get the result. An Arithmetic Logic Unit (ALU) operates on a similar principle. The type of operation depends on the instruction opcode that the control machine sends to the ALU, which, in addition to basic arithmetic, can perform bit operations such as AND, OR, NOT, and XOR on the values. In addition, the arithmetic logic unit outputs information about the calculation performed for the control machine (for example, whether it was positive, negative, zero, or caused an overflow).

Although the arithmetic logic unit is most often associated with arithmetic operations, it is also used by memory and branch instructions. For example, the processor may need to calculate a memory address that depends on a previous calculation, or to calculate the jump offset to add to the program counter when an instruction requires it (example: "if the previous result is negative, jump forward 20 instructions").
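The calculator analogy can be made concrete with a small software model of an ALU. This is a sketch under stated assumptions: the 8-bit width, the operation names and the `alu` function are all chosen for illustration and do not describe any particular processor.

```python
BITS = 8                  # assumption: a tiny 8-bit ALU
MASK = (1 << BITS) - 1    # 0xFF
SIGN = 1 << (BITS - 1)    # 0x80, the sign bit


def to_signed(value):
    """Interpret an 8-bit pattern as a two's complement number."""
    return value - (1 << BITS) if value & SIGN else value


def alu(op, a, b=0):
    """Return (result, flags) for the selected operation on 8-bit inputs."""
    a &= MASK
    b &= MASK
    overflow = False
    if op in ("ADD", "SUB"):
        exact = to_signed(a) + to_signed(b) if op == "ADD" else to_signed(a) - to_signed(b)
        overflow = not (-SIGN <= exact < SIGN)  # result does not fit in 8 signed bits
        result = exact & MASK
    elif op == "AND":
        result = a & b
    elif op == "OR":
        result = a | b
    elif op == "XOR":
        result = a ^ b
    elif op == "NOT":
        result = ~a & MASK
    else:
        raise ValueError(f"unknown operation: {op}")
    flags = {
        "zero": result == 0,
        "negative": bool(result & SIGN),
        "overflow": overflow,
    }
    return result, flags


print(alu("ADD", 100, 28))  # exceeds the signed 8-bit range, so overflow is set
print(alu("SUB", 5, 5))     # result is zero, so the zero flag is set
```

The flags are what a later conditional branch inspects, which is how a comparison in source code ends up steering the program counter.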

How to find out the processor used in a computer

You may need to find out what processor is in your computer to determine whether you can install some complex program or game. This information is also needed when upgrading your computer. You can obtain this information in several ways.

The easiest and fastest way to find out is to right-click on the “Computer” icon located on the desktop and select “Properties”. Among other things, the manufacturer, model and clock speed of the processor will be indicated. More detailed information including other processor characteristics such as core, process technology, socket, stepping, cache, and so on can be found using special programs. We recommend using the simple and convenient CPU-Z program, which shows a lot of useful information about your system. Data about the central processor is collected on the CPU tab.
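Some of the same information can also be read programmatically. The sketch below uses only Python's standard `platform` and `os` modules; what they report varies by operating system, and `platform.processor()` may return an empty string on some systems, so treat the output as a rough hint rather than a replacement for a tool like CPU-Z.

```python
import os
import platform

info = {
    "machine": platform.machine(),      # architecture, e.g. an x86 or ARM identifier
    "processor": platform.processor(),  # vendor/model string, may be empty
    "logical_cpus": os.cpu_count(),     # logical processors visible to the OS
}

for key, value in info.items():
    print(f"{key}: {value}")
```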

Commands and memory hierarchy

To better understand how memory instructions work, it's worth looking at the memory hierarchy - the relationship between the cache, RAM, and main storage. When the processor executes a memory instruction for which it does not already have the data in a register, it moves down the memory hierarchy until it finds the information it needs. Most modern processors have three cache levels: first, second and third. The processor first checks the first-level cache - the smallest and fastest of all. Often this cache is divided into two parts: one reserved for data and the other for instructions. Remember, instructions are retrieved from memory by the processor just like any other data.

A typical L1 cache is tens of kilobytes per core, or a few hundred kilobytes across the whole chip. If the processor does not find what it needs there, it moves on to the second-level cache (up to several megabytes in size), and then the third (tens of megabytes). If the data is not in the third-level cache either, the search continues in RAM and then in storage devices. With each such step, both the available capacity and the latency increase.

Once the processor has found the data, it copies it up the memory hierarchy so that it can be found faster if needed again. For reference: the processor can read data from an internal register in just one or two cycles, the first-level cache takes a little longer, the second-level cache about ten cycles, and the third several dozen. Going out to RAM typically costs hundreds of cycles, and going to storage can cost tens of thousands or even millions. Depending on the system, each processor core may have its own L1 cache, an L2 cache shared with another core, and an L3 cache shared by a group of four or more cores. We will talk about multi-core processors in more detail later.
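The walk through the hierarchy can be modeled as a simple lookup loop. The latencies below are illustrative assumptions rather than measurements of any real chip, and the model simply adds up the latency of every level it has to check.

```python
# (level name, assumed latency in cycles), fastest first
LEVELS = [("L1", 4), ("L2", 10), ("L3", 40), ("RAM", 200)]


def load(address, caches):
    """Search each level in turn; return (level that had the data, total cycles)."""
    cycles = 0
    for depth, (name, latency) in enumerate(LEVELS):
        cycles += latency
        if name == "RAM" or address in caches[name]:
            # Copy the data into every faster level for future accesses.
            for faster_name, _ in LEVELS[:depth]:
                caches[faster_name].add(address)
            return name, cycles


caches = {"L1": set(), "L2": set(), "L3": {0x40}}

first = load(0x40, caches)   # found in L3 after missing L1 and L2
second = load(0x40, caches)  # now served from L1
cold = load(0x80, caches)    # not cached anywhere, goes to RAM
print(first, second, cold)
```

The second access to the same address is much cheaper than the first, which is the whole point of keeping recently used data close to the core.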

Basic concepts of processor in computer science

What are threads in a processor

A thread of execution is the smallest unit of processing that the operating system kernel schedules; it separates the code and context of one running task from another. Multiple processes can use CPU resources at the same time. Intel's Hyper-Threading technology, first introduced in the Pentium 4 and Xeon lines and later used across much of the Core family, presents one physical core as two logical ones. The operating system therefore sees additional logical processors and can schedule more threads at once. It follows that the number of cores alone is not decisive, since in some cases computers with 4 cores are inferior in performance to those with only 2.

What is a technical process in a processor?

In computer science, process technology refers to the size of the transistors used in the processor core. CPUs are manufactured using photolithography: transistors are etched, under the influence of light, on a crystal covered with a dielectric film. The optical equipment used has a characteristic called resolution, and this is what defines the process technology. The finer the resolution, the more transistors can fit on one chip.

Reducing the size of the elements on the crystal contributes to:

- lower heat generation and energy consumption;

- higher performance, since a crystal of the same physical size can hold a larger number of working elements.

The unit of measurement for the process is the nanometer (10⁻⁹ m). The source cites 22 nm as the process technology used for most modern processors; newer chips use smaller nodes.

A finer process technology increases the number of working elements of the processor while maintaining its size

What is CPU virtualization

The basis of the method is to divide CPU operation into a guest part and a monitor (hypervisor) part. When a switch between the host and the guest OS is required, the processor performs it automatically, exposing only those register values that are required for stable operation. Since the guest operating system interacts directly with the processor, the virtual machine runs much faster.

You can enable virtualization in the BIOS settings. Most modern processors from both Intel and AMD support hardware virtualization, although it may be disabled by default. Where hardware support is missing, software methods come to the aid of the user.

What are processor registers

A processor register is a small block of digital circuitry that serves as ultra-fast memory, used by the CPU to store the results of intermediate operations. Each processor contains a great many registers, most of which are inaccessible to the programmer and are reserved for the processor's own internal functions. There are general-purpose and special-purpose registers. The first group is available to programs, the second is used by the processor itself. Since access to CPU registers is faster than access to RAM, compilers and programmers use them heavily.

Jump and Branch Commands

The last of the three main instruction types is the branch instruction. Modern programs jump around constantly, so the processor rarely executes more than a dozen adjacent instructions without a branch. Branch instructions come from programming constructs such as IF statements, FOR loops, and RETURN statements. All of them interrupt sequential execution and switch to another part of the code. There are also jump instructions, which are branches that are always taken.

Conditional branches are especially difficult for the processor, since it may be executing several instructions at once and may not determine the outcome of a branch until it has already started work on the instructions that follow it.

To understand why conditional branches are difficult for a processor, it is worth looking at pipelining. Each step in executing an instruction can take several cycles, which means that the arithmetic logic unit could sit idle while an instruction is being fetched. To maximize utilization of the processor, execution is divided into stages that work on different instructions at the same time - a technique called pipelining.

The simplest analogy is doing laundry. Let's say you have enough clothes for two full loads, and each load takes an hour to wash and an hour to dry. You could wash the first load, transfer it to the dryer, and start the second load only when the first is dry. That takes four hours. However, if you start washing the second load while the first is drying, you can complete the entire job in three hours. The time saved depends on the number of loads and the number of washers and dryers. A single load still takes two hours, but in the example above, overlapping the steps increases the overall throughput from 0.5 loads/hour to about 0.67 loads/hour.
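The laundry arithmetic can be checked with a short calculation. The function names are made up for this sketch, and the pipelined formula assumes one machine per stage with no other delays.

```python
def sequential_hours(loads, stage_hours):
    """Each load finishes every stage before the next load starts."""
    return loads * sum(stage_hours)


def pipelined_hours(loads, stage_hours):
    """The first load passes through every stage; each further load
    finishes one slowest-stage interval after the previous one."""
    return sum(stage_hours) + (loads - 1) * max(stage_hours)


stages = [1, 1]  # one hour to wash, one hour to dry
loads = 2

slow = sequential_hours(loads, stages)
fast = pipelined_hours(loads, stages)
print(f"sequential: {slow} h, {loads / slow:.2f} loads/hour")
print(f"pipelined:  {fast} h, {loads / fast:.2f} loads/hour")
```

Two loads in three hours works out to about 0.67 loads per hour. With more loads the advantage grows: ten loads take 20 hours sequentially but only 11 hours when pipelined.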

Graphical representation of the pipeline used in AMD Bobcat (2011) processor cores. Notice how many different elements and stages there are in it.

Processors use the same principle to increase instruction throughput. Modern ARM or x86 processors can have over 20 pipeline stages, which means that a processor core works on over 20 different instructions at the same time. Designs differ in how they divide up these stages, but one publicly available example has 4 cycles for fetching, 6 cycles for decoding, 3 cycles for executing instructions, and 7 cycles for sending results to memory.

Returning to branches, the problem should now be clear. If the processor does not learn that an instruction is a branch until the tenth cycle, it will already have started work on 9 new instructions, which may be invalid if the branch is taken. To avoid this, processors include a complex mechanism called a branch predictor. Its principle of operation resembles machine learning. A detailed description is a topic for a separate article, so a simple explanation will have to do: the predictor tracks the outcomes of previous branches to guess whether the next branch will be taken or not. Modern branch predictors can reach an accuracy of 95% or higher.

Once the branch outcome is known for certain (i.e., the relevant pipeline stage has completed), the program counter is updated and the processor moves on to the next instruction. If the outcome does not match the prediction, the processor discards all the instructions it started executing by mistake and resumes from the correct point.
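To give a feel for prediction based on previous outcomes, here is a sketch of a 2-bit saturating counter, a classic textbook scheme. It is not a description of the predictors in current Intel or AMD chips, which are far more elaborate; the function name and the branch history are invented for the example.

```python
def simulate(outcomes, state=2):
    """Count correct predictions of a 2-bit saturating counter.

    state ranges over 0..3; the branch is predicted taken when state >= 2.
    """
    correct = 0
    for taken in outcomes:
        prediction = state >= 2
        if prediction == taken:
            correct += 1
        # Move toward 3 on taken branches and toward 0 on not-taken ones.
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return correct


# A loop branch: taken 9 times, then falls through once, repeated 10 times.
history = ([True] * 9 + [False]) * 10
hits = simulate(history)
print(f"accuracy: {hits / len(history):.0%}")
```

Even this tiny predictor is wrong only at each loop exit, because a single surprise is not enough to flip its prediction.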

Structure

The general structure of any central processor consists of the following blocks:

- Interface block;

- Operating unit;

The interface block contains the following components:

- Address registers;

- Memory registers, which store the codes of instructions scheduled for execution in the near future;

- Control devices, which generate the control commands that are subsequently executed by the CPU;

- Control circuits responsible for the operation of ports and system buses;

The operating unit includes:

- Microprocessor memory. It consists of segment registers, flag registers, general-purpose registers and instruction counters;

- Arithmetic logic unit. It carries out instructions as a set of logical or arithmetic operations;

Note! The operating block and the interface block work in parallel, but the interface part is one step ahead, writing commands to the register block that are subsequently executed by the operating part.

The system bus is used to transmit signals from the central processor to other components of the device. With each new generation, the structure of the processor changes slightly and the latest developments are very different from the first processors used at the dawn of computer technology.

Out-of-order execution

Now that you know how the three most common instruction types work, let's turn our attention to more advanced processor features. Almost all modern CPUs do not actually execute instructions in the order they are received. A feature called out-of-order execution is designed to reduce the amount of time the processor sits idle while waiting for other instructions to complete.

If the processor realizes that the next instruction needs data that will take longer to find, it can rearrange the order of the instructions, starting work on an unrelated instruction while the search is taking place. Out-of-order execution of instructions is an extremely useful, but far from the only auxiliary function of the processor.
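The idea can be sketched with a toy scheduler that issues at most one instruction per cycle. Everything here is a simplification chosen for illustration: the instruction tuples, the latencies and the `issue_order` function are made up, and the model tracks only whether source values are ready, ignoring the register renaming that real hardware needs.

```python
# Each instruction: (name, destination register, source registers, latency in cycles)
program = [
    ("load r1", "r1", [], 3),                      # slow memory access
    ("add r2 = r1 + 1", "r2", ["r1"], 1),          # depends on the load
    ("mul r3 = r4 * r5", "r3", ["r4", "r5"], 1),   # independent of both
]


def issue_order(program, ready_regs, out_of_order):
    """Return a list of (cycle, name) showing when each instruction is issued."""
    ready_at = {reg: 0 for reg in ready_regs}  # cycle at which each value is available
    pending = list(program)
    order = []
    cycle = 0
    while pending:
        # In-order issue may only look at the oldest pending instruction.
        candidates = pending if out_of_order else pending[:1]
        for instr in candidates:
            name, dest, sources, latency = instr
            if all(ready_at.get(src, float("inf")) <= cycle for src in sources):
                ready_at[dest] = cycle + latency
                order.append((cycle, name))
                pending.remove(instr)
                break  # at most one instruction issued per cycle
        cycle += 1
    return order


in_order = issue_order(program, ["r4", "r5"], out_of_order=False)
reordered = issue_order(program, ["r4", "r5"], out_of_order=True)
print(in_order)
print(reordered)
```

In the in-order run the multiply waits behind the stalled add, while the out-of-order run slips it into a cycle that would otherwise be wasted.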

Another extremely useful feature of the processor is prefetching. If you measure how long it takes to execute a random instruction from start to finish, you will find that most of the time is spent accessing memory. The prefetch unit is an element in the CPU that looks at the instructions in the queue and determines what data they will require. If it notices that an operation requires data that is not already in the processor cache, it fetches that data from RAM into the cache ahead of time. Hence its name.
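The effect of prefetching can be illustrated with a much simpler scheme than the one described above: a next-line prefetcher that, on every access, also pulls in the following cache line. The 64-byte line size, the unbounded cache and the function name are assumptions of this sketch, and timing is ignored entirely.

```python
LINE_SIZE = 64  # assumption: 64-byte cache lines


def count_misses(addresses, prefetch_next_line):
    """Count cache misses for a sequence of byte addresses."""
    cached_lines = set()  # unbounded cache, for simplicity
    misses = 0
    for address in addresses:
        line = address // LINE_SIZE
        if line not in cached_lines:
            misses += 1
            cached_lines.add(line)
        if prefetch_next_line:
            cached_lines.add(line + 1)  # fetch the neighbour before it is requested
    return misses


# Walk through 16 consecutive cache lines, 8 bytes at a time.
sequential = range(0, LINE_SIZE * 16, 8)
without_prefetch = count_misses(sequential, prefetch_next_line=False)
with_prefetch = count_misses(sequential, prefetch_next_line=True)
print(without_prefetch, with_prefetch)
```

For a sequential walk, only the very first access misses once prefetching is on. Random access patterns benefit far less, which is why real prefetchers try to detect patterns before acting.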

Accelerators and the Future of Processors

Another important feature that is increasingly appearing in processors is accelerators for specific tasks. These accelerators are small circuits whose main goal is to complete a specific task as quickly as possible. This task could be encryption, data encoding, or machine learning.

Of course, the processor can do all this on its own, but a unit created specifically for this purpose will be much more efficient. A clear indicator of the power of the accelerators will be a comparison of the built-in graphics processor with a discrete video card. Of course, the processor can perform the calculations needed to process graphics, but having a separate unit provides much higher performance. As the number of accelerators increases, the actual CPU core may only occupy a small portion of the chip.

The first picture below shows the design of an Intel processor released more than ten years ago, where cores and cache occupy most of the die, while the second shows a much more modern chip from AMD. As we can see, in the second case most of the die is allocated not to the cores but to other components.

Intel processor chip of the first generation of the Nehalem architecture. Please note: cores and cache take up the vast majority of the area.

System-on-a-chip crystal from AMD. A lot of space is allocated for accelerators and external interfaces.

Types of processors

There are two main processor manufacturers: AMD and Intel. They produce the most popular, affordable and capable models. Their chips can be found in almost every computer and in game consoles such as the PlayStation and Xbox.

All pros and cons are subject to change, because new models that differ radically from each other are released every year. But the points below are characteristic of almost all models from these manufacturers.

Intel - pros and cons

- Low power consumption and operating temperature

- Good performance in graphics and video processing software

- Not so dependent on RAM

- Are better at multitasking

- The price is quite high compared to AMD

- The graphics chip, if present, is not as powerful as the competitor's

- Working with archives is not as fast as we would like

- Overclocking is not as flexible

AMD - pros and cons

- High gaming performance

- Many models are quite "hot", but not all

- Adequate price

- Excellent speed of working with different programs and archives

- The graphics chip, if there is one, shows good results

- Good overclocking capabilities

- RAM dependent

Multi-core

The last feature of processors discussed in this article is how several individual cores can be combined to create a multi-core processor. This is not just a matter of putting several copies of one core together: just as you cannot simply turn a single-threaded program into a multi-threaded one, you cannot do the same with a processor. The problem arises from dependencies between the cores.

With four cores, the processor needs to issue instructions 4 times faster. It also needs four separate interfaces to memory. Because several cores on the same chip may work with the same pieces of data, the problem of coherence and consistency arises. If two cores are processing instructions that use the same data, how does the processor determine which one has the correct value? What if one core modified the data, but the change did not reach the second core in time? Because the cores have separate caches that may store overlapping data, complex algorithms and controllers must be used to resolve potential conflicts.
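The stale-data problem and one simple way around it can be shown with a toy model of two cores with private caches. The `Core` class, the write-through policy and the `invalidate` switch are assumptions of this sketch; real coherence protocols such as MESI track more states and work in hardware.

```python
class Core:
    """A core with a private cache in front of shared memory."""

    def __init__(self, memory):
        self.memory = memory
        self.cache = {}

    def read(self, address):
        if address not in self.cache:
            self.cache[address] = self.memory[address]  # fill on a miss
        return self.cache[address]


def write(writer, others, address, value, invalidate):
    """Write through to memory; optionally invalidate other cores' copies."""
    writer.cache[address] = value
    writer.memory[address] = value
    if invalidate:
        for core in others:
            core.cache.pop(address, None)


memory = {0x10: 1}
core_a, core_b = Core(memory), Core(memory)
core_a.read(0x10)
core_b.read(0x10)  # both cores now hold a cached copy of the value 1

write(core_a, [core_b], 0x10, 2, invalidate=False)
stale = core_b.read(0x10)  # core B still sees its old cached copy

write(core_a, [core_b], 0x10, 3, invalidate=True)
fresh = core_b.read(0x10)  # the copy was invalidated, so B re-reads memory
print(stale, fresh)
```

Without invalidation, core B keeps using an outdated value even though memory has changed. The extra messaging needed to prevent this is part of the cost of adding cores.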

The accuracy of branch prediction also plays an extremely important role in multi-core processors. The more cores a processor has, the higher the likelihood that one of the executed commands will be a jump command, which can change the overall flow of tasks at any time.

Typically, separate cores process commands from different threads, thereby reducing dependency between cores. Therefore, when you open the task manager, you most often see that only one processor core is loaded, while the others are barely working - many programs are simply not designed for multi-threading from the start. Additionally, there may be certain cases in which it is more efficient to use only one processor core rather than wasting resources trying to separate commands.

Processor physical shell

Although much of this article has been devoted to the complex mechanisms of the processor architecture, we should not forget that all this must be created and operated in the form of a real, physical object.

In order to synchronize the operation of all processor components, a clock signal is used. Modern processors typically operate at frequencies between 3.0 GHz and 5.0 GHz, and the situation hasn't changed much over the past decade. With each cycle, billions of transistors turn on and off inside the chip.

Clocks are important to ensure that each stage of the computing pipeline runs perfectly. The number of commands processed by the processor every second depends on them. The frequency can be increased by overclocking, making the chip faster, but this in turn will increase power consumption and heat dissipation.
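The trade-off behind overclocking can be estimated with two common approximations: throughput is roughly clock frequency times instructions per cycle, and dynamic power is roughly proportional to capacitance times voltage squared times frequency. The voltages and frequencies below are made-up example values, and the model ignores leakage and other effects.

```python
def instructions_per_second(frequency_hz, instructions_per_cycle):
    """Approximate throughput: cycles per second times instructions per cycle."""
    return frequency_hz * instructions_per_cycle


def dynamic_power(capacitance, voltage, frequency_hz):
    """Approximate dynamic power: P = C * V^2 * f (arbitrary units here)."""
    return capacitance * voltage ** 2 * frequency_hz


# Hypothetical chip: stock at 4.0 GHz and 1.2 V, overclocked to 4.6 GHz and 1.3 V.
stock_speed = instructions_per_second(4.0e9, 2)
oc_speed = instructions_per_second(4.6e9, 2)
stock_power = dynamic_power(1.0, 1.2, 4.0e9)
oc_power = dynamic_power(1.0, 1.3, 4.6e9)

speed_ratio = oc_speed / stock_speed
power_ratio = oc_power / stock_power
print(f"speed: +{speed_ratio - 1:.0%}, power: +{power_ratio - 1:.0%}")
```

Under these assumptions a 15% gain in speed costs roughly 35% more power, because higher frequencies usually require higher voltage and power grows with the square of the voltage.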

Photo: Michael Dziedzic

Heat is the main enemy of processors. When digital electronics heat up, microscopic transistors can begin to degrade. This in turn can damage the chip if the heat is not removed. To prevent this, each processor is equipped with a heat spreader. The crystal itself may occupy only 20% of the processor's area, because a larger package allows heat to be distributed more evenly across the heatsink. The larger area also leaves room for more pins (contacts) for communicating with other computer components.

Modern processors can have over a thousand input and output pins on the underside. A mobile chip may have only a few hundred, since most of the computing elements are already located inside the chip. Regardless of design, about half of the pins distribute power, and the rest transfer data to and from RAM, the chipset, drives, PCIe devices, etc. High-end processors that draw a hundred or more amps at full load need hundreds of pins to distribute the current evenly. The pins are usually plated with gold to improve conductivity. Different manufacturers arrange the pins differently across their many products.

What is CPU scalping (delidding)?

CPU scalping, better known in English as delidding, is the procedure of removing the cover to replace the thermal paste. It is often done as part of overclocking, or to lower the operating temperature of the CPU.

The procedure itself consists of:

- removing the cover;

- removing old thermal paste;

- crystal cleaning;

- applying a new layer of thermal paste;

- closing the lid.

When carrying out the procedure, you should take into account the fact that one wrong movement can lead to failure of the processor. Therefore, it is better to entrust this event to professionals. If the decision to carry out scalping at home is finally made, then we can advise you to purchase a special device in the form of a clamp for the CPU, which will make it easier to remove the cover without damaging the crystal.

Let's summarize with an example

To recap, let's take a quick look at the architecture of the Intel Core 2 processor. This was back in 2006, so some details may be outdated, but information about new developments is not publicly available.

At the very top is the command cache and the associative translation buffer. The buffer helps the processor determine where the necessary instructions are located in memory. These instructions are stored in the L1 instruction cache and then sent to the predecoder, since decoding occurs in many stages due to the complexities of the x86 architecture. Immediately following these are a branch predictor and a code prefetcher, which reduce the likelihood of potential problems with subsequent commands.

Next, the commands are sent to the command queue. Recall how out-of-order execution allows the processor to select exactly the instruction that is most practical to execute at a particular moment from the queue of current instructions. After the processor has determined the desired command, it is decoded into many micro-operations. While a command may contain a task that is complex for the CPU, micro-ops are granular tasks that are easier for the processor to interpret.

These instructions then go to the register alias table, the reordering buffer, and the reservation station. Unfortunately, it will not be possible to describe their operating principles in detail in one paragraph, since this is information that is usually presented in the final courses of technical universities. In a nutshell, they are all used during out-of-order execution to manage dependencies between commands.

Each processor core has many arithmetic logic units (ALUs) and memory ports. Instructions wait in the reservation station until the execution unit or port they need is free. The instruction is then processed using the L1 data cache and the result is stored for later use, after which the processor can move on to the next task. That's all!
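The waiting behavior of the reservation station can be sketched as a tiny cycle-by-cycle simulation. It assumes that every instruction takes exactly one cycle and has no data dependencies, and the unit kinds `alu` and `mem` are placeholder names; actual schedulers are far more elaborate.

```python
def simulate(waiting, free_units):
    """Issue waiting instructions cycle by cycle; each needs one free unit of its kind.

    waiting:    list of (name, unit_kind) in program order
    free_units: dict mapping unit_kind to the number of units available per cycle
    """
    schedule = []
    while waiting:
        available = dict(free_units)      # all units become free again each cycle
        issued, still_waiting = [], []
        for name, kind in waiting:
            if available.get(kind, 0) > 0:
                available[kind] -= 1      # occupy one unit for this cycle
                issued.append(name)
            else:
                still_waiting.append((name, kind))  # stays in the reservation station
        if not issued:
            raise ValueError("no unit can ever execute the remaining instructions")
        schedule.append(issued)
        waiting = still_waiting
    return schedule


cycles = simulate(
    [("add1", "alu"), ("add2", "alu"), ("load1", "mem"), ("add3", "alu")],
    {"alu": 2, "mem": 1},
)
```

With two ALUs and one memory port, the third addition has to wait for the second cycle, while the load is issued in the first cycle even though it comes later in program order than the instructions that fill the ALUs.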

While this article is not intended to be a comprehensive guide to how every processor works, it should give you a basic understanding of their inner workings and complexity. The full details of how modern processors operate are known only to employees of companies such as Intel and AMD, so the information in this article is just the tip of the iceberg: every point described in the text is the result of a huge amount of research and development.

What does the processor consist of?

To understand how a CPU works, you need to understand what parts it consists of. The main components of the processor are:

- Top cover, a metal plate that protects the internal contents and dissipates heat.

- Crystal (die). This is the most important part of the CPU. The crystal is made of silicon and contains a large number of tiny circuits.

- Textolite substrate, which serves as the contact pad. All parts of the CPU are attached to it, and it carries the contacts through which the processor interacts with the rest of the system.

The top cover is attached with a sealant adhesive that can withstand high temperatures, and thermal paste is used to fill the gap inside the assembled processor. Once it hardens, the paste forms a kind of “bridge” that carries heat away from the crystal.

What is a processor core

If the central processing unit can be called the “brain” of the computer, then the core is the main part of the CPU itself. The core is a set of circuits located on a silicon crystal whose area typically does not exceed a square centimeter. The arrangement of microscopic logic elements that implements the core's operating principle is called its architecture.

A technical detail: in modern processors, the core is attached to the substrate using a “flip-chip” mounting method, which provides maximum connection density.

Each core consists of a certain number of functional blocks:

- interrupt handling block, which is needed for switching quickly between tasks;

- instruction fetch unit, responsible for receiving instructions and passing them on for processing;

- decoding block, which processes incoming instructions and determines the actions they require;

- control unit, which passes decoded instructions to the other functional parts and coordinates the load;

- execution and write-back (save) blocks, which come last.
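The way an instruction moves through the fetch, decode, execute, and save stages listed above can be sketched with a toy interpreter. The two-instruction language (`set` and `add`), the register names `a` and `b`, and the function `run` are invented for the example. Interrupt handling and the control unit are not modeled.

```python
def run(program):
    """Run a tiny made-up instruction set through fetch, decode, execute and write-back."""
    registers = {"a": 0, "b": 0}
    pc = 0                                  # program counter: index of the next instruction
    while pc < len(program):
        raw = program[pc]                   # fetch: read the next instruction
        pc += 1
        op, reg, value = raw.split()        # decode: split into operation and operands
        value = int(value)
        if op == "set":                     # execute: compute the result
            result = value
        elif op == "add":
            result = registers[reg] + value
        else:
            raise ValueError(f"unknown instruction: {raw}")
        registers[reg] = result             # write-back: save the result to a register
    return registers


final = run(["set a 2", "add a 3", "set b 10"])
```

Here each instruction finishes all four steps before the next one starts. A real core overlaps these stages so that several instructions are in flight at once.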

What is a processor socket

A socket is the connector on the motherboard in which the CPU is mounted. For a personal computer, the term applies to both the motherboard and the processor, since each is designed for a particular socket. Sockets differ from one another in size, in the number and type of contacts, and in how the cooler is installed.

The two largest processor manufacturers, Intel and AMD, are long-standing rivals, and each offers its own sockets that accept only CPUs of its own production. The number in the name of many sockets, for example LGA 775, indicates the number of contacts or pins. In technological terms, sockets may also differ in:

- the presence of additional controllers;

- support for the processor's graphics core;

- performance.

The socket can also affect the following computer operating parameters:

- type of supported RAM;

- front-side bus (FSB) frequency;

- indirectly, the PCIe version and the SATA connector.

A dedicated socket for mounting the central processor lets the user upgrade the system or replace the CPU if it fails.

The processor socket is the connector used to install the CPU on the motherboard

Graphics core in the processor: what is it?

In addition to the main cores, a CPU can also contain a graphics processor. What is it, and why is such a component needed? It should be noted right away that an integrated graphics core is optional and is not present in every processor. A processor that has one handles both the CPU's basic computing tasks and graphics output.

The reasons why manufacturers combine the two functions in one chip are:

- reduced power consumption, because a single smaller device draws less power and costs less to cool;

- compactness;

- cost reduction.

Integrated, or built-in, graphics is most often found in laptops and low-cost PCs intended for office work, where graphics requirements are modest.